Transform Windows 7 Into Ubuntu 11.04 Natty Narwhal With Ubuntu Skin Pack

Some Linux users find it hard to adjust to the Windows operating systems at the office, as they are more accustomed to operating systems like Ubuntu. Over the years, some transformation packs have helped Mac and Linux users migrate to Windows 7 by giving it a Mac or Linux look. These transformation packs have also been handy for people who simply prefer another operating system’s interface on Windows 7.

Ubuntu fans who for some reason happen to use Windows 7 are in for a treat with a wonderful Ubuntu Skin Pack released by user hamed. This is the same developer who previously brought us the Mac OS X Lion Skin Pack and the Windows 8 Transformation Pack. Like those two, this Ubuntu 11.04 transformation pack does not require any third-party theme patching application. Just launch the .exe file, select the desired options and install the theme with a simple installation wizard.

Read more: Addictive tips

Patent 5,893,120 Reduced To Pure Math

Posted by jasper22 at 15:35

US Patent #5,893,120 has been reduced to mathematical formulae as a demonstration of the oft-ignored fact that there is an equivalence relation between programs and mathematics. You may recognize Patent #5,893,120 as the one over which Google was ordered to pay $5M for infringing due to some code in Linux. It should be interesting to see how legal fiction will deal with this. Will Lambda calculus no longer be 'math'? Or will they just decide to fix the inconsistency and make mathematics patentable?

Read more: Slashdot

Android Commander Is A Swiss Army Knife For Android Devices

Posted by jasper22 at 15:26

The Android world is full of tools, hacks and guides that let you customize your device the way you see fit. Most of these utilize the official Android SDK tools provided by Google, but using these isn’t always the simplest of tasks. Enter Android Commander – a free app for Windows that is basically a front-end for the core Android tools laid out in such a way that enables even a novice to use them without much hassle. For our detailed review and screenshot tour of this gem of an app, continue reading.

We at AddictiveTips love Android and we know you do too. That’s why we do our best to bring you apps and tools that help you get the most out of your Android phone or tablet. While most apps are straightforward to install and use, more advanced modifications often involve command-line utilities and complex methods that can be intimidating to those used to GUI tools. Although we try to make our guides as safe as possible, there is still a relatively high risk of bricking your device through a mistake in entering a command. That’s why we were delighted when we came across Android Commander: it provides a well laid-out graphical front-end for several standard Android tools in one package, which makes using these tools a breeze!

Read more: Addictive tips

ConFavor – Add Frequently Used File And Folders In Windows Shell Menu

Posted by jasper22 at 15:09

Windows right-click context menu extensions are a great way to quickly access favorite applications, files and folders, but they generally require long steps to configure and register each option. In the past, we have covered a handful of context menu extensions that keep frequently used files, folders and apps at your fingertips, such as CMenu, Moo0 RightClicker, and FileMenu Tools. ConFavor offers the easiest way to add a customized menu (a Favorites menu) to the Windows right-click context menu. It not only integrates with the Windows right-click menu, but also adds itself to My Computer (the Explorer window) to let you quickly add files and folders via simple drag & drop. You can optionally use its main interface for the same purpose, which provides simple options to add, rename and delete user-added context menu entries.

Read more: Addictive tips

Create your first CLR Trigger in SQL Server 2008 using C#

Posted by jasper22 at 10:35

What are CLR Triggers?

CLR triggers are triggers implemented on the .NET Common Language Runtime (CLR).

CLR integration, introduced in SQL Server 2005 and available in SQL Server 2008, allows database objects (such as triggers) to be coded in .NET.

Objects that perform heavy computation or need to reference objects outside SQL Server are good candidates for coding in the CLR.

We can code both DDL and DML triggers by using a supported CLR language like C#.

Follow these simple steps to create a CLR trigger:

Step 1: Create the CLR class. We code the CLR class module with references to the namespaces required to compile CLR database objects.

Add the following references:

    using Microsoft.SqlServer.Server;
    using System.Data.SqlTypes;

The complete code for the class is:

    using System;
    using System.Collections.Generic;
    using System.Linq;
    using System.Text;
    using System.Data.Sql;
    using System.Data.SqlClient;
    using Microsoft.SqlServer.Server;
    using System.Data.SqlTypes;
    using System.Text.RegularExpressions;

    namespace CLRTrigger
    {
        public class CLRTrigger
        {
            // Trigger body: sends each row of the "inserted" pseudo-table to the client.
            public static void showinserted()
            {
                SqlTriggerContext triggContext = SqlContext.TriggerContext;
                // The context connection reuses the session that fired the trigger.
                SqlConnection conn = new SqlConnection("context connection=true");
                conn.Open();
                SqlCommand sqlComm = conn.CreateCommand();
                SqlPipe sqlP = SqlContext.Pipe;
                SqlDataReader dr;
                sqlComm.CommandText = "SELECT pub_id, pub_name from inserted";
                dr = sqlComm.ExecuteReader();
                while (dr.Read())
                    sqlP.Send((string)dr[0] + "," + (string)dr[1]);
            }
        }
    }

Step 2: Compile this class. The build generates CLRTrigger.dll in the project's BIN folder. After compiling, we need to load the assembly into SQL Server.

Step 3: Now we use the T-SQL CREATE ASSEMBLY command to register CLRTrigger.dll in SQL Server.

    CREATE ASSEMBLY triggertest
    FROM 'C:\CLRTrigger\CLRTrigger.dll'
    WITH PERMISSION_SET = SAFE

Read more: C# Corner

When to go for XBAP, WPF and Silverlight

Posted by jasper22 at 10:20

Recently I gave a session on Silverlight 4, where participants asked me about the differences between Silverlight, XBAP and WPF. Although I answered in the session, I want to clarify this for other participants who could not attend and have the same doubt.

Firstly, XBAP is a XAML Browser Application. It requires the .NET Framework to be installed on the machine where it runs. It is deployed through ClickOnce and gets updated to the latest version. An XBAP application must be online and runs within the browser's scope. It is almost the same as WPF, but with browser scope. It works only on the Windows operating system, and only in Firefox and IE. WCF is not supported. I think it is the best option for intranet browser applications.

Secondly, Silverlight requires a compact version of the .NET Framework to be present in the browser. It works everywhere, independent of platform and browser. Its limitations include triggers and the lack of DynamicResource. It is rendered along with the HTML content.

Read more: WELCOME TO MAHENDER'S BLOG

Visual Studio go faster! Faster!… (Well okay, maybe just phone home and tell Microsoft you’re feeling a little slow…) - Visual Studio PerfWatson

Posted by jasper22 at 10:17

Would you like your performance issues to be reported automatically? Well now you can, with PerfWatson extension! Install this extension and assist the Visual Studio team in providing a faster future IDE for you.

We’re constantly working to improve the performance of Visual Studio and take feedback about it very seriously. Our investigations into these issues have found that there are a variety of scenarios where a long running task can cause the UI thread to hang or become unresponsive. Visual Studio PerfWatson is a low overhead telemetry system that helps us capture these instances of UI unresponsiveness and report them back to Microsoft automatically and anonymously. We then use this data to drive performance improvements that make Visual Studio faster.

Here’s how it works: when the tool detects that the Visual Studio UI has become unresponsive, it records information about the length of the delay and the root cause, and submits a report to Microsoft. The Visual Studio team can then aggregate the data from these reports to prioritize the issues that are causing the largest or most frequent delays across our user base. By installing the PerfWatson extension, you are helping Microsoft identify and fix the performance issues that you most frequently encounter on your PC.

To allow PerfWatson to submit performance reports to Microsoft, please make sure that Windows Error Reporting (WER) is enabled on your machine; see the section on how to configure WER settings. PerfWatson employs the WER service to send the collected data to Microsoft.

Using PerfWatson

PerfWatson is an automatic feedback service. Once it is installed, all you need to do is use the product, and it will automatically create an error report for every UI delay you experience in the product. It stores these error reports in %LOCALAPPDATA%\PerfWatson. This data is then submitted to Microsoft on the next Visual Studio launch.

If your Windows Error Reporting permissions are set to Automatically check for solutions and upload data, the performance reports will be submitted to Microsoft automatically. Otherwise, PerfWatson will prompt the user for permission to send the collected data. This prompt can be disabled by checking “Do not show this dialog again. Report problems automatically” on the dialog.

Read more: Greg's Cool [Insert Clever Name] of the Day

Read more: Visual Studio PerfWatson

Three helpful SSH tips for developers

Posted by jasper22 at 09:48

If you're a developer that deploys stuff to unix systems, then one of the most common tools you interact with is SSH. It never ceases to amaze me, in spite of this, how little developers really know about SSH.

SSH config file

The first thing any developer needs to know is that SSH has a config file that allows you to configure defaults for SSH on a host by host basis. It also allows aliases, and bash completion can use this config file to make sshing into a system much easier. This file lives under the .ssh folder in your home directory, ~/.ssh/config. Here's a sample of things that I have in my config file.

    Host home
        HostName jamesroper.homelinux.org
        Port 2222
        User james

    Host sshjump
        HostName sshjump.atlassian.com
        User jamesroper
        ForwardAgent yes

So I can SSH home by running ssh home and that will automatically use james as the username, jamesroper.homelinux.org as the host name, and 2222 as the port number. For details on all the configuration just read the man page, man ssh_config. You'll see that you can configure everything including port forwarding and much more.

Jump boxes

There are a number of times when I need to SSH into a jump box to SSH to another system. The annoying thing about this is to get to a system, I need to run two commands, and scp is even more annoying because I have to copy first to the jump box, then to the remote system.

Enter ProxyCommand. The proxy command config option allows you to specify a command that SSH will run first in order to establish the connection to the remote system, and then it will pipe its communication through that command. Combined with nc on the jump box end to create a TCP connection to your destination host, this can be used to tunnel an SSH connection through another SSH connection. The configuration in the SSH config file looks like this:

    Host *.atlassian.com
        ProxyCommand nohup ssh sshjump nc -w1 %h %p

The other thing I've made use of here is wildcards, and the ability for SSH to substitute the host name and port number for the particular host into option values. Now I can run ssh jira.atlassian.com, and the SSH connection will be piped through an ssh connection to sshjump, which is an alias defined above as sshjump.atlassian.com. I can also scp directly.
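The token substitution described above can be modelled in a few lines. This is a simplified Python sketch and not OpenSSH's implementation: the function name `expand_proxy_command` is hypothetical, and only the `%h`, `%p` and `%%` tokens are handled.

```python
def expand_proxy_command(template: str, host: str, port: int) -> str:
    """Expand %h (host), %p (port) and %% (literal percent) in a command template."""
    out = []
    i = 0
    while i < len(template):
        ch = template[i]
        if ch == "%" and i + 1 < len(template):
            token = template[i + 1]
            if token == "h":
                out.append(host)
            elif token == "p":
                out.append(str(port))
            elif token == "%":
                out.append("%")
            else:
                # Unknown token: left untouched in this simplified model.
                out.append(ch + token)
            i += 2
        else:
            out.append(ch)
            i += 1
    return "".join(out)


if __name__ == "__main__":
    print(expand_proxy_command("ssh sshjump nc -w1 %h %p", "jira.atlassian.com", 22))
```

With the wildcard entry above, every host matching `*.atlassian.com` gets its own name and port filled into the same command line, which is why one config stanza covers all of them.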

Read more: Atlassian blog

USB & CD/DVD Blocking: One Way to Keep Data Free of Theft

Posted by jasper22 at 09:48

Introduction

Have you ever thought of blocking access to USB memory and CD drives? I will introduce an example of this. Perhaps some of you will not be interested, but I think this technique will be useful for larger-scale projects. I based it on a sample in the Microsoft Windows DDK. The sample is implemented as a file system filter driver. As you know, file system filter drivers are commonly used in anti-virus software, and they can be used for other purposes too. In this sample, we can not only block access but also log the paths of files written to USB.

How to Use

This sample consists of two .sys files and a DLL file. To test it, first execute install.exe in the 1_install folder. You can uninstall it by executing uninstall.exe in the 3_uninstall folder.

Click the OK button first and then test the functions.

Using the Code

Here I will explain the file system filter driver. There are two ways of developing a file system filter driver. One is to use the filter functions supported by FLTLIB.DLL in the system32 directory. In this case, we can communicate with the driver by using the FilterConnectCommunicationPort() and FilterSendMessage() functions. The other is to get the file system driver's pointer and attach our driver to it by using the IoAttachDeviceToDeviceStack() function.

DriverEntry

The DriverEntry() function should be written as follows:

    NTSTATUS
    DriverEntry (
        __in PDRIVER_OBJECT DriverObject,
        __in PUNICODE_STRING RegistryPath
        )
    {
        PSECURITY_DESCRIPTOR sd;
        OBJECT_ATTRIBUTES oa;
        UNICODE_STRING uniString;
        NTSTATUS status;
        PFLT_VOLUME fltvolume;
        HANDLE handle = (PVOID)-1;
        PROCESS_DEVICEMAP_INFORMATION ldrives;
        ULONG drive, bit;
        STRING ansiString, ansiVolString;
        UNICODE_STRING unString, unVolString;
        CHAR szDrv[20];
        ULONG sizeneeded;
        HANDLE hThread;
        OBJECT_ATTRIBUTES oaThread;
        KIRQL irql;
        ULONG i;

        try {
            // Initialize the driver's global state.
            ACDrvData.LogSequenceNumber = 0;
            ACDrvData.MaxRecordsToAllocate = DEFAULT_MAX_RECORDS_TO_ALLOCATE;
            ACDrvData.RecordsAllocated = 0;
            ACDrvData.NameQueryMethod = DEFAULT_NAME_QUERY_METHOD;
            ACDrvData.DriverObject = DriverObject;

            InitializeListHead( &ACDrvData.OutputBufferList );
            KeInitializeSpinLock( &ACDrvData.OutputBufferLock );

    #if ACDRV_LONGHORN
            //
            // Dynamically import FilterMgr APIs for transaction support
            //
            ACDrvData.PFltSetTransactionContext =
                FltGetRoutineAddress( "FltSetTransactionContext" );
            ACDrvData.PFltGetTransactionContext =
                FltGetRoutineAddress( "FltGetTransactionContext" );
            ACDrvData.PFltEnlistInTransaction =
                FltGetRoutineAddress( "FltEnlistInTransaction" );
    #endif

            SpyReadDriverParameters(RegistryPath);

Read more: Codeproject

Why don't the file timestamps on an extracted file match the ones stored in the ZIP file?

Posted by jasper22 at 09:42

A customer liaison had the following question:

My customer has ZIP files stored on a remote server being accessed from a machine running Windows Server 2003 and Internet Explorer Enhanced Security Configuration. When we extract files from the ZIP file, the last-modified time is set to the current time rather than the time specified in the ZIP file. The problem goes away if we disable Enhanced Security Configuration or if we add the remote server to our Trusted Sites list. We think the reason is that if the file is in a non-trusted zone, the ZIP file is copied to a temporary location and is extracted from there, and somehow the date information is lost.

The customer is reluctant to turn off Enhanced Security Configuration (which is understandable) and doesn't want to add the server as a trusted site (somewhat less understandable). Their questions are

Why is the time stamp changed during the extract? If we copy the ZIP file locally and extract from there, the time stamp is preserved.

Why does being in an untrusted zone affect the behavior?

How can we avoid this behavior without having to disable Enhanced Security Configuration or adding the server as a trusted site?

The customer has an interesting theory (that the ZIP file is copied locally) but it's the wrong theory. After all, copying the ZIP file locally doesn't modify the timestamps stored inside it.

Since the ZIP file is on an untrusted source, a zone identifier is being applied to the extracted file to indicate that the resulting file is not trustworthy. This permits Explorer to display a dialog box that says "Do you want to run this file? It was downloaded from the Internet, and bad guys hang out there, bad guys who try to give you candy."

And that's why the last-modified time is the current date: Applying the zone identifier to the extracted file modifies its last-modified time, since the file on disk is not identical to the one in the ZIP file. (The one on disk has the "Oh no, this file came from a stranger with candy!" label on it.)
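The two timestamps involved can be told apart with a small experiment. The following Python sketch uses the standard `zipfile` module; it does not model Windows zone identifiers at all, and the file names and the helper names (`stored_timestamps`, `extract_preserving_mtime`, `demo`) are made up for illustration. It shows that the time recorded inside the archive survives a copy of the ZIP file, and that the on-disk last-modified time is a separate value that whoever writes the extracted file gets to set.

```python
import os
import shutil
import tempfile
import time
import zipfile


def stored_timestamps(zip_path):
    """Return {entry name: (Y, M, D, h, m, s)} as recorded inside the archive."""
    with zipfile.ZipFile(zip_path) as zf:
        return {info.filename: info.date_time for info in zf.infolist()}


def extract_preserving_mtime(zip_path, dest_dir):
    """Extract every entry, then set each file's mtime from the archive entry."""
    with zipfile.ZipFile(zip_path) as zf:
        for info in zf.infolist():
            target = zf.extract(info, dest_dir)
            # date_time carries no time zone; it is treated as local time here.
            mtime = time.mktime(info.date_time + (0, 0, -1))
            os.utime(target, (mtime, mtime))


def demo():
    with tempfile.TemporaryDirectory() as tmp:
        original = os.path.join(tmp, "original.zip")
        entry = zipfile.ZipInfo("report.txt", date_time=(2011, 5, 2, 9, 42, 0))
        with zipfile.ZipFile(original, "w") as zf:
            zf.writestr(entry, "hello")

        # Copying the archive does not touch the timestamps stored inside it.
        copy = os.path.join(tmp, "copy.zip")
        shutil.copyfile(original, copy)

        out_dir = os.path.join(tmp, "out")
        extract_preserving_mtime(copy, out_dir)
        extracted_mtime = os.path.getmtime(os.path.join(out_dir, "report.txt"))
        return stored_timestamps(original), stored_timestamps(copy), extracted_mtime
```

Any write to the extracted file after the extractor has set its time, which is the effect the article attributes to applying the zone identifier, moves the on-disk last-modified time forward again.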

Read more: The old new thing

Why doesn't ConcurrentBag implement ICollection?

Posted by jasper22 at 09:42

Hopefully you’ve encountered by now the System.Collections.Concurrent namespace. It’s new to .NET 4, and it has many useful data structures which are optimized for multi-threaded usage. ConcurrentBag, for instance, is an unordered collection of objects, which multiple threads can add and remove objects from at the same time.

Recently I needed to test that some piece of code was thread-safe. To do that, I wanted to run it once synchronously and once asynchronously. It looked something like this:

    private static IEnumerable<ComputationResult> ComputeSynchronous(IEnumerable<string> inputs)
    {
        Console.WriteLine("Starting synchronous correction.");
        var results = new ConcurrentBag<ComputationResult>();
        foreach (var input in inputs)
        {
            //Some more printouts and work here
            var result = computation.Compute(input);
            //Some more printouts and work here
            results.Add(result);
        }
        return results;
    }

And so, the first method computes stuff in one thread, and the second does the same computation on the same inputs, but inside a parallel loop. This is all nice and simple, but I was a little bothered by the fact that the code inside the loop in the two methods repeats itself. The logical conclusion would be to extract a method, of course. This should look something like this:
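The refactoring idea, one shared loop body used by both the sequential and the parallel version, can be sketched in Python. This is an illustration only and not the author's C# code: `process_one`, `run_sequential`, `run_parallel` and `drain` are hypothetical names, and a thread-safe `queue.SimpleQueue` stands in for the concurrent collection.

```python
from concurrent.futures import ThreadPoolExecutor
from queue import SimpleQueue


def process_one(compute, item, results):
    """Shared loop body: compute one result and add it to a thread-safe container."""
    results.put(compute(item))


def drain(results):
    """Move everything out of the queue into a plain list (call after work is done)."""
    items = []
    while not results.empty():
        items.append(results.get())
    return items


def run_sequential(compute, inputs):
    results = SimpleQueue()
    for item in inputs:
        process_one(compute, item, results)
    return drain(results)


def run_parallel(compute, inputs, workers=4):
    results = SimpleQueue()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # list() waits for every task and re-raises any worker exception.
        list(pool.map(lambda item: process_one(compute, item, results), inputs))
    return drain(results)
```

Because both runners accept the same container type, the extracted body does not need to know which of them is calling it; the order of results in the parallel case is not guaranteed.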

Read more: Doron's .NET Space

How To Network Boot (PXE) The Ubuntu LiveCD

With Ubuntu’s latest release out the door, we thought we’d celebrate by showing you how to make it centrally available on your network by using network boot (PXE).

Overview

We already showed you how to set up a PXE server in the “What Is Network Booting (PXE) and How Can You Use It?” guide. In this guide we will show you how to add the Ubuntu LiveCD to the boot options.

If you are not already using Ubuntu as your number one “go to” tool for troubleshooting, diagnostics and rescue procedures, it will probably replace all of the tools you are currently using. Also, once the machine has booted into the Ubuntu live session, it is possible to perform the OS setup like you normally would. The immediate upshot of using Ubuntu over the network is that if you’re already using the CD version, you will never again be looking for the CDs you forgot in the CD drives.

Read more: How-to-geek

sslsnoop v0.6 – Dump Live Session Keys From SSH & Decrypt Traffic On The Fly

Posted by jasper22 at 09:57

sslsnoop dumps live session keys from OpenSSH and can also decrypt the traffic on the fly.

Works if scapy doesn’t drop packets. Using pcap instead of SOCK_RAW now helps a lot.

Works better on interactive traffic when there is no traffic at the time of the ptrace. After that, it follows the flow.

Dumps one file per fd in outputs/

Attaching to a process is quicker with --addr 0xb788aa98, as provided by haystack: INFO:abouchet:found instance @ 0xb788aa98

How to get a pickled session_state file: $ sudo haystack --pid `pgrep ssh` sslsnoop.ctypes_openssh.session_state search > ss.pickled

Not all ciphers are implemented.

Working ciphers: aes128-ctr, aes192-ctr, aes256-ctr, blowfish-cbc, cast128-cbc

Partially working ciphers (INBOUND only?!): aes128-cbc, aes192-cbc, aes256-cbc

Non-working ciphers: 3des-cbc, 3des, ssh1-blowfish, arcfour, arcfour1280

Read more: DarkNet.org.uk

WCF Extensibility – Message Formatters

Posted by jasper22 at 09:56

Message formatters are the components that translate between CLR operations and the WCF Message object. Their role is to convert all the operation parameters and return values (possibly via serialization) into a Message on output, and to deconstruct the message into parameters and return values on input. Anytime a new format is to be used (be it a new serialization format for currently supported CLR types, or support for a new CLR type altogether), a message formatter is the interface you’d implement to enable this scenario. For example, even though the type System.IO.Stream is not serializable (it’s even abstract), WCF allows it to be used as the input or return value for methods (for example, in the WCF REST Raw programming model), and a formatter deals with converting it into messages. Like the message inspectors, there are two versions, one for the server side (IDispatchMessageFormatter), and one for the client side (IClientMessageFormatter).

Among the extensibility points listed in this series, the message formatters are the first kind to be required in the WCF pipeline – a service does not need to have any service / endpoint / contract / operation behavior, nor any message / parameter inspector. If the user doesn’t add any of those, the client / service will just work (some behaviors are automatically added to the operations when one adds an endpoint, but that’s an implementation detail for WCF – they aren’t strictly necessary). Formatters, on the other hand, are required (to bridge the gap between the message world and the operations using CLR types). If we don’t add any behaviors to the service / endpoint / contract / operation that sets a formatter, WCF will add one formatter to the operation (usually via the DataContractSerializerOperationBehavior, which is added by default in all operation contracts).

Public implementations in WCF

None. As with most of the runtime extensibility points, there are no public implementations of either the dispatch or the client message formatter. There are a lot of internal implementations, though, such as a formatter which converts operation parameters into a message (both using the DataContractSerializer and the XmlSerializer), formatters which map parameters to the HTTP URI (used in REST operations with UriTemplate), among others.

Interface declaration

    public interface IDispatchMessageFormatter
    {
        void DeserializeRequest(Message message, object[] parameters);
        Message SerializeReply(MessageVersion messageVersion, object[] parameters, object result);
    }

    public interface IClientMessageFormatter
    {
        Message SerializeRequest(MessageVersion messageVersion, object[] parameters);
        object DeserializeReply(Message message, object[] parameters);
    }

Read more: Carlos' blog

SQL Server: Keeping Log of Each Query Executed Through SSMS

Posted by jasper22 at 09:56

During a normal working day, a DBA or developer executes countless queries using SQL Server Management Studio. The queries considered important are saved, but roughly 80% of query windows are closed without pressing the save button. After a few minutes, hours or even days, most DBAs and developers like me want back the queries they executed but did not save.

SQL Server Management Studio has no feature for getting unsaved queries back. If you need to keep a log of each query you have executed in SSMS, install the free tool “SSMS Tools Pack 1.9” by Mladen Prajdić, which contains “Query Execution History” and much more.

Read more: Connect SQL

Send Cookies When Making WCF Service Calls

Posted by jasper22 at 09:55

Introduction

This article presents an example on how to send cookies when making WCF service calls.

Background

Once in a while, you may find that you want to send some information in the form of "Cookies" to the server when making WCF calls. For example, if the WCF service is protected by a "Forms Authentication" mechanism, you will need to send the authentication cookie when making the WCF call to gain the required access to the service.

If you are calling a "REST" service using the "WebClient" class, this should not be a difficult task. You can simply work on the "CookieContainer" property of the "HttpWebRequest" class.

If you are calling a regular WCF service, and your client proxies are generated by the "Add Service Reference" tool in Visual Studio, the method to send cookies is not so obvious.

This article presents an example of how to send cookies when making WCF calls using the Visual Studio generated client proxies.

The WCF service

The example WCF service is implemented in the "ServiceWithCookies.svc.cs" file in the "WCFHost" project.

    using System;
    using System.Collections.Generic;
    using System.Linq;
    using System.Runtime.Serialization;
    using System.ServiceModel;
    using System.Text;
    using System.Web;
    using System.ServiceModel.Activation;

    namespace WCFHost
    {
        [DataContract]
        public class CookieInformation
        {
            [DataMember(Order = 0)]
            public string Key { get; set; }

            [DataMember(Order = 1)]
            public string Value { get; set; }
        }

        [ServiceContract]
        public interface IServiceWithCookies
        {
            [OperationContract]
            CookieInformation EchoCookieInformation();
        }

        [AspNetCompatibilityRequirements(RequirementsMode
            = AspNetCompatibilityRequirementsMode.Required)]
        public class ServiceWithCookies : IServiceWithCookies
        {
            public CookieInformation EchoCookieInformation()
            {
                var request = HttpContext.Current.Request;
                string key = "NA";
                string value = "NA";

                if (request.Cookies.Count > 0)
                {
                    key = request.Cookies.Keys[0];
                    value = request.Cookies[key].Value;
                }

Read more: Codeproject

Customization of Project and Item Templates in Visual Studio

Posted by jasper22 at 09:54

Introduction

This article explains how to customize the project and item templates in Visual Studio. The article comes in three parts. The first part explains how to customize project templates. The second part explains how to customize item templates, and the third part explains how to create text template generators for project items.

Though Visual Studio offers different types of project templates for different scenarios, a lot of the initial design and coding is common and has to be repeated for every project. To make this work easier, Visual Studio provides features for customizing the project and item templates for different scenarios.

Using the code

First, we have to create a new project template.

I am creating a new project template for an ASP.NET Web Application, and adding custom parameters to be accepted from the user which will be updated in the project files at the time of creation of the project.

The following are the steps to create a new project template:

Create a new ASP.NET Web Application and name it MyWebTemplate.

Add a new folder named Views in the project.

Add a new .aspx page in the Views folder and name it ViewPage.aspx.

Add a custom parameter named $Title$ in the ViewPage.aspx file.
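The effect of a parameter such as $Title$ can be pictured as a text substitution performed when the project is created. The Python sketch below is a simplified model of that idea, not Visual Studio's actual template engine; `expand_template` is a hypothetical helper, and the sample value "Home" is invented.

```python
import re

# A template parameter is a name wrapped in dollar signs, e.g. $Title$.
_PARAM = re.compile(r"\$(\w+)\$")


def expand_template(text, values):
    """Replace each $name$ with values[name]; leave unknown parameters untouched."""
    def substitute(match):
        name = match.group(1)
        return values.get(name, match.group(0))
    return _PARAM.sub(substitute, text)


if __name__ == "__main__":
    page = "<title>$Title$</title>"
    print(expand_template(page, {"Title": "Home"}))
```

Leaving unknown parameters in place, rather than replacing them with an empty string, makes a missing value easy to spot in the generated file.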

Read more: Codeproject

Speeding Up Visual Studio, Part I: Unity Builds

Posted by jasper22 at 09:51

This is a translation of an article by Oliver Reeve about one way to speed up project compilation. The author managed to cut compilation time from 55 minutes to 6. In my own project I got a performance gain of about 22% (roughly a minute). That is not as striking as the author's result, but multiplying that minute by the number of builds per day, the number of developers, and the length of the project gave me savings that clearly justify the cost of reading the article and configuring the projects. The solution is described for Visual Studio and C++, but the idea applies to other IDEs, compilers, and programming languages (though not all). In the next article I look at a couple more ways to speed up compilation of a solution.

Anyone who has worked on a large C++ (or C) project has felt the full horror of long compile times. The first "big" C++ project I worked on took 10 minutes to build. That was far longer than anything I had worked with before. Later in my career, when I joined the game development industry, I experienced the compilation problem of a truly large project. Our game took about an hour to build.

And here is the solution we adopted: Unity Builds (hereafter UB).

Some readers who know C and C++ well may decide that what I am about to describe is some kind of hack. I have no firm opinion on that. There is certainly something hack-like about the idea, but it really does solve the problem of long compile times on most platforms and for most compilers.

Before I go into the details and explain how to set up UB, I want to make clear that this mechanism is not meant to replace the normal way of building the release version of a solution. The main idea is to substantially cut build times for developers who spend at least 8 hours a day modifying code. Fixing bugs and adding functionality mean constant recompilation. Every compilation is a wait that everyone fills as best they can.
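A unity build, as the term is commonly used, compiles one source file that #includes many .cpp files, so the compiler parses shared headers once instead of once per file. The excerpt does not show the author's setup, so the following Python sketch only illustrates how such a file could be generated; the function names and the output name unity_build.cpp are assumptions.

```python
from pathlib import Path


def unity_source(cpp_files):
    """Build the text of a unity file that #includes every given .cpp file."""
    lines = ["// Generated file: do not edit by hand."]
    for path in sorted(Path(p).as_posix() for p in cpp_files):
        lines.append(f'#include "{path}"')
    return "\n".join(lines) + "\n"


def write_unity_file(source_dir, output_name="unity_build.cpp"):
    """Collect *.cpp under source_dir (except the output itself) into one unity file."""
    root = Path(source_dir)
    sources = [p.relative_to(root) for p in root.rglob("*.cpp") if p.name != output_name]
    output = root / output_name
    output.write_text(unity_source(sources), encoding="utf-8")
    return output
```

In a setup like this, the individual .cpp files would be excluded from the unity configuration so that only the generated file is compiled, while the regular release configuration keeps building them one by one.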

Read more: Habrahabr.ru

Asynchronous Multi-threaded ObservableCollection

Posted by jasper22 at 09:45

Introduction

ObservableCollections are mainly used in WPF to notify bound UI components of any changes. Those notifications usually translate to UI component updates, normally due to binding. UI component changes can only occur on the UI thread. Whenever you have lengthy work to do, you should do it on a worker thread to improve the responsiveness of the UI. But sometimes, UI updates are very lengthy too. In order to decouple the worker thread from the UI thread, I added/modified functions that let me process modifications to the ObservableCollection on the UI thread, either synchronously or asynchronously, when called from any thread. All of that occurs transparently by calling the appropriate BeginInvoke (async) or Invoke (sync) whenever needed. But be careful: do not rely on any reads from the ObservableCollection from a worker thread if you use any of the added asynchronous functions.
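The difference between the asynchronous and synchronous paths, and the reason for the warning about reads, can be shown with a small model. This Python sketch is not the author's WPF code: `DispatchedList`, `add_async`, `add_sync` and `pump` are hypothetical names, and a plain queue drained by an owner thread stands in for the WPF Dispatcher.

```python
import queue
import threading


class DispatchedList:
    """List whose mutations are applied only by the thread that calls pump()."""

    def __init__(self):
        self.items = []
        self._pending = queue.Queue()

    def add_async(self, item):
        """Queue an append and return immediately (comparable to BeginInvoke)."""
        self._pending.put((item, None))

    def add_sync(self, item):
        """Queue an append and block until the owner thread has applied it
        (comparable to Invoke). Must not be called from the owner thread."""
        done = threading.Event()
        self._pending.put((item, done))
        done.wait()

    def pump(self):
        """Run on the owner thread: apply all queued appends in order."""
        while True:
            try:
                item, done = self._pending.get_nowait()
            except queue.Empty:
                return
            self.items.append(item)
            if done is not None:
                done.set()
```

After `add_async` returns, the item is still only in the pending queue, so a worker that reads `items` right away does not see its own change; that is the situation the warning above describes.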

Background

I found a few things but never exactly what I wanted. This is why I’m writing this article now. I took a look at: http://www.codeproject.com/KB/dotnet/MTObservableCollection.aspx (Paul Ortiz solution). And also http://powercollections.codeplex.com/. They weren’t what I expected. I also had some concerns about the first link (explained later).

Read more: Codeproject

DirectX End-User Runtimes (June 2010)

Posted by jasper22 at 09:44

This download provides the DirectX end-user redistributable that developers can include with their product.

Read more: MS Download

MS11-028 Vulnerability Details

Posted by jasper22 at 09:44

The bug report apparently originated on stackoverflow and was subsequently filed on Microsoft connect. So it has been public since August 4, 2010 and easily findable in connect (that's how I found it, by searching for JIT bugs). Microsoft seems to believe that since they have not seen any widespread exploits of .NET vulnerabilities (see claim here) that this was not a high priority issue.

I've drawn my conclusions and disabled running all .NET code in the browser on my system.

It should also be noted that using ClickOnce, this issue can be used to execute code outside of the Internet Explorer Protected Mode sandbox.

Here is a simple example that uses the bug to violate type safety:

    using System;
    using System.Runtime.InteropServices;
    using System.Runtime.CompilerServices;

    [StructLayout(LayoutKind.Sequential, Pack = 1)]
    public struct RunLong {
        public byte Count;
        public long Value;

        public RunLong(byte count, long value) {
            Count = count;
            Value = value;
        }
    }

    class Program {
        static void Main(string[] args) {
            try {
                Whoop(null, null, args, null, Frob(new RunLong(1, 0)));
            } catch { }
        }

        [MethodImpl(MethodImplOptions.NoInlining)]
        private static object Frob(RunLong runLong) {
            return null;
        }

Read more: IKVM.NET Weblog

New hack exposes details of '25m Sony customers'

Posted by

jasper22

at

09:43

|

Sony Online Entertainment old database exposed, despite previous assurances

Sony last night confessed that more customer data has been hacked, with the personal sensitive information of some 24.6 million SOE users exposed.

The total number of compromised Sony customer profiles has now reached over 100 million.

A new mass-email alert will be sent to 24.6 million Sony Online Entertainment customers, who play games such as Everquest and DC Universe Online.

The 24.6 million user number is unconfirmed, though cited in a Reuters report.

One individual has also accessed an outdated Sony database from 2007, the company admitted.

This contains around 12,700 credit card numbers and expiration dates, and about 10,700 direct debit records which show account details.

The company provided no explanation on why it had kept an “outdated” server accessible.

Another separate server, which contains up-to-date credit card data, has not been compromised, Sony added.

All Sony Online Entertainment games have been switched off.

Read more: Develop

Chromeless 0.2 - desktop apps the web way

Posted by

jasper22

at

09:42

|

Mozilla Labs Chromeless has now reached version 0.2 which brings lots of new features. Will it succeed in letting the web onto the desktop?

We reported on Chromeless 0.1 at the end of 2010 and now we have version 0.2 which marks a big upgrade in the project. Chromeless is a project aimed to make use of the operating environment provided by the Firefox rendering engine. This was something that has always been possible but it involved using a technology called XUL (pronounced ZUUL) and other arcane frameworks.

Now Chromeless aims to take the rough edges off the environment and present it in a form that any web programmer can use. In short, if you can create a web page then you should be able to use Chromeless to create a desktop app.

Version 0.2 introduces lots of new features but the key one is probably the upgrade to the new rendering engine used in Firefox 4. This brings HTML5 facilities to Chromeless. This means that you can write code in JavaScript 1.8.5, use multi-touch, WebM video and benefit from GPU acceleration.

Also important is the ability to package your newly created app so that it is simple to install. After all, the whole app model is supposed to be about ease of installation, and without this Chromeless has no advantages. It creates a package that is completely standalone in the sense that it includes the entire platform, so you don't have to have Firefox installed to run the app.

Another big step in the right direction is the ability to use the menu API to create the app's menu structure using JavaScript objects. There is also a new library that allows you to embed web content within your app, which makes sense given that it is based on web technology.

Read more: I Programmer

goosh.org

Goosh goosh.org 0.5.0-beta #1 Mon, 23 Jun 08 12:32:53 UTC Google/Ajax

Welcome to goosh.org - the unofficial google shell.

This google-interface behaves similar to a unix-shell.

You type commands and the results are shown on this page.

goosh is powered by google.

goosh is written by Stefan Grothkopp <grothkopp@gmail.com>

it is NOT an official google product!

goosh is open source under the Artistic License/GPL.

Read more: www.goosh.org/

The Subversion Mistake

Posted by

jasper22

at

11:36

|

At my workplace, when I first got here, we were doing the waterfall method of development. We would do 3 months worth of hard work, with thousands of commits and tons of features. Then we would hand things off to QA to test. Spend a few more weeks bug fixing. Then when things were 'certified' by QA, we would spend a whole weekend (and the next week) doing the release and bug fixing in production. Then the cycle would repeat again. Ugly.

Now, based on feedback I gave, we use an iterative approach to development. It is extremely flexible and has allowed us to increase the rate of releases to our customers as well as the stability of our production environment. We did about 9 iteration releases in 3 months, our customers got features more quickly and we have fewer mid-week critical bug fixing patches. Everyone from the developers all the way up to the marketing department loves that we have become more agile as a company.

In order to support this model of development, we had to change the way we use subversion. Before, we would have a branch for the version in production and do all of our main work on trunk. We would then copy any bug fixes to that branch and do a release from there. I reversed and expanded that model.

- Trunk is now what is in production.

- Main development and bug fixes happen on numbered iteration branches (iteration-001, iteration-002, etc.)

- Features happen on branches named after the feature. (foobranch, barbranch, etc)

- Each iteration branch is based off of the previous iteration. If a checkin happens in iteration-001, it is merged into iteration-002. (ex: cd iteration-002; svn merge ^/branches/iteration-001 .)

- No commits happen directly to trunk, only merges. For midweek releases, we cherry pick individual commits from an iteration branch to trunk. (ex: cd trunk; svn merge -c23423 ^/branches/iteration-001 .)

- Feature branches are based off of an iteration.

Unfortunately, we are quickly learning that subversion does not support this model of development at all and I've had to become a subversion merge expert.

Read more: Kick me in the nuts

Microsoft(R) Silverlight™ 4 SDK - April 2011 Update

Posted by

jasper22

at

11:35

|

The Microsoft® Silverlight™ 4 SDK provides libraries and tools for developing Silverlight applications.

Read more: MS Download

Spin Lock in C++

Posted by

jasper22

at

11:34

|

Introduction

If we use common synchronization primitives like mutexes and critical sections, the following sequence of events occurs between two threads (T1 and T2) that are trying to acquire a lock:

T1 acquires lock L and executes.

T2 tries to acquire lock L, but since it is already held, T2 blocks, incurring a context switch.

T1 releases lock L. This signals T2, and at a lower level this involves some sort of kernel transition.

T2 wakes up and acquires lock L, incurring another context switch.

So there are always at least two context switches when primitive synchronization objects are used. A spin lock can do away with the expensive context switches and kernel transitions.

Most modern hardware supports atomic instructions, and one of them is called ‘compare and swap’ (CAS). On Win32 systems they are exposed as interlocked operations. Using these interlocked functions, an application can compare and store a value in a single atomic, uninterruptible operation. With interlocked functions, it is possible to achieve lock freedom and save the expensive context switches and kernel transitions that can be a bottleneck in a low-latency application. On a multiprocessor machine a spin lock (a kind of busy waiting) can avoid both of the above issues and save thousands of CPU cycles otherwise spent on context switches. However, the downside of spin locks is that they become wasteful if held for a long period of time: the waiting threads burn CPU cycles while they are prevented from acquiring the lock and progressing. The implementation shown in this article is an effort to develop a general-purpose spin lock.
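As a portable illustration of the compare-and-swap idea described above, here is a minimal sketch that uses C++11 `std::atomic` instead of the Win32 interlocked functions. It is not the article's implementation: the class name `SpinLock`, the helper `run_spinlock_demo` and the choice to call `std::this_thread::yield()` while waiting are all assumptions made for this example.

```cpp
#include <atomic>
#include <thread>

// Minimal spin lock sketch built on compare-and-swap (illustrative only).
class SpinLock {
    std::atomic<int> state_{0};  // 0 = free, 1 = held
public:
    void acquire() {
        int expected = 0;
        // Atomically swap 0 -> 1. On failure, 'expected' is overwritten with
        // the current value, so it is reset before the next attempt.
        while (!state_.compare_exchange_weak(expected, 1,
                                             std::memory_order_acquire,
                                             std::memory_order_relaxed)) {
            expected = 0;
            std::this_thread::yield();  // assumption: be polite while busy-waiting
        }
    }

    void release() {
        state_.store(0, std::memory_order_release);
    }
};

// Hypothetical helper: two threads increment a shared counter under the lock.
inline long run_spinlock_demo(int iterations) {
    SpinLock lock;
    long counter = 0;
    auto work = [&] {
        for (int i = 0; i < iterations; ++i) {
            lock.acquire();
            ++counter;  // protected by the spin lock
            lock.release();
        }
    };
    std::thread t1(work), t2(work);
    t1.join();
    t2.join();
    return counter;
}
```

Calling `run_spinlock_demo(10000)` should return 20000 if the lock provides mutual exclusion. The critical section here is deliberately tiny, which is the situation where spinning tends to pay off.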

Algorithm

Typical (or basic) spin lock acquire and release functions would look something like the code below.

#include <windows.h> // Win32 interlocked functions

class Lock

{

volatile LONG dest = 0; // 0 = free, non-zero = held

LONG exchange = 100;

LONG compare = 0;

public:

// acquire the lock

void acquire()

{

while (true)

{

if (InterlockedCompareExchange(&dest, exchange, compare) == 0)

{

// lock acquired

break;

}

}

}

// release the lock

void release()

{

// lock released

InterlockedExchange(&dest, 0);

}

};

Read more: Codeproject

MySQL Server’s built-in profiling support

Posted by

jasper22

at

11:34

|

MySQL’s SHOW PROFILES command and its profiling support is something that I can’t believe I hadn’t spotted before today.

It allows you to enable profiling for a session and then record performance information about the queries executed. It shows details of the different stages in the query execution (as usually displayed in the thread state output of SHOW PROCESSLIST) and how long each of these stages took.

I’ll demonstrate using an example. First, we need to enable profiling within our session. You should only do this in sessions that you want to profile, as there’s some overhead in recording the profiling information:

mysql> SET profiling=1;

Query OK, 0 rows affected (0.00 sec)

Now let’s run a couple of regular SELECT queries

mysql> SELECT COUNT(*) FROM myTable WHERE extra LIKE '%zkddj%';

+----------+

| COUNT(*) |

+----------+

| 0 |

+----------+

1 row in set (0.32 sec)

mysql> SELECT COUNT(id) FROM myTable;

+-----------+

| COUNT(id) |

+-----------+

| 513635 |

+-----------+

1 row in set (0.00 sec)

Followed up with some stuff that we know’s going to execute a bit slower:

mysql> CREATE TEMPORARY TABLE foo LIKE myTable;

Query OK, 0 rows affected (0.00 sec)

mysql> INSERT INTO foo SELECT * FROM myTable;

Query OK, 513635 rows affected (33.53 sec)

Records: 513635 Duplicates: 0 Warnings: 0

mysql> DROP TEMPORARY TABLE foo;

Query OK, 0 rows affected (0.06 sec)

Read more: James Cohen

NHibernate Code-First (NH Applications without writing any mapping classes)

Posted by

jasper22

at

11:32

|

NHibernate Code-First is an abstraction layer on top of the NHibernate core that lets you easily create data-centric applications without writing a single line of XML mapping.

NHibernate Code-First is based on .NET Framework 4.

Highlights

No XML mapping files

Just write POCO entity classes without writing a single line of XML mapping.

Mapping customization

You can customize the mapping by using the new fluent mapping method inside your context classes.

Internal session management for web and Windows applications

No need to manage sessions in your code; just write context classes and let the framework do it for you.

Advanced mapping

The fluent mapper lets you easily write advanced mappings.

Read more: Codeplex

Take advantage of Reference Paths in Visual Studio and debug locally referenced libraries

Posted by

jasper22

at

11:32

|

Are you using NHibernate or another open-source library in your project? I’m pretty sure that you are. Have you ever wondered what’s happening “under the covers” when you call Session.Get or perform a query? You probably have. The problem is that usually all the external assemblies are stored in one directory (libs, packages, reflibs etc.) with no symbol files or sources. In this post I would like to show you how you can benefit from the project’s ReferencePath property and debug the source code of your libraries any time you want.

Let’s assume that our projects are using NHibernate 3.1. We ran NuGet (http://nuget.codeplex.com/) to set up dependencies, and the NHibernate libraries are now in $(ProjectDir)\packages\NHibernate.3.1.0.4000\lib\Net35. In the project file (.csproj) we probably have the following section:

<Reference Include="NHibernate">

<HintPath>packages\NHibernate.3.1.0.4000\lib\Net35\NHibernate.dll</HintPath>

</Reference>

We are also using a code repository, and our .csproj file is checked in along with the whole packages directory. One day we noticed that one repository (DAO) function is performing really badly. While debugging, we found out that the problem lies in the Session.Get method. Without knowing what’s happening inside, we are not able to tell what causes this performance loss. But we also know that NHibernate is open source, so let’s get the source code, debug it and get a grasp of what the NHibernate gurus put in there. We download the source code (NHibernate-3.1.0.GA-src.zip), extract it to c:\NHibernate-src and run the build script (NHibernate uses a NAnt script; we want pdb files, hence project.config=debug, and I skipped the tests just to speed up the build process):

nant -t:net-3.5 -D:skip.tests=true -D:skip.manual=true -D:project.config=debug

You will probably need to download ILMerge and place it in c:\NHibernate-src\Tools\ILMerge. I also needed to remove c:\NHibernate-src\src\NHibernate.Test.build: somehow I was getting compilation errors and didn’t have time to check why (we won’t need NHibernate.Test.dll anyhow).

Read more: Low Level Design

WP7 - adding a ‘Fade to Black’ effect to a ListBox

Posted by

jasper22

at

11:30

|

I wanted to have a small and ‘nice’ application in which to experiment with things related to networking and graphic effects in WP7, so I took the ‘My WP7 Brand’ project from CodePlex and started to customize it. This is how ‘All About PrimordialCode’ was born.

Let me start by reminding you that I’m not a designer; like many of you, I’m a developer.

The first thing I want to show you is how I implemented a ‘fade to black’ effect for a ListBox. The requirements:

- Items that are scrolling out of the ListBox visible area have to fade away gently, not with an abrupt cut.

- The ListBox has to maintain its full and normal interactions as much as possible.

- It has to work with dark and light themes.

The straightforward way to obtain that result is to use an ‘OpacityMask’, as in the following code:

<ListBox Margin="0,0,-12,0">

<ListBox.OpacityMask>

<LinearGradientBrush StartPoint="0,0" EndPoint="0,1">

<GradientStop Offset="0" Color="Transparent" />

<GradientStop Offset="0.05" Color="Black" />

<GradientStop Offset="0.95" Color="Black" />

<GradientStop Offset="1" Color="Transparent" />

</LinearGradientBrush>

</ListBox.OpacityMask>

<ListBox.ItemTemplate>

<DataTemplate>

...your incredible item template goes here...

</DataTemplate>

</ListBox.ItemTemplate>

</ListBox>

Read more: Windows Phone 7

Uses and misuses of implicit typing

Posted by

jasper22

at

11:29

|

One of the most controversial features we've ever added was implicitly typed local variables, aka "var". Even now, years later, I still see articles debating the pros and cons of the feature. I'm often asked what my opinion is, so here you go.

Let's first establish what the purpose of code is in the first place. For this article, the purpose of code is to create value by solving a business problem.

Now, sure, that's not the purpose of all code. The purpose of the assembler I wrote for my CS 242 assignment all those years ago was not to solve any business problem; rather, its purpose was to teach me how assemblers work; it was pedagogic code. The purpose of the code I write to solve Project Euler problems is not to solve any business problem; it's for my own enjoyment. I'm sure there are people who write code just for the aesthetic experience, as an art form. There are lots of reasons to write code, but for the sake of this article I'm going to make the reasonable assumption that people who want to know whether they should use "var" or not are asking in their capacity as professional programmers working on complex business problems on large teams.

Note that by "business" problems I don't necessarily mean accounting problems; if analyzing the human genome in exchange for National Science Foundation grant money is your business, then writing software to recognize strings in a sequence is solving a business problem. If making a fun video game, giving it away for free and selling ads around it is your business, then making the aliens blow up convincingly is solving a business problem. And so on. I'm not putting any limits here on what sort of software solves business problems, or what the business model is.

Second, let's establish what decision we're talking about here. The decision we are talking about is whether it is better to write a local variable declaration as:

TheType theVariable = theInitializer;

or

var theVariable = theInitializer;

where "TheType" is the compile-time type of theInitializer. That is, I am interested in the question of whether to use "var" in scenarios where doing so does not introduce a semantic change. I am explicitly not interested in the question of whether

IFoo myFoo = new FooStruct();

is better or worse than

var myFoo = new FooStruct();

Read more: Fabulous Adventures In Coding

Application Virtualization for Developers

Posted by

jasper22

at

11:28

|

This article is in the Product Showcase section for our sponsors at The Code Project. These reviews are intended to provide you with information on products and services that we consider useful and of value to developers.

In March 2011, Virtualization Technologies released a new version of the developer library that makes it possible to use virtualization in applications.

The name of the product is BoxedApp SDK. By the way, the demo version is absolutely free, and you can download it even right now by clicking on this link.

Virtualization? Why do I need it?

I don't think I will be wrong if I say that the first things you thought of when you heard "virtualization" were virtual machines and products like VMware and VirtualBox. What is amazing is that virtualization can live right within your application.

Here are a couple of examples. Suppose a portable application must run without installation, but it uses the Flash ActiveX control. The problem seems insoluble: you could install the Flash ActiveX control, but a portable application writing to the registry would be odd. On top of that, you may simply lack the rights to write to the registry.

Another example: a third-party component is distributed only as a DLL, but you need to link it statically so that competitors cannot find out what your product is built upon. Yes, you could save the DLL to a temporary file and then load it manually, but in that case the file could be copied by someone else just as easily. And, of course, you may again lack the rights to save a DLL to disk.

Or you want to create your own packer that turns numerous executable files, DLLs and ActiveX controls into a single executable file. Yes, you can create a product of this kind with BoxedApp SDK.

These are just the kinds of tasks virtualization is needed for.

So, how does it work?

The fundamental idea is intercepting system calls. When an application attempts to open a file or a registry key, we hand it a pseudo-descriptor. There are actually plenty of functions involved: not merely opening files, but many others as well. BoxedApp SDK neatly intercepts them and creates a realistic illusion that the pseudo-files or, to be more precise, virtual files actually exist.

It turns out that one can create a "file", and an application will be able to handle it as if it were real. But since we are developers, here is a code sample:

HANDLE hFile =

BoxedAppSDK_CreateVirtualFile(

_T("1.txt"),

GENERIC_WRITE,

FILE_SHARE_READ,

NULL,

CREATE_NEW,

0,

NULL);

See, just one call, and the "file" is ready.

Now we can write some text to this virtual file and then open it in the notepad:

const char* szText = "This is a virtual file. Cool! You have just loaded the virtual file into notepad.exe!\r\nDon't forget to obtain a license ;)\r\nhttp://boxedapp.com/order.html";

DWORD temp;

WriteFile(hFile, szText, lstrlenA(szText), &temp, NULL);

CloseHandle(hFile);

// Inject BoxedApp engine to child processes

BoxedAppSDK_EnableOption(DEF_BOXEDAPPSDK_OPTION__EMBED_BOXEDAPP_IN_CHILD_PROCESSES, TRUE);

// Now notepad loads the virtual file

WinExec("notepad.exe 1.txt", SW_SHOW);

Get numerous other examples now.

Where are virtual files located? Can I store a virtual file in my storage?

By default, virtual files are located in memory, but sometimes it may be necessary to set a custom storage for such a file, for example when data is to be stored in a database or somewhere on the Internet. For such cases, a virtual file is created on top of an implementation of the IStream interface. You simply place the logic of the storage operations in the implementation of IStream, and the data will be placed there and not in memory.

An interesting example of the use of such files is playing encrypted media content. For instance, the source video file could be encrypted block by block and embedded in the application or placed in a file next to the main program. When it's time to play the video, the program creates an IStream-based virtual file. That IStream never decrypts the entire video file; instead, it feeds the data in small pieces (from the IStream::Read method), on demand.
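The decrypt-on-read idea can be sketched in portable C++ without any COM or BoxedApp calls. The class below is only a hypothetical stand-in for an IStream implementation: the names `DecryptingStream`, `xor_encrypt` and `read_all_in_chunks` are invented for this example, and the single-byte XOR "cipher" is a toy placeholder, not real cryptography. What it shows is that the data stays encrypted in storage and only the bytes requested by each `Read` call are decrypted.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-in for an IStream-like source: the stored bytes stay
// encrypted and are decrypted only as each Read() call is served.
class DecryptingStream {
    std::vector<std::uint8_t> encrypted_;
    std::uint8_t key_;     // toy XOR key, NOT real cryptography
    std::size_t pos_ = 0;  // current read position
public:
    DecryptingStream(std::vector<std::uint8_t> data, std::uint8_t key)
        : encrypted_(std::move(data)), key_(key) {}

    // Copies up to 'count' decrypted bytes into 'out'; returns bytes produced.
    std::size_t Read(std::uint8_t* out, std::size_t count) {
        const std::size_t n = std::min(count, encrypted_.size() - pos_);
        for (std::size_t i = 0; i < n; ++i)
            out[i] = static_cast<std::uint8_t>(encrypted_[pos_ + i] ^ key_);
        pos_ += n;
        return n;
    }
};

// Toy "encryption" used to prepare data for the demo.
inline std::vector<std::uint8_t> xor_encrypt(const std::string& plain,
                                             std::uint8_t key) {
    std::vector<std::uint8_t> out(plain.begin(), plain.end());
    for (auto& b : out) b = static_cast<std::uint8_t>(b ^ key);
    return out;
}

// Drains the stream in small chunks, the way a player would pull data on demand.
inline std::string read_all_in_chunks(DecryptingStream& s, std::size_t chunk) {
    std::string result;
    std::vector<std::uint8_t> buf(chunk);
    std::size_t n;
    while ((n = s.Read(buf.data(), buf.size())) > 0)
        result.append(reinterpret_cast<const char*>(buf.data()), n);
    return result;
}
```

In a real IStream implementation the same logic would live in `IStream::Read`, with a proper block cipher in place of the XOR and with seeking support, both of which are omitted here.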

How about ActiveX / COM?

ActiveX is still widely used; there are many legacy ActiveX controls. With BoxedApp, you can register an ActiveX control virtually when the program starts, and the program will be able to use it! The registration is really simple:

BoxedAppSDK_RegisterCOMLibraryInVirtualRegistryW(L"Flash9e.ocx");

Read more: Codeproject

Android Fundamentals: Downloading Data With Services

Posted by

jasper22

at

11:27

|

The tutorial content of the still-unnamed “TutList” application we’ve been building together is getting stale. The data has been the same for over a month now. It’s time to breathe some life into the application by providing it with a means to read fresh Mobiletuts tutorial data on the fly.

As it stands now, our application reads a list of tutorial titles and links from a database. This is, fundamentally, the correct way to design the app. However, we need to add a component that retrieves new content from the Mobiletuts website and stores new titles and links in the application database. This component will download the raw RSS feed from the site, parse it for the title and link, then store that data into the database. The database will be modified to disallow duplicate links. Finally, a refresh option will be placed within the list fragment so the user can manually force an update of the database.

As with other tutorials in this series, the pacing will be faster than some of our beginner tutorials; you may have to review some of the other Android tutorials on this site or even in the Android SDK reference if you are unfamiliar with any of the basic Android concepts and classes discussed in this tutorial. The final sample code that accompanies this tutorial is available for download as open-source from the Google code hosting.

Step 0: Getting Started

This tutorial assumes you will start where our last tutorial, Android Fundamentals: Properly Loading Data, left off. You can download that code and work from there or you can download the code for this tutorial and follow along. Either way, get ready by downloading one or the other project and importing it into Eclipse.

Step 1: Creating the Service Class

One way to handle background processing, such as downloading and parsing the Mobiletuts tutorial feed, is to implement an Android service for this purpose. The service allows you to componentize the downloading task from any particular activity or fragment. Later on, this will allow it to easily perform the operation without the activity launching at all.

So let’s create the service. Begin by creating a new package, just like the data package. We named ours com.mamlambo.tutorial.tutlist.service. Within this package, add a new class called TutListDownloaderService and have it extend the Service class (android.app.Service). Since a service does not actually run in a new thread or process from the host (whatever other process or task starts it, in this case, an Activity), we need to also create a class to ensure that the background work is done asynchronously. For this purpose, we could use a Thread/Handler design or simply use the built-in AsyncTask class provided with the Android SDK to simplify this task.

Read more: MobileTuts

sec-wall: Open Source Security Proxy

Posted by

jasper22

at

11:25

|

sec-wall, a recently released security proxy, is a one-stop place for everything related to securing HTTP/HTTPS traffic. It is designed as a pragmatic solution to the question of securing servers using SSL/TLS certificates, WS-Security, HTTP Basic/Digest Auth, custom HTTP headers and XPath expressions, with an option of modifying HTTP headers and URLs on the fly.

This article is introductory material that will guide you through the process of installing the software on Ubuntu and preparing the first security configuration: using HTTP Basic Auth with and without tunneling it through SSL/TLS.

The core of sec-wall is a high-performance HTTP(S) server built on top of the gevent framework, which in turn is a Pythonic wrapper around the libevent notification library.

Most of the project's dependencies can be fetched using apt-get, and that's what will be used below. Note the installation of pip, an installer for Python packages. It will come in handy because Spring Python, another of the project's dependencies, isn't available in Ubuntu repositories yet (although there's an ITP for that). Installing zdaemon with pip will make sure the command is consistently available under the same name regardless of the Python version you're using. pip will also be used for installing sec-wall itself, as the software is just too new for there to be a DEB in the repositories.

$ sudo apt-get install python-pip python-pesto python-gevent python-yaml python-lxml

$ sudo pip install zdaemon

$ sudo pip install springpython

$ sudo pip install sec-wall

And that's it, we can proceed to use sec-wall now.

Firstly, an instance of the proxy needs to be initialized in an empty directory. That sets up the place for future internal log files, places a dot-prefixed 'hidden' file to mark the directory as belonging to a sec-wall instance and - most importantly - creates a skeleton config file, one that we need to customize.

$ mkdir ~/sec-wall-tutorial

$ sec-wall --init ~/sec-wall-tutorial

Read more: LinuxSecurity.com

HIDEasy Lib

Posted by

jasper22

at

11:24

|

Project Description

With HIDEasy Lib, you can easily access your Human Interface Devices (HID). It works with Windows XP, Vista and 7 (32 or 64 bits).

You can connect and handle your gamepad, joysticks, boxes of buttons/LEDs...

It's developed in VB (.NET 3.5)

Read more: Codeplex

Silverlight 5 – Elevated Trust In-Browser

Posted by

jasper22

at

11:23

|

In Silverlight 5 it is possible to enable applications to run with elevated permissions in-browser.

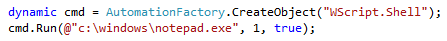

The code snippet below will open Notepad.exe using the AutomationFactory in Silverlight. Using the AutomationFactory requires elevated permissions.

To run the snippet in Silverlight 4 the application must run with elevated permissions and in out-of-browser. In Silverlight 5 it is possible to run the snippet in-browser and with elevated permissions.

There are some requirements that need to be fulfilled to be able to run with elevated permissions in-browser. The XAP file needs to be signed with a certificate present in the Trusted Publishers Certificate store. Furthermore it is necessary to add a registry setting:

HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Silverlight\

Add the following DWORD value "AllowElevatedTrustAppsInBrowser" with 0x00000001.

Read more: XAMLGEEK

WCF 4.0 Hebrew guide for beginners

Posted by

jasper22

at

11:22

|

Shlomo Goldberg has released a great WCF 4.0 tutorial series on his blog. If you can read Hebrew, you might find it very useful:

Part 1 – Service and Contracts

Part 2 – Hosting Services

Part 3 – Consuming Services

Part 4 - Configuration

Part 5 – Configuration (Part 2)

Part 6 – Hosting Scenarios

Part 7 – Consuming service using JavaScript

Part 8 – Web style services and using GET and POST calls

Part 9 - Web style services and using GET and POST calls (Part 2)

Part 10 – RSS feed using WCF

Part 11 - Messaging Patterns

Part 12 – Duplex Pattern

Part 13 – IIS Hosting

Part 14 – Instance context and concurrency mode

Read more: Gadi Berqowitz's Blog

LLBLGen Pro QuerySpec: the basics

Posted by

jasper22

at

11:20

|

Last time I introduced LLBLGen Pro QuerySpec, a new fluent API for specifying queries for LLBLGen Pro. As promised I'll write a couple of blogposts about certain aspects of the new API and how it works. Today I'll kick off with the basics.

Two types of queries: EntityQuery<T> and DynamicQuery

There are two types of queries in QuerySpec: entity queries (specified with objects of type EntityQuery<T>, where T is the type of the entity to return) and dynamic queries, which are queries with a custom projection (specified with objects of type DynamicQuery or its typed variant DynamicQuery<T>). The difference between them is mainly visible in the number of actions you can specify on the query. For example, an entity query doesn't have a way to specify a group by, simply because fetching entities is about fetching rows from tables/views, not rows from a grouped set of data. Similarly, DynamicQuery doesn't have a way to specify an entity type filter, simply because it's about fetching a custom projection, not about fetching entities. This difference guides the user of the API with writing the final query: the set of actions to specify, e.g. Where, From, OrderBy etc., is within the scope of what the query will result in.

A custom projection is any projection (the list of elements in the 'SELECT' statement returned by a query) which isn't representing a known, mapped entity. This distinction between entity queries and dynamic queries might sound odd at first, but it will be straightforward once you've worked with a couple of queries.

QuerySpec queries are specifications: they're not executed when enumerated; in fact, you can't enumerate them. To obtain the result set, you have to execute the queries. I'll dig deeper into how to execute QuerySpec queries in a follow-up post.

The starting point: the QueryFactory

To get started with a query, the user has to decide what kind of objects the query has to produce: entity objects or objects which contain the result of a custom projection. The question is rather easy if you formulate it like this: "Do I want to obtain one (or more) instances of a known entity type, or something else?". If the answer to that is: "One (or more) instances of a known entity type" the query you'll need is an EntityQuery<T>, in all other cases you'll need a DynamicQuery.

If you change your mind halfway through writing your query, no worries: you can create a DynamicQuery from an EntityQuery<T> with the .Select(projection) method and can define a DynamicQuery to return entity class instances, so there's always a way to formulate what you want.

To create a query, we'll use a factory. This factory is a small generated class called QueryFactory. It's the starting point for all your queries in QuerySpec: to create a query, you need an instance of the QueryFactory:

var qf = new QueryFactory();

The QueryFactory instance offers a couple of helper methods and a property for each known entity type, which returns an EntityQuery<entitytype> instance, as well as a method to create a DynamicQuery instance.

We'll first focus on entity queries.

Read more: Frans Bouma's blog

PostgreSQL vs Oracle Differences #3 – System Resources

Posted by

jasper22

at

11:19

|

PostgreSQL has much lower system resource requirements than Oracle. I currently look after 200+ PostgreSQL databases running on various sized servers, including tiny virtual machines. Some servers have as many as 40 databases on them. With Oracle, I don’t think I ever had more than around 10 on a single server.

Oracle’s hardware requirements (11gR2):

- 1 GB disk space in temp directory

- 4.5 GB disk space for binaries (Enterprise Edition)

- 4 GB RAM (for 64-bit install)

- About 512 MB minimum per database

PostgreSQL’s hardware requirements (9.0):

- 25 MB disk space for binaries

- I couldn’t even find information on the minimum RAM requirements (go documentation!) but it will pretty much run on anything.

If you are considering a change of platform from PostgreSQL to Oracle, this is a major consideration. With it being so easy to create new PostgreSQL databases, they tend to breed themselves over time. The hardware you have now probably won’t cut it if moving to Oracle.

Read more: Oracle DBA in a PostgreSQL world

Description of the course "Lessons on development of 64-bit C/C++ applications"

Posted by

jasper22

at

11:19

|

The course is devoted to creating 64-bit applications in C/C++ and is intended for Windows developers who use the Visual Studio 2005/2008/2010 environment. Developers working with other 64-bit operating systems will also find much of interest. The course covers all the steps of creating a new, safe 64-bit application or migrating existing 32-bit code to a 64-bit system.

The course is composed of 28 lessons covering an introduction to 64-bit systems, issues of building 64-bit applications, methods of finding errors specific to 64-bit code, and code optimization. It also considers questions such as estimating the cost of moving to 64-bit systems and whether the move is justified.

The authors of the course:

candidate of physico-mathematical sciences Andrey Nikolaevich Karpov;

candidate of technical sciences Evgeniy Alexandrovich Ryzhkov.

The authors are involved in maintaining the quality of 64-bit applications and participate in development of PVS-Studio static code analyzer for verifying the code of resource-intensive applications.

Read more: PVS-Studio

uberSVN

Posted by

jasper22

at

11:13

|

Read more: uberSVN

Loving MVVM and asynchronous operations

Posted by

jasper22

at

11:06

|

In one project I’m using a modified version of MVVM in WPF originally made by my dear friend Mauro (check out his project Radical, it is really cool). I use a custom DelegateCommand to handle communication between the View and the View Model. Here is a sample snippet showing how I initialize a command in the View Model.

SaveCurrent = DelegateCommand.Create()
    .OnCanExecute(o => this.SelectedLinkResult != null)
    .TriggerUsing(PropertyChangedObserver.Monitor(this)
        .HandleChangesOf(vm => vm.SelectedLinkResult))
    .OnExecute(ExecuteSaveCurrent);

This works but I need to solve a couple of problems.

The first one is that I never remember the syntax. I find the name TriggerUsing() somewhat confusing (surely my fault), and I can hardly remember PropertyChangedObserver, the name of the class used to monitor changes of a property. As a result, every time I create a new DelegateCommand I have to search another VM to copy the initialization from. Since monitoring a property change to re-evaluate whether a command can be executed is probably one of the most common pieces of logic, I wish for a better syntax that doesn't leave me puzzled about which method to call.
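To make the underlying idea concrete, here is a minimal sketch of a command that re-evaluates CanExecute whenever one watched property changes. It is not the Radical DelegateCommand API: ObservingCommand and LinkViewModel are hypothetical names invented for this illustration, and only the standard ICommand and INotifyPropertyChanged interfaces are assumed.

```csharp
using System;
using System.ComponentModel;
using System.Windows.Input;

// Hypothetical command type: raises CanExecuteChanged when the watched property changes.
public sealed class ObservingCommand : ICommand
{
    private readonly Func<object, bool> canExecute;
    private readonly Action<object> execute;

    public ObservingCommand(INotifyPropertyChanged source, string propertyName,
                            Func<object, bool> canExecute, Action<object> execute)
    {
        this.canExecute = canExecute;
        this.execute = execute;
        // Forward changes of the watched property as CanExecuteChanged notifications.
        source.PropertyChanged += (s, e) =>
        {
            if (e.PropertyName == propertyName)
                CanExecuteChanged?.Invoke(this, EventArgs.Empty);
        };
    }

    public event EventHandler CanExecuteChanged;
    public bool CanExecute(object parameter) => canExecute(parameter);
    public void Execute(object parameter) => execute(parameter);
}

// Hypothetical view model, mirroring the SelectedLinkResult property from the snippet above.
public sealed class LinkViewModel : INotifyPropertyChanged
{
    private object selectedLinkResult;

    public event PropertyChangedEventHandler PropertyChanged;
    public ICommand SaveCurrent { get; }

    public LinkViewModel()
    {
        SaveCurrent = new ObservingCommand(this, nameof(SelectedLinkResult),
            o => SelectedLinkResult != null,
            o => { /* saving would happen here */ });
    }

    public object SelectedLinkResult
    {
        get { return selectedLinkResult; }
        set
        {
            selectedLinkResult = value;
            PropertyChanged?.Invoke(this,
                new PropertyChangedEventArgs(nameof(SelectedLinkResult)));
        }
    }
}
```

With this shape, the property to watch is named once in the constructor call, so there is no separate observer class to remember. The trade-off is that the sketch watches a single property by name and never unsubscribes, which a real implementation would need to handle.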

Read more: Alkampfer's Place

Configuring the Silverlight plugin

Posted by

jasper22

at

11:05

|

Silverlight applications run within the context of a web page. On this page, an HTML OBJECT tag is embedded which contains a reference to the Silverlight XAP file, normally within the ClientBin directory. Using parameters (params) in the OBJECT tag, we have a communication channel that allows sending values from the HTML to the Silverlight application: in most cases, we can use known parameter names both to set up the Silverlight application the way we want and to pass parameter values to Silverlight.

In this tip, we’ll look at the options we have to configure the Silverlight plugin from the HTML OBJECT tag. The code for this sample can be downloaded here.

The defaults

When creating a new Silverlight application in Visual Studio 2010, both an *.aspx and a *.html file are created. In “early” versions of Silverlight, the *.aspx page contained the asp:Silverlight control, a server-side control used to set up a Silverlight application. This control isn’t used anymore, so both files use the above-mentioned OBJECT tag. The default generated code is shown here:

<form id="form1" runat="server" style="height:100%">

<div id="silverlightControlHost">

type="application/x-silverlight-2" width="100%" height="100%">

style="text-decoration:none">

<iframe id="_sl_historyFrame"

style="visibility:hidden;height:0px;width:0px;border:0px">

</iframe>

</div>

</form>

Let’s now look at how we can use this to configure how the plugin will show the Silverlight application.

Read more: Silverlight show

WCF Data Services Processing Pipeline

Posted by

jasper22

at

11:03

|

First, I must confess: even though I like OData and WCF Data Services, in the last couple of months I didn’t have the chance to work with them. This is why, when .NET 4 shipped, I didn’t notice a new and interesting extension point in the framework: the processing pipeline. At the recent MIX11, I got a little introduction to that extension point in Mike Flasko’s session. In this post I’ll explain what the WCF Data Services server-side processing pipeline is.

WCF Data Services Processing Pipeline

In the past I wrote about the interception mechanism that is built into WCF Data Services. One of the advantages of that mechanism is the ability to wire up business logic such as custom validations, access policy logic or whatever else you'd like to insert into the query/change set pipeline. The problem starts when you need generic behavior across all the entity set interceptions. In the first release of WCF Data Services there were no extension points to help implement such generic behavior. But now, in .NET 4, you can use the processing pipeline to do that. The new DataServiceProcessingPipeline class is exposed as a property of the DataService class (which every data service inherits from). It exposes four events that you can hook into:

ProcessingRequest – The event occurs before a request to the data service is processed.

ProcessingChangeset – The event occurs before a change set request to the data service is processed.

ProcessedRequest – The event occurs after a request to the data service has been processed.

ProcessedChangeset – The event occurs after a change set request to the data service has been processed.

These events enable the developer to run code before and after the WCF Data Services pipeline processes requests and change sets.

Using WCF Data Services Processing Pipeline

Here is a simple example of how to use the processing pipeline events to write to the output window that the events were called:

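using System;
using System.Data.Services;
using System.Diagnostics;

public class SchoolDataService : DataService<SchoolEntities>
{
    public SchoolDataService()
    {
        ProcessingPipeline.ProcessedChangeset += new EventHandler<EventArgs>(ProcessingPipeline_ProcessedChangeset);
        ProcessingPipeline.ProcessedRequest += new EventHandler<DataServiceProcessingPipelineEventArgs>(ProcessingPipeline_ProcessedRequest);
        ProcessingPipeline.ProcessingChangeset += new EventHandler<EventArgs>(ProcessingPipeline_ProcessingChangeset);
        ProcessingPipeline.ProcessingRequest += new EventHandler<DataServiceProcessingPipelineEventArgs>(ProcessingPipeline_ProcessingRequest);
    }

    // The handler bodies are cut off in this excerpt; these are assumed minimal versions that write to the output window.
    void ProcessingPipeline_ProcessedChangeset(object sender, EventArgs e) { Debug.WriteLine("ProcessedChangeset was called"); }
    void ProcessingPipeline_ProcessedRequest(object sender, DataServiceProcessingPipelineEventArgs e) { Debug.WriteLine("ProcessedRequest was called"); }
    void ProcessingPipeline_ProcessingChangeset(object sender, EventArgs e) { Debug.WriteLine("ProcessingChangeset was called"); }
    void ProcessingPipeline_ProcessingRequest(object sender, DataServiceProcessingPipelineEventArgs e) { Debug.WriteLine("ProcessingRequest was called"); }
}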

Read more: Gil Fink on .Net

Static Class in C#

Posted by

jasper22

at

11:02

|

The C# programming language allows us to define a static class. We define a class as static when there is no need to instantiate it and we want to ensure that no one will try to do so. The following video clip (in Hebrew) explains how to define a static class.
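As a short illustration of the idea, here is a minimal sketch of a static class. TemperatureConverter is a hypothetical example type and is not taken from the video.

```csharp
using System;

// Hypothetical example type: a static class may contain only static members
// and cannot be instantiated or inherited from.
public static class TemperatureConverter
{
    // Converts degrees Celsius to degrees Fahrenheit.
    public static double CelsiusToFahrenheit(double celsius)
    {
        return celsius * 9.0 / 5.0 + 32.0;
    }
}
```

Members are called through the type name, for example TemperatureConverter.CelsiusToFahrenheit(100), while an attempt such as new TemperatureConverter() is rejected by the compiler.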

Read more: Life Michael

Currency Exchange Rate from Bank of Israel by Code

Posted by

jasper22

at

11:00

|

Among the services provided by the Bank of Israel website is a service for representative exchange rates.

Using the HTTP protocol, you can retrieve the representative exchange rate by currency and by date.

Here is an example of a function that takes a date and a currency code as input and returns the representative exchange rate:

private double BankOfIsraelExchangeRate(DateTime date, string currency)
{
    double ExchangeRate = 0;
    string XmlUrl =
        "rdate=" + date.Year + date.Month.ToString("00") +
        date.Day.ToString("00") + @"&curr=" + currency;
    DataSet dtExchangeRate = new DataSet("BankOfIsraelExchangeRate");
    dtExchangeRate.ReadXml(XmlUrl);
    if (dtExchangeRate.Tables.Count > 1)
        ExchangeRate = Convert.ToDouble(dtExchangeRate.Tables[1].Rows[0][4]);
    // No exchange rate published for this date, then loop 6 days back
    else if (dtExchangeRate.Tables.Count > 0)
    {
        for (int i = 1; i <= 6; i++)
        {
            DateTime CheckDate = date.AddDays((-1) * i);
            string XmlLoopUrl =
                "currency.php?rdate=" +
                CheckDate.Year + CheckDate.Month.ToString("00") +
                CheckDate.Day.ToString("00") +
                @"&curr=" + currency;
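The two ideas the function relies on, formatting the date as yyyyMMdd and falling back to earlier days when no rate was published, can be sketched in isolation. This is an illustration under assumptions, not the original author's code: ExchangeRateLookup, ToRateDate, FindLatestRate and the fetchRate callback are hypothetical names, and the actual HTTP call is left to the caller.

```csharp
using System;
using System.Globalization;

public static class ExchangeRateLookup
{
    // Formats a date as yyyyMMdd, the same shape the rdate value is built with above.
    public static string ToRateDate(DateTime date)
    {
        return date.ToString("yyyyMMdd", CultureInfo.InvariantCulture);
    }

    // Tries the requested date first, then up to maxDaysBack earlier days.
    // fetchRate is a hypothetical callback taking (rdate, currency) and
    // returning null when no rate was published for that day.
    public static double? FindLatestRate(DateTime date, string currency,
        Func<string, string, double?> fetchRate, int maxDaysBack = 6)
    {
        for (int i = 0; i <= maxDaysBack; i++)
        {
            double? rate = fetchRate(ToRateDate(date.AddDays(-i)), currency);
            if (rate.HasValue)
                return rate;
        }
        return null; // nothing published within the look-back window
    }
}
```

Passing the lookup in as a callback keeps the fallback logic testable without network access. Returning a nullable value also distinguishes "no rate found" from a rate of 0, which the original's default of 0 cannot do.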

Read more: Dudi Nissan's Blog
