Boffins 'crack' HTTPS encryption in Lucky Thirteen attack

The security of online transactions is again in the spotlight as a pair of UK cryptographers take aim at TLS.

TLS, or Transport Layer Security, is the successor to SSL, or Secure Sockets Layer.

It's the system that puts the S into HTTPS (that's the padlock you see on secure websites), and provides the security for many other protocols, too.

Like 2011's infamous BEAST attack, the new attack has a groovy name: Lucky Thirteen.

Read more: Naked Security

UPnP flaws turn millions of firewalls into doorstops

Posted by jasper22 at 13:04

Last week security researcher HD Moore unveiled his latest paper "Unplug. Don't Play," which looked into vulnerabilities in popular Universal Plug and Play (UPnP) implementations.

What is UPnP? Paul Ducklin explained the principles and the reason behind it in his recent article about insecurity in video cameras, but the simple version is this: in my opinion, UPnP is one of the worst ideas ever.

Let's put it this way: UPnP is a protocol designed to automatically configure networking equipment without user intervention.

Sounds good, right? Until you think about it. UPnP allows things like Xboxes to tell your firewall to punch a hole through so you can play games.

UPnP also allows malware to punch holes in your firewall, making access for criminals far easier.

Generally speaking it is a bad idea to implement something that can disable security features without authentication or the knowledge of the person controlling the device.

So we know the dangers of rogue devices/software on the inside exploiting UPnP. Now imagine if UPnP managed to listen on the outside.

We don't have to imagine, as Moore has done the work for us. He discovered over 81 million UPnP devices on the internet, 17 million of which appeared to be remotely configurable.

As if that isn't bad enough, Moore also discovered ten new vulnerabilities in the two most popular UPnP implementations.

His scans show over 23 million devices vulnerable to a remote code execution flaw.

Vulnerable products include webcams, printers, security cameras, media servers, smart TVs and routers.

Read more: Naked Security

Packets of Death

Posted by jasper22 at 12:03

Packets of death. I started calling them that because that’s exactly what they are.

Star2Star has a hardware OEM that has built the last two versions of our on-premise customer appliance. I’ll get more into this appliance and the magic it provides in another post. For now let’s focus on these killer packets.

...

...

Wow. Things just got weird.

The weirdness continued and I finally got to the point where I had to roll my sleeves up. I was lucky enough to find a very patient and helpful reseller in the field to stay on the phone with me for three hours while I collected data. This customer location, for some reason or another, could predictably bring down the ethernet controller with voice traffic on their network.

Let me elaborate on that for a second. When I say “bring down” an ethernet controller I mean BRING DOWN an ethernet controller. The system and ethernet interfaces would appear fine and then after a random amount of traffic the interface would report a hardware error (lost communication with PHY) and lose link. Literally the link lights on the switch and interface would go out. It was dead.

Read more: Not Just AstLinux Stuff

Google Now Boasts World's No. 2 and No. 3 Social Networks

A new report released Monday revealed that Google+, less than a year and a half after its public debut, is now the No. 2 social network in the world with 343 million active users. Even better for Google, YouTube, which until recently had not been tracked as a social network, is now the No. 3 social network in the world with about 300 million active users. Google Plus and YouTube are being used by 25 percent and 21 percent of the global Internet populace, respectively.

Read more: Slashdot

UEFI Secure Boot Pre-Bootloader Rewritten To Boot All Linux Versions

Posted by jasper22 at 15:56

The Linux Foundation's UEFI secure boot pre-bootloader is still in the works, and has been modified substantially so that it allows any Linux version to boot through UEFI secure boot. The reason for modifying the pre-bootloader was that the current version of the loader wouldn't work with Gummiboot, which was designed to boot kernels using BootServices->LoadImage(). Further, the original pre-bootloader had been written using 'PE/Coff link loading to defeat the secure boot checks.' As it stands, anything run by the original pre-bootloader must also be link-loaded to defeat secure boot, and Gummiboot, which is not a link-loader, didn't work in this scenario. This is the reason a re-write of the pre-bootloader was required and now it supports booting of all versions of Linux.

Read more: Slashdot

PaperTab Brings (Interactive) Paper Back to the (Physical) Desktop

Posted by jasper22 at 14:15

New technology from Plastic Logic, Intel and researchers from Queen’s University in Ontario is placing a high-tech spin on old-school paper, and in the process they’re giving a whole new meaning to juggling windows on your desktop. PaperTab, “a flexible paper computer,” fills your desk — your actual, physical desk — with ten or more sheets of interactive 10.7-inch plastic displays that purportedly look and feel just like paper.

Eschewing traditional controls, PaperTab’s interface revolves largely around tapping sheets against one another to open apps or functions, while you can manipulate open apps by bending, folding, and dog-earing each sheet.

The PaperTab system is powered by an Intel Core i5 processor and identifies the location of each display using an electromagnetic tracker. The information on each display changes depending on how close it is to the user, with in-hand PaperTabs acting as fully open windows complete with images and text, while far-away sheets change to show the icon of their open app. If you pick a far-away sheet back up, it reverts to full window mode, with all information returning to the screen. The video below shows off the technology in action.

Read more: Laptop

Secure file exchange with .NET Crypto API

Posted by jasper22 at 13:13

Introduction

I have recently taken an interest in online storage services like Skydrive, Dropbox or Box, just to mention a few. I've worked on secure protocols and cryptography many times in my engineering career, and I decided to find a solution to a simple requirement: exchange documents with other people confidentially using those online storage services.

The requirements

Online storage services allow you to store documents online and eventually share them with other users. Some of those storage services encrypt the data on their servers, but most free ones like Skydrive simply store data without any encryption. Even with services that encrypt the data locally, when data are transferred on the internet they are no longer encrypted. So far only FileLocker seems to provide encryption during data transfer.

In order to create a secure exchange when sharing documents you need to have the following features:

- Data need to be encrypted where they are stored but also while being transferred

- The owner of the data must be able to select the recipients he wishes to share the data with

- The recipient should be able to verify the integrity of the data

Those are standard features you expect when securely exchanging data, so I added some other requirements to fit the constraint of an exchange of the data on the internet.

- The overhead of the exchange information must be small

- The exchange protocol must be robust
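The first three requirements map onto a familiar hybrid scheme: encrypt the document with a one-off AES key, encrypt that key with each recipient's RSA public key, and sign the original data with the owner's RSA key. The sketch below is only an illustration built on the framework's own `Aes` and `RSA` classes; `SealedDocument`, `HybridExchangeSketch`, `Seal` and `Open` are hypothetical names, not the article's types, and the choice of SHA-256 for the signature is an assumption.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

// Hypothetical container; the article's real header format is not shown in the excerpt.
public sealed class SealedDocument
{
    public byte[] CipherText;   // document encrypted with a one-off AES key
    public byte[] Iv;           // AES initialization vector (not secret)
    public byte[] WrappedKey;   // AES key encrypted with the recipient's RSA public key
    public byte[] Signature;    // owner's RSA signature over the original data
}

public static class HybridExchangeSketch
{
    // Encrypts for one recipient and signs as the owner.
    public static SealedDocument Seal(byte[] document, RSA recipientPublic, RSA ownerPrivate)
    {
        using (Aes aes = Aes.Create())   // a random key and IV are generated on first use
        using (ICryptoTransform encryptor = aes.CreateEncryptor())
        {
            return new SealedDocument
            {
                CipherText = encryptor.TransformFinalBlock(document, 0, document.Length),
                Iv = aes.IV,
                WrappedKey = recipientPublic.Encrypt(aes.Key, RSAEncryptionPadding.OaepSHA1),
                Signature = ownerPrivate.SignData(
                    document, HashAlgorithmName.SHA256, RSASignaturePadding.Pkcs1)
            };
        }
    }

    // Recovers the AES key, decrypts, then checks the owner's signature.
    public static byte[] Open(SealedDocument sealedDoc, RSA recipientPrivate, RSA ownerPublic)
    {
        using (Aes aes = Aes.Create())
        {
            aes.Key = recipientPrivate.Decrypt(sealedDoc.WrappedKey, RSAEncryptionPadding.OaepSHA1);
            aes.IV = sealedDoc.Iv;
            using (ICryptoTransform decryptor = aes.CreateDecryptor())
            {
                byte[] document = decryptor.TransformFinalBlock(
                    sealedDoc.CipherText, 0, sealedDoc.CipherText.Length);
                if (!ownerPublic.VerifyData(document, sealedDoc.Signature,
                        HashAlgorithmName.SHA256, RSASignaturePadding.Pkcs1))
                {
                    throw new CryptographicException("Signature check failed.");
                }
                return document;
            }
        }
    }
}
```

Supporting several recipients would mean storing one wrapped copy of the AES key per recipient, which keeps the overhead small because the document itself is encrypted only once.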

...

...

public void TestFileExchange()
{
    // Load the file to encrypt
    byte[] imgData = File.ReadAllBytes(IMG_FILE_NAME);

    AESEncryptor aesEncryptor = new AESEncryptor(PASSWORD);
    RSACryptoServiceProvider rsaProviderOfRecipient = new RSACryptoServiceProvider();
    RSAOAEPEncryptor rsaDigestEncrypt = new RSAOAEPEncryptor(rsaProviderOfRecipient);
    RSACryptoServiceProvider rsaProviderOfOwner = new RSACryptoServiceProvider();
    RSASHA1Signature rsaDigestSigned = new RSASHA1Signature(rsaProviderOfOwner);

    // Encrypt the file data, the key and sign the original file data
    EncryptedFile encryptFile = new EncryptedFile(imgData,
        new FileDescription(IMG_FILE_NAME, MIME_JPG, APP_SLIDESHOW, ALGO_AES),
        aesEncryptor,
        new Recipient[] { new Recipient(USER_ID_DEST1, rsaDigestEncrypt) },
        new Owner(USER_ID_SRCE, rsaDigestSigned));

    // Build an EncryptedFile instance from the encrypted content with header
    EncryptedFile encryptFileOut = new EncryptedFile(encryptFile.EncryptedContent);
    ExchangeDataHeader encryptedHeader = encryptFileOut.EncryptedHeader;

    // Process the encrypted DigestData to extract the AES key

Read more: Codeproject

Task-based Asynchronous Pattern - WaitAsync

Posted by jasper22 at 13:10

Recently I was asked about a specific scenario when some code was being converted into using TAP and this was code that already used tasks. Since the existing code used the "IsFaulted" property a lot I came up with this little extension method making it easy to see if a task failed or not using the await keyword but without adding a lot of exception handling.

public static Task<bool> WaitAsync(this Task task)
{
    // Completes with true on success, false if the task faulted or was canceled
    var tcs = new TaskCompletionSource<bool>();
    task.ContinueWith(
        t => tcs.TrySetResult(!(t.IsFaulted || t.IsCanceled)));
    return tcs.Task;
}

or

public static Task<bool> WaitAsync(this Task task)
{
    // Same result, using the continuation itself as the returned task
    return task.ContinueWith(
        t => !(t.IsFaulted || t.IsCanceled),
        TaskContinuationOptions.ExecuteSynchronously);
}
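For illustration, here is how such an extension might be consumed with await. This is a sketch rather than code from the original post: `TaskWaitExtensions`, `WaitAsyncDemo` and `DescribeAsync` are hypothetical names, and the extension body simply repeats the second variant so the example compiles on its own.

```csharp
using System;
using System.Threading.Tasks;

public static class TaskWaitExtensions
{
    // Repeats the second variant from the post so this example is self-contained.
    public static Task<bool> WaitAsync(this Task task)
    {
        return task.ContinueWith(
            t => !(t.IsFaulted || t.IsCanceled),
            TaskContinuationOptions.ExecuteSynchronously);
    }
}

public static class WaitAsyncDemo
{
    // Hypothetical caller: branches on success without a try/catch around the await.
    public static async Task<string> DescribeAsync(Task task)
    {
        bool succeeded = await task.WaitAsync();
        return succeeded ? "completed" : "failed or canceled";
    }

    // Builds an already-faulted task without running any code on the thread pool.
    public static Task CreateFaultedTask()
    {
        var tcs = new TaskCompletionSource<int>();
        tcs.SetException(new InvalidOperationException("boom"));
        return tcs.Task;
    }
}
```

Awaiting `WaitAsyncDemo.DescribeAsync(WaitAsyncDemo.CreateFaultedTask())` yields "failed or canceled", while passing `Task.FromResult(1)` yields "completed". Note that the original exception is not rethrown; callers that need it still have to inspect the task's `Exception` property.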

Read more: Being Cellfish

Open Kernel Crash Dumps in Visual Studio 11

Posted by jasper22 at 13:06

A dream is coming true. A dream where all the debugging you’ll ever do on your developer box is going to be in a single tool – Visual Studio.

In a later post, I will discuss device driver development in Visual Studio 11, which is another dream come true. For now, let’s take a look at how Visual Studio can open kernel crash dumps and perform crash analysis with all the comfy tool windows and UI that we know and love.

To perform kernel crash analysis in Visual Studio 11, you will need to install the Windows Driver Kit (WDK) on top of Visual Studio. Go on, I’ll wait here.

First things first – you go to File | Open Crash Dump, and you’re good to go:

Visual Studio will load that dump file and open the initial analysis window – which is a new tool called Debugger Immediate Window.

Read more: DZone

January 2013 Security Release ISO Image

Posted by jasper22 at 11:55

Overview

This DVD5 ISO image file contains the security updates for Windows released on Windows Update on January 8, 2013. The image does not contain security updates for other Microsoft products. This DVD5 ISO image is intended for administrators that need to download multiple individual language versions of each security update and that do not use an automated solution such as Windows Server Update Services (WSUS). You can use this ISO image to download multiple updates in all languages at the same time.

Important: Be sure to check the individual security bulletins at http://technet.microsoft.com/en-us/security/bulletin prior to deployment of these updates to ensure that the files have not been updated at a later date.

The below Operating Systems will contain the following number of languages unless otherwise noted:

Windows XP - 24 languages

Windows XP x64 Edition - 2 languages

Windows Server 2003 - 18 languages

Windows Server 2003 x64 Edition - 11 languages

Windows Server 2003 for Itanium-based Systems - 4 languages

Windows Vista - 36 languages

Windows Vista for x64-based Systems - 36 languages

Windows Server 2008 - 19 languages

Windows Server 2008 x64 Edition - 19 languages

Windows Server 2008 for Itanium-based Systems - 4 languages

Windows Server 2008 R2 x64 Edition - 19 languages

Windows Server 2008 R2 for Itanium-based Systems - 4 languages

Windows 7 - 36 languages

Windows 7 for x64-based Systems - 36 languages

Read more: MS Download

Microsoft Research at CES: IllumiRoom

Posted by jasper22 at 10:33

Earlier this morning at CES, Eric Rudder, Microsoft’s Chief Technology Strategy Officer, joined the Samsung keynote to share Microsoft’s vision for extending computing interactions to any surface in your home. This wasn’t a product launch but I’m excited by the potential shown in the research that we shared.

Imagine a space like your kitchen or a classroom achieving that same level of interactivity as your phone - this will happen through a combination of embedded devices and sensors such as Kinect for Windows. Our research demo only covers educational and entertainment scenarios but the possibilities are endless.

Read more: Next at Microsoft

Android Updates: Why Is Cyanogen So Much Faster Than Google/OEMs?

Posted by jasper22 at 10:15

A little while ago I wrote an article about 5 things I hated about Android, and I realized many of the things I hated so much are problems that one team of developers has successfully eliminated on its own. Who? Why, Cyanogen and the CyanogenMod team, of course. I first discovered Cyanogen after getting the first Android phone (HTC Dream/T-Mobile G1) back in 2008. While I liked the phone, the speed didn’t impress me. I then went to Google and entered “how to make the HTC Dream faster”, and lo and behold... XDA Developers.

After being an active member there for years now and witnessing the insane things that these (and many other) talented developers can do, I was faced with 2 questions: Why don’t Google and the Android OEMs hire developers from XDA to help get updates of Android out faster? And how is Cyanogen so much faster than Google and OEMs when it comes to pushing out updates and optimizing/improving them?

What CyanogenMod Does

For anyone who doesn’t know Cyanogen, he is basically the Android Godfather when it comes to custom ROMs. His ROM is the base for almost all other Android ROMs, and if it weren’t for him and his talented team, the rooting and modding scene would certainly look a lot different. This is the team that brought Ice Cream Sandwich to devices that Google and multiple OEMs stated could never receive the update (due to hardware compatibility issues). This is the team that brought Ice Cream Sandwich to multiple devices 4 months before any OEM or carrier started rolling it out. This is the team that can provide Android updates for the device you just bought a year ago but is no longer supported by OEMs. What they have done with Android devices is nothing short of pure genius.

Read more: Android PIT

Android Secret Codes and Hacks

Posted by jasper22 at 09:35

Objectives:

- How to find IMEI Number of Android Phone?

- How to get Complete Information about your Phone and Battery of Android Phone?

- How to Reset Android Phone?

- How to Factory Reset Android Phone?

- How to Format Android Phone?

- How to monitor your GTalk Service of your Android Phone?

- How to check camera settings of your Android Phone?

- How to change settings of End Button of Android Phone?

- How to change settings of Power Button of Android Phone?

- How to switch off Android phone directly?

- How to take backup of Android Phone?

- How to backup your images, songs, videos, files, etc. from Android Phone?

- How to enter into service mode of Android Phone?

- How to test Bluetooth of Android Phone?

- How to test GPS of Android Phone?

- How to test WLAN of Android Phone?

- How to test Wireless LAN of Android Phone?

- How to check MAC address of Android Phone?

This is generally for people who don’t know much about phones and so get cheated when buying new or resold phones, as well as during repairs.

Read more: Atul Palandurkar

Check your code portability with PCL Compliance Analyzer

Posted by jasper22 at 09:09

I am extracting parts of my Simple.Data OData adapter to make a portable class library (PCL). The goal is to create an OData library available for desktop .NET platforms, Windows Store, Silverlight, Windows Phone and even Android/iOS (using Xamarin Mono). To study platform-specific PCL capabilities I used an Excel worksheet provided by Daniel Plaisted (@dsplaisted). It’s a very helpful document, but it would be much easier if I could simply point some tool to an assembly file and it would show its portable and non-portable calls.

I haven’t found such a tool, so I wrote one. It’s called PCL Compliance Analyzer. Select an assembly file and a set of platforms you want to target, and it will show you whether the assembly is PCL compliant and which calls are not. Here are the results for Mono.Cecil (that I used to scan assembly calls):

Read more: Vagif Abilov's blog on .NET

Read more: Github

.NET Weak Events for the Busy Programmer

Introduction

By now we are all familiar with delegates in .NET. They are so simple yet so powerful. We use them for events, callbacks, and many other wonderful derivative functions. However, delegates have a nasty little secret. When you subscribe to an event, the delegate backing that event will keep a strong reference to you. What does this mean? It means that you (the subscriber) cannot be garbage collected, since the garbage collection algorithm will still be able to find you through the event. Unless you like memory leaks, this is a problem. This article will demonstrate how to avoid this issue without forcing callers to manually unsubscribe. The aim of this article is to make using weak events extremely simple.

Background

Now I've seen my share of weak event implementations. They more or less do the job, including the WeakEventManager in WPF. The issue I always encountered is either their setup code or memory usage. My implementation of the weak event pattern will address memory issues and will be able to match any event delegate signature while making it very easy to incorporate into your projects.

Implementation

The first thing developers should know about delegates is that they are classes just like any other .NET class. They have properties that we can use to create our own weak events. The issue with the delegate, as I mentioned before, is that it keeps a strong reference to the subscriber. What we need to do is to create a weak reference to the subscriber instead. This is actually simple. All we have to do is use a WeakReference object to accomplish this.

RuntimeMethodHandle mInfo = delegateToMethod.Method.MethodHandle;

if (delegateToMethod.Target != null)
{
    WeakReference weak = new WeakReference(delegateToMethod.Target);
    subscriptions.Add(weak, mInfo);
}
else
{
    staticSubscriptions.Add(mInfo);
}

In the above example 'delegateToMethod' is our delegate. We can get to the method that it will eventually invoke and, most importantly, we can get to its Target, the subscriber. We then create a weak reference to the target. This allows the target to be garbage collected once nothing else references it.
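To make the idea concrete, here is a minimal sketch of how a stored weak subscription could later be invoked. It is not the article's implementation: `WeakSubscription`, `TryInvoke` and `Counter` are hypothetical names, and it keeps a `MethodInfo` rather than the `RuntimeMethodHandle` used above, purely to keep the example short.

```csharp
using System;
using System.Reflection;

// Hypothetical helper: remembers the handler's method plus a weak reference to its target.
public sealed class WeakSubscription
{
    private readonly WeakReference target;   // null when the handler is a static method
    private readonly MethodInfo method;

    public WeakSubscription(Delegate handler)
    {
        // For a multicast delegate, Method and Target describe only the last handler.
        method = handler.Method;
        target = handler.Target != null ? new WeakReference(handler.Target) : null;
    }

    // Invokes the handler if its target is still alive; returns false if it was collected.
    public bool TryInvoke(params object[] args)
    {
        object instance = null;
        if (target != null)
        {
            instance = target.Target;        // becomes a strong reference for this call only
            if (instance == null)
            {
                return false;                // subscriber is gone; caller can drop the entry
            }
        }
        method.Invoke(instance, args);
        return true;
    }
}

// Hypothetical subscriber used only to exercise the sketch.
public sealed class Counter
{
    public int Count;
    public void Increment() { Count++; }
}
```

An event source would keep a list of such entries, call `TryInvoke` on each when raising the event, and remove the entries that return false. Reflection-based invocation is slower than calling a delegate directly, which is one of the tradeoffs a fuller implementation has to weigh.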

Read more: Codeproject

#772 – Initializing an Array as Part of a Method Call

Posted by jasper22 at 17:53

When you want to pass an array to a method, you could first declare the array and then pass the array by name to the method.

Suppose that you have a Dog method that looks like this:

public void DoBarks(string[] barkSounds)
{
    foreach (string s in barkSounds)
        Console.WriteLine(s);
}

You can declare the array and pass it to the method:

Dog d = new Dog();

// Declare array and then pass
string[] set1 = { "Woof", "Rowf" };
d.DoBarks(set1);
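The excerpt stops before showing the inline form that the title refers to. As a sketch of what that presumably looks like, the array can be created and initialized directly in the argument list; `InlineArrayDemo` and `Run` are hypothetical names, and `Dog` is repeated so the example stands alone.

```csharp
using System;

public class Dog
{
    public void DoBarks(string[] barkSounds)
    {
        foreach (string s in barkSounds)
            Console.WriteLine(s);
    }
}

public static class InlineArrayDemo
{
    public static void Run()
    {
        Dog d = new Dog();

        // Create and initialize the array as part of the method call
        d.DoBarks(new string[] { "Woof", "Rowf" });

        // The element type can also be inferred from the initializer
        d.DoBarks(new[] { "Arf", "Yip" });
    }
}
```

The bare `{ "Woof", "Rowf" }` initializer is only allowed in a declaration, so the `new string[]` (or `new[]`) prefix is required when the array is written inline as an argument.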

Read more: 2,000 Things You Should Know About C#

Hibernating Rhinos Practices: Pairing, testing and decision making

Posted by jasper22 at 17:51

We actually pair quite a lot, either physically (most of our stations have two keyboards & mice for that exact purpose) or remotely (Skype / Team Viewer).

And yet, I would say that for the vast majority of cases, we don’t pair. Pairing is usually called for when we need two pairs of eyes to look at a problem, for non trivial debugging and that is about it.

Testing is something that I deeply believe in, at the same time that I distrust unit testing. Most of our tests are actually system tests that test the system end to end. Here is an example of such a test:

[Fact]
public void CanProjectAndSort()
{
    using(var store = NewDocumentStore())
    {
        using(var session = store.OpenSession())
        {
            session.Store(new Account
            {
                Profile = new Profile
                {
                    FavoriteColor = "Red",
                    Name = "Yo"
                }
            });
            session.SaveChanges();
        }

        using(var session = store.OpenSession())
        {
            var results = (from a in session.Query<Account>()
                               .Customize(x => x.WaitForNonStaleResults())
                           orderby a.Profile.Name
                           select new {a.Id, a.Profile.Name, a.Profile.FavoriteColor}).ToArray();

            Assert.Equal("Red", results[0].FavoriteColor);
        }
    }
}

Most of our new features are usually built first, then get tests for them. Mostly because it is more efficient to get things done by experimenting a lot without having tests to tie you down.

Read more: Ayende @ Rahien

Optimizing the Chili's dining experience

Posted by jasper22 at 17:39

Back in the days of Windows 95, one of my colleagues paid a visit to his counterparts over in the Windows NT team as part of a continuing informal engagement to keep the Windows NT developers aware of the crazy stuff we've been doing on the Windows 95 side.

One particular time, his visit occurred in late morning, and it ran longer than usual, so the Windows NT folks said, "Hey, it's lunchtime. Do you want to join us for lunch? It's sort of our tradition to go to Chili's for lunch on Thursdays."

My colleague cheerfully accepted their offer.

The group were shown to their table, and the Windows NT folks didn't even look at the menus. After all, they've been here every week for who-knows-how-long, so they know the menu inside-out.

When the server came to take the orders, they naturally let my colleague order first, seeing as he was their special guest.

"I'll have a chicken ranch sandwich."

The folks from the Windows NT team then placed their orders.

"I'll have the turkey sandwich."

"Turkey sandwich."

"A turkey sandwich for me, please."

Every single person ordered a turkey sandwich.

After the server left, my colleague asked, "Why do you all order the turkey sandwich?"

Read more: The Old New Thing

Six years of WPF; what's changed?

Posted by jasper22 at 09:00

Prior to working full time on Octopus Deploy, I spent a year building a risk system using WPF, for traders at an investment bank. Before that I worked as a consultant and trainer, mostly with a focus on WPF. I've lived and breathed the technology for the last six years, and in this post I'm going to share some thoughts about the past and future of WPF and the XAML-ites.

Six years ago, I wrote an article about validation in WPF on Code Project. I also wrote a custom error provider that supported IDataErrorInfo, since, would you believe, WPF in version 3.0 didn't support IDataErrorInfo. Later, I worked on a bunch of open source projects around WPF like Bindable LINQ (the original Reactive Programming for WPF, back before Rx was invented) and Magellan (ASP.NET-style MVC for WPF). I was even in the MVVM-hyping, Code Project-link sharing club known as the WPF Disciples for a while.

As I look back at WPF, I see a technology that had some good fundamentals, but has been really let down by poor implementation and, more importantly, by a lack of investment. I'm glad those days are behind me.

Back in 2006, here's what the markup for a pretty basic Window looked like (taken from an app I worked on in 2006):

<Window x:Class="PaulStovell.TrialBalance.UserInterface.MainWindow"
    xmlns:tb="clr-namespace:PaulStovell.TrialBalance.UserInterface"
    xmlns:tbp="clr-namespace:PaulStovell.TrialBalance.UserInterface.Providers"
    xmlns:system="clr-namespace:System;assembly=mscorlib"
    Title="TrialBalance"
    WindowState="Maximized"
    Width="1000"
    Height="700"
    Icon="{StaticResource Image_ApplicationIcon}"
    Background="{StaticResource Brush_DefaultWindowBackground}"
    x:Name="_this"
    >

I mean, look at all that ceremony! x:Class! XML namespace imports! Why couldn't any of that stuff be declared in one place, or inferred by convention?

Fortunately, it's now 2012, and things have come a long way. Here's what that code would look like if I did it today:

Read more: Paul Stovell

Real Scenario: folder deployment scenarios with MSDeploy

Hi everyone, Sayed here. I recently had a customer, Johan, contact me to help with some challenges regarding deployment automation. He had some very specific requirements, but he was able to automate the entire process. He has been kind enough to agree to write up his experience to share with everyone. His story is below. If you have any comments please let us know. I will pass them to Johan.

I’d like to thank Johan for his willingness to write this up and share it. FYI if you’d like me to help you in your projects I will certainly do my best, but if you are willing to share your story like Johan it will motivate me more :) – Sayed

Folder deployment scenarios with the MsDeploy command line utility

We have an Umbraco CMS web site where deployment is specific to certain folders only and not the complete website. Below is a snapshot of a typical Umbraco website.

I have omitted root files such as web.config as they never get deployed. Now as we develop new features, only those purple folders get modified, so there is no need to redeploy the bulky yellow folders, which mostly contain the Umbraco CMS admin system. I will refer to these purple folders as release files.

The green media folder is a special case. Our ecommerce team modifies the content on a daily basis in a CMS environment. They may publish their changes to production at will. Content-related files from this folder automatically synchronise to the production environment through a file watcher utility. Thus the CMS and PROD environments always contain the latest versions and we can never overwrite this.

This results in a special case for deployment. These are the steps we follow:

1. When preparing the QA environment, we first update the media folder from CMS

2. Then we transfer all the release files from DEV to QA

3. After QA sign off, we deploy those same release files to PROD

Read more: .NET Web Development and Tools Blog

HttpClient Versus WebClient with .Net and C#

Posted by jasper22 at 17:57

I often find I do what I know over and over again and don’t look for new solutions if the old tried and true solution works. At my last hackathon, I wrote an app that screen scraped the Starbucks site. I noticed when I looked at the site that a redirect happened after sign in. My WebClient call did not follow the redirect. I asked for help from a very bright Microsoft Azure guy (Josh Twist) who blogs at http://www.thejoyofcode.com/About_us.aspx. Josh suggested switching to HttpClient and making sure to set the option that follows redirects.
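The excerpt does not name the option. Assuming it means `AllowAutoRedirect` on `HttpClientHandler`, a minimal sketch looks like this; `RedirectFollowingClient`, `CreateHandler` and `FetchAsync` are hypothetical names, and the redirect limit of 10 is an arbitrary choice.

```csharp
using System;
using System.Net.Http;
using System.Threading.Tasks;

public static class RedirectFollowingClient
{
    // Builds a handler that follows redirects and keeps cookies between requests.
    public static HttpClientHandler CreateHandler()
    {
        return new HttpClientHandler
        {
            AllowAutoRedirect = true,        // follow 3xx responses automatically
            MaxAutomaticRedirections = 10,   // assumption: a small cap to stop redirect loops
            UseCookies = true                // sign-in flows usually depend on cookies
        };
    }

    // Fetches a page body; the URL is supplied by the caller.
    public static async Task<string> FetchAsync(string url)
    {
        using (HttpClientHandler handler = CreateHandler())
        using (HttpClient client = new HttpClient(handler))
        {
            HttpResponseMessage response = await client.GetAsync(url);
            response.EnsureSuccessStatusCode();
            return await response.Content.ReadAsStringAsync();
        }
    }
}
```

Both `AllowAutoRedirect` and `UseCookies` already default to true on `HttpClientHandler`, so setting them explicitly mostly documents the intent. A long-lived application would normally reuse one `HttpClient` instead of creating one per request as this sketch does.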

Read more: Peter Kellner

Support and Q&A for Solid-State Drives

Posted by jasper22 at 17:51

There’s a lot of excitement around the potential for the widespread adoption of solid-state drives (SSD) for primary storage, particularly on laptops and also among many folks in the server world. As with any new technology, as it is introduced we often need to revisit the assumptions baked into the overall system (OS, device support, applications) as a result of the performance characteristics of the technologies in use. This post looks at the way we have tuned Windows 7 to the current generation of SSDs. This is a rapidly moving area and we expect that there will continue to be ways we will tune Windows and we also expect the technology to continue to evolve, perhaps introducing new tradeoffs or challenging other underlying assumptions. Michael Fortin authored this post with help from many folks across the storage and fundamentals teams. --Steven

Many of today’s Solid State Drives (SSDs) offer the promise of improved performance, more consistent responsiveness, increased battery life, superior ruggedness, quicker startup times, and noise and vibration reductions. With prices dropping precipitously, most analysts expect more and more PCs to be sold with SSDs in place of traditional rotating hard disk drives (HDDs).

In Windows 7, we’ve focused a number of our engineering efforts with SSD operating characteristics in mind. As a result, Windows 7’s default behavior is to operate efficiently on SSDs without requiring any customer intervention. Before delving into how Windows 7’s behavior is automatically tuned to work efficiently on SSDs, a brief overview of SSD operating characteristics is warranted.

Random Reads: A very good story for SSDs

SSDs tend to be very fast for random reads. Most SSDs thoroughly trounce traditional HDDs because the mechanical work required to position a rotating disk head isn’t required. As a result, the better SSDs can perform 4 KB random reads almost 100 times faster than the typical HDD (about 1/10th of a millisecond per read vs. roughly 10 milliseconds).

Sequential Reads and Writes: Also Good

Sequential read and write operations range from quite good to superb. Because flash chips can be configured in parallel and data spread across the chips, today’s better SSDs can read sequentially at rates greater than 200 MB/s, which is close to double the rate many 7200 RPM drives can deliver. For sequential writes, we see some devices greatly exceeding the rates of typical HDDs, and most SSDs doing fairly well in comparison. In today’s market, there are still considerable differences in sequential write rates between SSDs. Some greatly outperform the typical HDD, others lag by a bit, and a few are poor in comparison.

Random Writes & Flushes: Your mileage will vary greatly

The differences in sequential write rates are interesting to note, but for most users they won’t make for as notable a difference in overall performance as random writes.

What’s a long time for a random write? Well, an average HDD can typically move 4 KB random writes to its spinning media in 7 to 15 milliseconds, which has proven to be largely unacceptable. As a result, most HDDs come with 4, 8 or more megabytes of internal memory and attempt to cache small random writes rather than wait the full 7 to 15 milliseconds. When they do cache a write, they return success to the OS even though the bytes haven’t been moved to the spinning media. We typically see these cached writes completing in a few hundred microseconds (so 10X, 20X or faster than actually writing to spinning media). In looking at millions of disk writes from thousands of telemetry traces, we observe 92% of 4 KB or smaller IOs taking less than 1 millisecond, 80% taking less than 600 microseconds, and an impressive 48% taking less than 200 microseconds. Caching works!

On occasion, we’ll see HDDs struggle with bursts of random writes and flushes. Drives that cache too much for too long and then get caught with too much of a backlog of work to complete when a flush comes along, have proven to be problematic. These flushes and surrounding IOs can have considerably lengthened response times. We’ve seen some devices take a half second to a full second to complete individual IOs and take 10’s of seconds to return to a more consistently responsive state. For the user, this can be awful to endure as responsiveness drops to painful levels. Think of it, the response time for a single I/O can range from 200 microseconds up to a whopping 1,000,000 microseconds (1 second).

When presented with realistic workloads, we see the worst of the SSDs producing very long IO times as well, as much as one half to one full second to complete individual random write and flush requests. This is abysmal for many workloads and can make the entire system feel choppy, unresponsive and sluggish.

Random Writes & Flushes: Why is this so hard?

Read more: Engineering Windows 7

TCP/IP Fundamentals for Microsoft Windows

Posted by jasper22 at 16:51

Overview

This online book is a structured, introductory approach to the basic concepts and principles of the Transmission Control Protocol/Internet Protocol (TCP/IP) protocol suite, how the most important protocols function, and their basic configuration in the Microsoft Windows Vista, Windows Server 2008, Windows XP, and Windows Server 2003 families of operating systems. This book is primarily a discussion of concepts and principles to lay a conceptual foundation for the TCP/IP protocol suite and provides an integrated discussion of both Internet Protocol version 4 (IPv4) and Internet Protocol version 6 (IPv6).

Read more: MS Download

Hidden Pitfalls With Object Initializers

Posted by jasper22 at 16:50

I love automation. I’m pretty lazy by nature and the more I can offload to my little programmatic or robotic helpers the better. I’ll be sad the day they become self-aware and decide that it’s payback time and enslave us all.

But until that day, I’ll take advantage of every bit of automation that I can.

As developers, it’s important for us to think hard about our code and take care in its crafting. But we’re all fallible. In the end, I’m just not smart enough to remember ALL the possible pitfalls of coding ALL OF THE TIME, such as avoiding the Turkish I problem when comparing strings. If you are, more power to you!

I try to keep the number of Code Analysis rules I exclude to a minimum. The tool has saved my ass many times, but it has also strung me out in a harried attempt to make it happy. Nothing pleases it. Sure, when it gets ornery, it’s easy to suppress a rule. I try hard to avoid that because suppressing one violation sometimes hides another.

Here’s an example of a case that confounded me today. The following very straightforward-looking code ran afoul of a Code Analysis rule.

    public sealed class Owner : IDisposable
    {
        Dependency dependency;

        public Owner()
        {
            // This is going to cause some issues.
            this.dependency = new Dependency { SomeProperty = "Blah" };
        }

        public void Dispose()
        {
            this.dependency.Dispose();
        }
    }

    public sealed class Dependency : IDisposable
    {
        public string SomeProperty { get; set; }

        public void Dispose()
        {
        }
    }

Code Analysis reported the following violation:

CA2000 Dispose objects before losing scope

In method 'Owner.Owner()', object '<>g__initLocal0' is not disposed along all exception paths. Call System.IDisposable.Dispose on object '<>g__initLocal0' before all references to it are out of scope.

That’s really odd. As you can see, dependency is disposed when its owner is disposed. So what’s the deal?

Can you see the problem?
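
A hint is in the name `<>g__initLocal0`: an object initializer is compiled into a hidden temporary that is constructed, has its properties set, and only then is assigned to the field. If a property setter throws, nothing disposes that temporary. The sketch below spells the expansion out by hand and applies the try/finally pattern commonly suggested for CA2000. It is one way to satisfy the rule, not necessarily the fix the original post settles on; the `temp` local and the `IsDisposed` property are illustrative additions that are not in the original code.

```csharp
using System;

public sealed class Dependency : IDisposable
{
    public string SomeProperty { get; set; }

    // Hypothetical helper so the effect of Dispose can be observed.
    public bool IsDisposed { get; private set; }

    public void Dispose()
    {
        IsDisposed = true;
    }
}

public sealed class Owner : IDisposable
{
    Dependency dependency;

    public Owner()
    {
        // Hand-written version of what the object initializer expands to.
        // 'temp' plays the role of the compiler's hidden local.
        Dependency temp = null;
        try
        {
            temp = new Dependency();
            temp.SomeProperty = "Blah";  // if this setter throws, finally disposes temp
            this.dependency = temp;      // ownership moves to the field
            temp = null;                 // so finally must not dispose it
        }
        finally
        {
            if (temp != null)
            {
                temp.Dispose();
            }
        }
    }

    public void Dispose()
    {
        this.dependency.Dispose();
    }
}
```

The only behavioral difference from the original constructor is on the exception path: a partially initialized `Dependency` is disposed instead of being left for the finalizer.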

Read more: haacked

Phishing: a new trend of pasting over QR codes in public places

Posted by jasper22 at 16:01

Good day,

With this post I want to warn the Russian-speaking community about a new phishing trend that is moving across Europe in seven-league strides. It has currently hit Germany. At this rate I think it will reach Russia and the CIS very quickly.

The idea is that a QR code on a poster in an airport, in an advertising brochure at a doctor's office, or for example on an information sticker hanging in a bank is neatly pasted over with another one, which leads to the phisher's malicious page. Sometimes the code is even cut out and a new one glued in, for example from inside the bank, on a poster behind glass on an outside wall or on the entrance door. From outside, such a swap is practically invisible under the glass and raises no doubt that the QR code belongs to the bank.

Even people who take the security of their data seriously fall for this trick, for example those who check the URL in the browser before paying with PayPal, and so on. This is explained by the high level of trust in information displayed, for example, inside a large bank.

Among my acquaintances there are already two cases:

- One had all the passwords saved in his phone's browser stolen and changed after visiting a site advertising a film, reached through a QR code scanned at Frankfurt airport. On arriving in Hamburg he could no longer log in anywhere; (UPD) Details in the comments…

Read more: Habrahabr.ru

OpenVPN v2.3

Posted by jasper22 at 15:44

OpenVPN 2.3 includes major changes compared to the latest 2.2.x ("oldstable") release:

- Full IPv6 support

- SSL layer modularised, enabling easier implementation for other SSL libraries

- PolarSSL support as a drop-in replacement for OpenSSL

- New plug-in API providing direct certificate access, improved logging API and easier to extend in the future

- Added 'dev_type' environment variable to scripts and plug-ins - which is set to 'TUN' or 'TAP'

- New feature: --management-external-key - to provide access to the encryption keys via the management interface

- New feature: --x509-track option, more fine grained access to X.509 fields in scripts and plug-ins

- New feature: --client-nat support

- New feature: --mark which can mark encrypted packets from the tunnel, suitable for more advanced routing and firewalling

- New feature: --management-query-proxy - manage proxy settings via the management interface (supersedes --http-proxy-fallback)

- New feature: --stale-routes-check, which cleans up the internal routing table

- New feature: --x509-username-field, where other X.509v3 fields can be used for the authentication instead of Common Name

- Improved client-kill management interface command

- Improved UTF-8 support - and added --compat-names to provide backwards compatibility with older scripts/plug-ins

- Improved auth-pam with COMMONNAME support, passing the certificate's common name in the PAM conversation

- More options can now be used inside <connection> blocks

- Completely new build system, enabling easier cross-compilation and Windows builds

- Much of the code has been better documented

- Many documentation updates

- Plenty of bug fixes and other code clean-ups

Read more: OpenVPN v2.3

WCF Configuration without Configuration

Posted by jasper22 at 15:33

Before Christmas, I worked on automatically creating WCF configuration from a WSDL document.

I got to the point where I can successfully create a ServiceEndpoint and its Binding from the WSDL (ServiceContractGenerator.cs:843 in my baulig/work-wsdl-config2), but ran into several problems when creating the .config file.

The first issue I ran into was merging the new configuration items that were generated from the WSDL into an existing app.config file. The main problem is distinguishing between elements that the user manually put into the app.config, new items from the WSDL file, and items from the WSDL file that were previously added automatically (running "Update Service Reference" twice in MonoDevelop shouldn't add configuration twice). Visual Studio uses the configuration.svcinfo / configuration91.svcinfo files to cache the elements that it added to the app.config, but their format is undocumented (it's XML, but there's a checksum element that I have no idea how to generate, and I didn't find any information about it on the internet).

The second big issue is automatically configuring a WCF client without System.Configuration, so it can be used in MonoTouch.

Microsoft uses some .xml inside a .zip file for Silverlight (but I also couldn't find any specification for the format) and Mono is using the SilverlightClientConfigLoader class to parse an file. But this class is incomplete, only handles the very basic cases and doesn't provide any useful error reporting to the user.

And the third big issue is that our System.Configuration implementation is buggy and many of the System.ServiceModel.Configuration APIs are unimplemented.

Requirements

So I was thinking about a solution which satisfies the following requirements:

- Does not use Reflection or System.Configuration, so we can use it for the mobile platform.

- Allows automatic configuration of a WCF client from a WSDL document - so you simply do "Add Service Reference" in MonoDevelop and it sets you up just like Visual Studio does, without any manual editing of configuration files.

- Is at least somewhat compatible with the app.config format.

The Solution

The solution I finally came up with was a custom XML file format.

It is very similar to the section in the app.config file, but not identical. The main difference is that the name of each XML element is unique and doesn't depend on the context it's used in.

Read more: Martin's Activity Log

Line-by-line profiling with dotTrace Performance

Posted by jasper22 at 14:59

dotTrace Performance allows us to profile an application in several ways. We can choose between sampling, tracing and line-by-line profiling. Generally, sampling is used first to get a high-level overview of call performance in an application, after which tracing and line-by-line profiling are used to close in on a performance issue.

Profiling types

Sampling is the fastest and the least precise mode of profiling. With sampling, dotTrace basically grabs current call stacks on all threads once in a while. When capturing a snapshot, the number of calls isn’t available and times are approximate. Accuracy cannot be more than the sampling frequency.

Tracing is slower yet more precise than sampling. Instead of grabbing call stacks on all threads, tracing subscribes to notifications from the CLR whenever a method is entered and left. The time between these two notifications is taken as the execution time of the method. Tracing gives us precise timing information and the number of calls on the method level.

Line-by-line is the slowest yet most precise mode of profiling. It collects timing information for every statement in methods and provides the most detail on methods that perform significant work. We can specify exactly which methods we want to profile or profile all methods for which dotTrace Performance can find symbol information.

Read more: JetBrains .NET Tools Blog

Meet the BitTorrent client for Google Chrome

Posted by jasper22 at 14:55

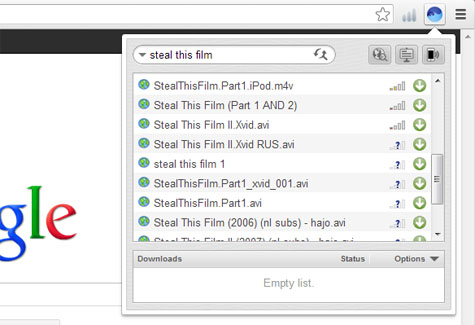

A new extension for Google Chrome from the creators of uTorrent turns the popular browser into a BitTorrent client in just a couple of mouse clicks. In an interview with TorrentFreak, representatives of BitTorrent Inc. said they had been working on the extension for the past six months, and that the motivation for it was the same thing that once prompted Bram Cohen to create the first BitTorrent client: sharing large files over the internet.

Since its modest debut 11 years ago, BitTorrent has become one of the largest generators of internet traffic. Hundreds of petabytes of data travel through cyberspace every day thanks to torrents, which have become commonplace for millions of people.

The most popular client, uTorrent, currently helps more than 125 million active users share files and remains the weapon of choice for many advanced torrent fans.

Nevertheless, BitTorrent Inc.'s flagship client now has a little brother that installs in a couple of clicks and suits experienced users and newcomers to the world of torrents alike.

BitTorrent Surf is currently available only for Google Chrome, but Firefox fans should not despair, since a version of the extension for their browser is next in line. Installed from the Chrome Webstore, BitTorrent Surf integrates a torrent client and a torrent search engine into the browser while requiring practically no configuration.

After installation, a blue circular BitTorrent Surf icon appears in the upper right corner of Google Chrome and is activated with a mouse click. In the extension window that opens, type in what you are looking for, and after a short while the client displays the search results with links ready for download.

Read more: Habrahabr.ru

One line browser notepad

Posted by jasper22 at 13:04

Introduction

Sometimes I just need to type garbage. Just to clear out my mind. Using editors to type such gibberish annoys me because it clutters my project workspace (I'm picky, I know).

So I do this. Since I live in the browser, I just open a new tab and type this into the URL bar:

data:text/html, <html contenteditable>

Voila, browser notepad.

Why does it work?

You don't need to remember it. It's not rocket science.

Read more: Jose Jesus Perez Aguinaga

Foreach Behavior With Anonymous Methods and Captured Value

Posted by jasper22 at 12:55

Recently I've been researching the behavior of the foreach loop in C# 5.0 and earlier versions. Here's a snippet I tried that produced different output in different versions of C#.

    var values = new List<string>() { "Bob", "is", "stupid" };
    var funcs = new List<Func<string>>();

    foreach (var v in values)
        funcs.Add(() => v);

    foreach (var f in funcs)
        Console.WriteLine(f());

    Console.Read();

When I ran this code with Visual Studio 2010 I got the output as:

stupid stupid stupid

But when I tried the same code in Visual Studio 2012 the output was:

Bob is stupid

That is the output I expected, so I wondered why the two versions differ. I studied how the foreach loop is implemented and found that in versions of C# before 5.0 the loop variable is logically declared once, outside the loop body. An anonymous method that uses the loop variable captures the variable itself, not a copy of its value at that moment, so every anonymous method created in the loop shares the same variable. By the time the delegates run, the loop has finished and that single variable holds the last element, which is why the program printed "stupid stupid stupid". In C# 5.0 the loop variable is logically declared inside each iteration, so each anonymous method captures its own variable.

Now let's take a deep dive into captured values of variables in anonymous methods.

Outer variable: Any local variable, value parameter, or parameter array whose scope includes an anonymous method expression is called an outer variable of that anonymous-method-expression. In an instance of a function member of a class, the "this" value is considered a value parameter and is an outer variable of any anonymous-method-expression contained within that function member.

Captured value: When an outer variable is referenced by an anonymous method, the outer variable is said to have been captured by the anonymous method. Ordinarily, the lifetime of a local variable is limited to execution of the block or statement with which it is associated. However, the lifetime of a captured outer variable is extended at least until the delegate referring to the anonymous method becomes eligible for garbage collection.
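
For code that still has to compile with a pre-5.0 compiler, the usual workaround is to copy the loop variable into a local declared inside the loop body, so that each anonymous method captures its own outer variable. A minimal sketch of that idea follows; the `CaptureDemo` class, the `Run` method and the `copy` local are names invented for this example.

```csharp
using System;
using System.Collections.Generic;

public static class CaptureDemo
{
    public static List<string> Run()
    {
        var values = new List<string> { "Bob", "is", "stupid" };
        var funcs = new List<Func<string>>();

        foreach (var v in values)
        {
            // 'copy' is declared inside the body, so every iteration gets a
            // fresh variable and each lambda captures a different one.
            var copy = v;
            funcs.Add(() => copy);
        }

        // Invoke the delegates only after the loop has finished.
        var results = new List<string>();
        foreach (var f in funcs)
            results.Add(f());
        return results;
    }

    public static void Main()
    {
        // Prints: Bob is stupid
        Console.WriteLine(string.Join(" ", Run()));
    }
}
```

Because the lambdas no longer share a variable, the result does not depend on which compiler version is used. The same copy is still needed in `for` loops, whose loop variable was not changed in C# 5.0.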

Read more: C# Corner

Hyper-V for Developers Part 1

Posted by jasper22 at 12:01

The goal of this blog series is to share how I use the Windows 8 Client Hyper-V feature to model application infrastructure. I will share my techniques and tips to get the developer focused on getting environments up and running as quickly as possible.

The experiences that led me to write this series stem from observing difficulties and oversights between developers and operations teams during application deployment and troubleshooting. It is not uncommon for IT organizations to have many environments that increase in complexity as an application moves through development, integration testing, quality assurance, performance testing, and production environments. Commonly there are more “real life” components present in high level environments such as production and more mocking and simulation in lower environments such as development. The single-machine developer perspective of an application may be quite different from that of an IT Pro supporting a farm of servers. When deployed in “real life” the application may have to work across many servers, load balancers, subnets, and potentially geographically dispersed datacenters. A number of questions for the developer may arise as the application is deployed to higher level environments.

Read more: Ken Kilty's Blog

Cross Browser Debugging integrated into Visual Studio with BrowserStack

Posted by jasper22 at 11:56

TL;DR - Too Long Didn't Read Version

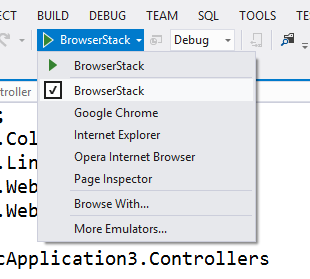

BrowserStack Integrated into Visual Studio

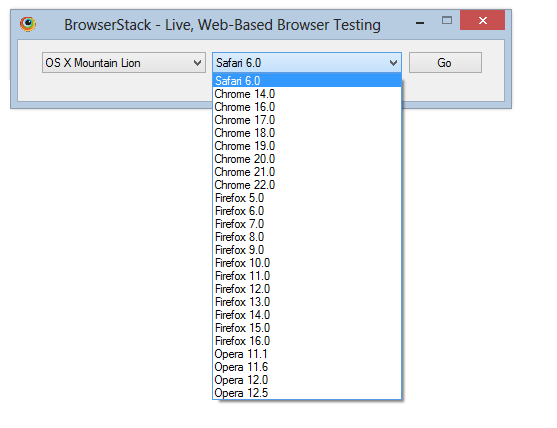

From a debug session inside Visual Studio 2012 today with ASP.NET 2012.2 RC installed. Click the dropdown next to your Debug button, then click on "More Emulators" to go to http://asp.net/browsers and get the BrowserStack Visual Studio extension and three months of free service. There are other browsers to download as well, like the Electric Plum iPhone/iPad simulator.

SIDE NOTE: When the VS2012.2 Update is finalized, you'll need to install just it and you'll get the ASP.NET Web Tools as well.

New Online Tools for Modern Sites

Head over to http://modern.ie for a bunch of tools for making cross-browser sites easier, including an online site analyzer and downloadable Virtual Machines for any Virtual Platform.

I do a lot of cross-browser testing and I've been on a personal mission to make "Browse With..." and multiple browser debugging suck less in Visual Studio. This has been going on for years.

In 2010, I used PowerShell and hacked together a browser switcher script in VS2010.

My buddy Kzu and friends saw this script, realized it sucked and made things better on VS2012 with their Default Browser Switcher addon.

I worked with Jorge on our team and we delivered the browser switcher dropdown directly integrated in VS2012. This feature is also in WebMatrix.

Later the iPhone/iPad local simulator from Electric Plum added tight integration with Visual Studio so you can test locally.

But still, it's too hard. There's been some Virtual Machines up on the Microsoft Download Center but it's tedious to dig around and get the one you need.

BrowserStack

Today the IE team announced a new site at http://modern.ie to make cross-browser testing easier. Even cooler, they launched a partnership with BrowserStack.com to give us all a three-month free trial of their hosted browser virtualization service.

Read more: Scott Hanselman

Microsoft confirms XNA is over

Posted by jasper22 at 11:20

Computing giant ending popular indie development toolset, but DirectX will remain

Microsoft has confirmed that it will not be producing future versions of its indie development toolset XNA.

Reports emerged yesterday that the software maker was planning to end upkeep of its XNA Game Studio, and now a spokesperson has confirmed as much to Polygon.

A former Microsoft employee shared an email that had been sent to game developers, which explained that Microsoft would no longer be maintaining the XNA toolset. That same email appeared to suggest that DirectX, the widely used API for games and video, would be phased out.

However, a Microsoft spokesperson said that there are no plans to discontinue DirectX for its Windows and Xbox platforms.

Read more: Developer online

The Truth About HttpHandlers and WebApi

Posted by jasper22 at 10:03

In 2012, when Microsoft introduced the ASP.NET WebAPI, I was initially confused about yet another way to generate and transmit content across an HttpRequest. After spending some time thinking about WebAPI over the past week or two and writing a few blocks of sample code, I think I finally have some clarity around my confusion, and these are the lessons I learned the hard way.

Old School API - SOAP and ASMX

When ASP.NET launched, the only framework available for creating and publishing content was WebForms. Everybody used it, and it worked well. If you wanted to create an interface for machine-to-machine communications you would create a SOAP service in an ASMX file. You could (and still can) add web references to projects that reference these services. The web reference gives you a first-class object with a complete API in your project that you can interact with.

Over time, it became less compelling to build the SOAP services as they transmitted a significant amount of wrapper content around the actual payload you wanted to work with. This extra content was messy, and other programming languages didn't have the tooling support to manage them well. Developers started looking for less complicated alternatives to communicate with connected clients. It turns out that there was another option available that was overlooked by many: HttpHandlers.

HttpHandlers in ASP.NET

HttpHandlers are the backbone of ASP.NET. An HttpHandler is any class that implements the IHttpHandler interface, handles an incoming request, and provides a response to webserver communications. IHttpHandler is a trivial interface with the following signature:

Read more: Codeproject

Microsoft Visual Studio 2012 update 2

Posted by jasper22 at 09:48

Overview

Visual Studio 2012 Update is providing continuous value to customers, adding new capabilities year-round to features in the main product release. These releases will be aligned with the core software development trends in the market, ensuring developers and development teams always have access to the best solution for building modern applications.

This is a community technology preview (CTP) for Visual Studio 2012 Update 2. These cumulative updates to Visual Studio 2012 include a variety of bug fixes and capability improvements. More details can be found here.

Statement of Support:

PLEASE NOTE: Technology Previews have not been subject to final validation and are not meant to be run on production workstations or servers. Since installation of Visual Studio CTPs and installation of Team Foundation Server CTPs work differently, please read the recommended upgrade approach for each product carefully.

For Visual Studio: The recommended approach for upgrading Visual Studio on developer workstations is installing the latest Visual Studio Update CTP on top of an RTM release or a previous CTP build of that Update. Visual Studio CTPs can be upgraded to a different build.

For Team Foundation Server: Do not install a Team Foundation Server Update CTP on a production server, as it will put the server in an unsupported state. Unlike with Visual Studio CTPs, installing a Team Foundation Server CTP fully replaces the current release on the server with the CTP. Team Foundation Server CTPs cannot be upgraded to future CTPs or releases nor “downgraded” to a previous release.

Read more: MS downloads

Things I Learned Reading The C# Specifications - Part 1

Posted by jasper22 at 09:37

After reading Jon Skeet’s excellent C# in Depth - again (3rd edition - to be published soon) I’ve decided to try and actually read the C# language specification…

Being a sensible kind of guy, I’ve decided to purchase the annotated version, which only covers topics up to .NET 4 but has priceless comments from several C# gurus.

After reading a few pages I was amazed to learn that a few things I knew to be true were completely wrong, so I’ve decided to write a list of new things I’ve learnt while reading this book.

Below you’ll find a short list of new things I learnt from reading the 1st chapter:

Not all value types are saved on the stack

Many developers believe that reference types are stored on the heap while value types are always stored on the stack – this is not entirely true.

First, it’s more of an implementation detail of the actual runtime and not a language requirement. More importantly, it’s not possible: consider a class (a.k.a. a reference type) that has an integer member (a.k.a. a value type). The class is stored on the heap and so are its members, including the value type, because its data is stored “by value” as part of the object.

    class MyClass
    {
        // stored in heap
        int a = 5;
    }

For more information read Eric Lippert’s post on the subject – he should know.

What the hell is “protected internal”

Read more: DZone
