Everything You Need To Know To Start Programming 64-Bit Windows Systems

One of the pleasures of working on the bleeding edge of Windows® is poking around in a new technology to see how it works. I don't really feel comfortable with an operating system until I have a little under-the-hood knowledge. So when the 64-bit editions of Windows XP and Windows Server™ 2003 appeared on the scene, I was all over them.

The nice thing about Win64 and the x64 CPU architecture is that they're different enough from their predecessors to be interesting, while not requiring a huge learning curve. While we developers would like to think that moving to x64 is just a recompile away, the reality is that we'll still spend far too much time in the debugger. A good working knowledge of the OS and CPU is invaluable.

In this article I'll boil down my experiences with Win64 and the x64 architecture to the essentials that a hotshot Win32® programmer needs for the move to x64. I'll assume that you know basic Win32 concepts, basic x86 concepts, and why your code should run on Win64. This frees me to focus on the good stuff. Think of this overview as a look at just the important differences relative to your knowledge of Win32 and the x86 architecture.

One nice thing about x64 systems is that you can use either Win32 or Win64 on the same machine without serious performance losses, unlike Itanium-based systems. And despite a few obscure differences between the Intel and AMD x64 implementations, the same x64-compatible build of Windows should run on either. You don't need one version of Windows for AMD x64 systems and another for Intel x64 systems.

I've divided the discussion into three broad areas: OS implementation details, just enough x64 CPU architecture to get by, and developing for x64 with Visual C++®.

The x64 Operating System

In any overview of the Windows architecture, I like to start with memory and the address space. Although a 64-bit processor could theoretically address 16 exabytes of memory (2^64), Win64 currently supports 16 terabytes, which is represented by 44 bits. Why can't you just load a machine up with 16 exabytes to use all 64 bits? There are a number of reasons.

For starters, current x64 CPUs typically allow only 40 bits (1 terabyte) of physical memory to be accessed. The architecture (but no current hardware) can extend this to up to 52 bits (4 petabytes). Even if that restriction were removed, the size of the page tables needed to map that much memory would be enormous.

Just as in Win32, the addressable range is divided into user-mode and kernel-mode areas. Each process gets its own unique 8 TB at the bottom end, while kernel-mode code lives in the upper 8 TB and is shared by all processes. The different versions of 64-bit Windows have different physical memory limits, as shown in Figure 1 and Figure 2.

Read more: MSDN Magazine

Silverlight Tip of the Day #15 – Communicating between JavaScript & Silverlight

Posted by jasper22 at 10:58

Communicating between JavaScript and Silverlight is, fortunately, relatively straightforward. The following sample demonstrates how to make the call both ways.

Calling Silverlight from JavaScript:

In the constructor of your Silverlight app, make a call to RegisterScriptableObject(). This call registers a managed object for scriptable access by JavaScript code. The first parameter is any key name you want to give. This key is referenced in your JavaScript code when making the call to Silverlight.

Add the function you want called in your Silverlight code. You must mark it with the [ScriptableMember] attribute.

In JavaScript, you can now call your Silverlight function directly through the document object. From my example below: document.getElementById("silverlightControl").Content.Page.UpdateText("Hello from Javascript!"); where “silverlightControl” is the ID of my Silverlight control.

Calling JavaScript from Silverlight:

JavaScript can be called directly via the HtmlPage.Window.Invoke() method.

Example for both:

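Page.xaml.cs:

using System.Windows.Browser;   // HtmlPage, ScriptableMember
using System.Windows.Controls;  // UserControl

namespace SilverlightApplication
{
    public partial class Page : UserControl
    {
        public Page()
        {
            InitializeComponent();

            // Expose this object to JavaScript under the key "Page".
            HtmlPage.RegisterScriptableObject("Page", this);

            // Call the TalkToJavaScript function defined in Default.aspx.
            HtmlPage.Window.Invoke("TalkToJavaScript", "Hello from Silverlight");
        }

        // Callable from JavaScript as control.Content.Page.UpdateText(...).
        [ScriptableMember]
        public void UpdateText(string result)
        {
            myTextbox.Text = result;
        }
    }
}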

Default.aspx:

<script type="text/javascript">
    function TalkToJavaScript(data)
    {
        alert("Message received from Silverlight: " + data);

        var control = document.getElementById("silverlightControl");
        control.Content.Page.UpdateText("Hello from Javascript!");
    }
</script>

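Page.xaml:

<UserControl x:Class="SilverlightApplication.Page"
    xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"
    xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml"
    Width="400" Height="300">
    <Grid x:Name="LayoutRoot" Background="White">
        <TextBlock x:Name="myTextbox">Waiting for call...</TextBlock>
    </Grid>
</UserControl>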

How to run WPF - XBAP as Full Trust Application

Posted by jasper22 at 10:51

Recently I worked on a WPF XBAP application that runs from an intranet website:

This application must have unrestricted access to the client's OS resources (which is unusual for XBAP projects):

I published it on a local website using the ClickOnce deployment mechanism:

Users can launch the application from the deployment page (or run the application setup):

I got a security error ("User has refused to grant required permissions to the application"):

Read more: Maxim

Accessing Web Services in Silverlight

Posted by jasper22 at 09:46 | Silverlight

Silverlight version 4 client applications run in the browser and often need to access data from a variety of external sources. A typical example involves accessing data from a database on a back-end server and displaying it in a Silverlight user interface. Another common scenario is to update data on a back-end service through a Silverlight user interface that posts to that service. These external data sources often take the form of Web services.

The services can be SOAP services created using the Windows Communication Foundation (WCF) or some other SOAP-based technology, or they can be plain HTTP or REST services. Silverlight clients can access these Web services directly or, in the case of SOAP services, by using a proxy generated from metadata published by the service.

Silverlight also provides the necessary functionality to work with a variety of data formats used by services. These formats include XML, JSON, RSS, and Atom. These data formats are accessed using Serialization components, LINQ to XML, LINQ to JSON, and Syndication components. Web services that a Silverlight application can access must conform to certain rules to allow such access. These rules are discussed within the relevant topics in this section.

In This Section

Describes how to create, configure, and debug Silverlight applications that use services, as well as how to create services intended for these applications.

Describes how to implement duplex communications between a Windows Communication Foundation (WCF) Web service and a Silverlight client, where a service can spontaneously push data updates to the Silverlight client as they occur.

Describes how to work with HTTP-based services (including REST services) directly, and how to access XML, JSON, RSS, and Atom data from these services with a Silverlight client.

Describes security issues that should be considered when accessing services from Silverlight clients and when building ASP.NET and WCF services intended for use by Silverlight clients.

Describes how to configure a service so that it can be accessed by Silverlight clients across domain boundaries.

Describes the subset of WCF features supported by Silverlight 4 and outlines where there are differences between the behavior of these features in Silverlight and the full .NET Framework.

Read more: MSDN

Accessing the ASP.NET Authentication, Profile and Role Service in Silverlight

Posted by jasper22 at 09:43

In ASP.NET 2.0, we introduced a very powerful set of application services in ASP.NET (Membership, Roles, and Profile). In 3.5 we created a client library for accessing them from Ajax and .NET clients and exposed them via WCF web services. For more information on the base-level ASP.NET application services that this walkthrough is based on, please see Stefan Schackow's excellent book Professional ASP.NET 2.0 Security, Membership, and Role Management.

In this tutorial I will walk you through how to access the WCF application services directly from the Silverlight client. This works super well if you have a site that is already using the ASP.NET application services and you just need to access them from a Silverlight client. (Special thanks to Helen for a good chunk of this implementation.)

Here is what I plan to show:

1. Login/Logout

2. Save personalization settings

3. Enable custom UI based on a user's role (for example, manager or employee)

4. A custom log-in control to make the UI a bit cleaner

Read more: Brad Abrams

How to: Use the ASP.NET Authentication Service to Log In Through Silverlight Applications

Posted by jasper22 at 09:39 | Silverlight

This topic describes how to authenticate the end users of your Silverlight-based ASP.NET Web site when you want to create a rich user log-in experience by using the full graphical power of Silverlight, instead of relying on an HTML-based mechanism, such as the ASP.NET Login Control. You can do this by using the ASP.NET Authentication service. For information about using this service, see ASP.NET Authentication Service Overview.

To use the ASP.NET Authentication service, you must have an ASP.NET site with Forms Authentication being accessed through a Silverlight application that is hosted on a Secure Sockets Layer-enabled (HTTPS) server. There are two requirements:

Secure Sockets Layer (SSL) is required because users must be able to verify the identity of your Silverlight application before trusting it with their passwords. Therefore, it is important to host XAP packages of Silverlight applications that accept passwords from SSL-enabled sites (https:// addresses), just like regular Web pages that accept passwords.

The Authentication service itself must be hosted with SSL to protect the user’s credentials when they travel over the wire.

To enable ASP.NET authentication on the service

In Solution Explorer, right-click the service project and select Add, then New Item, and select the Silverlight-enabled WCF Service template from the Silverlight category. Enter Authentication.svc in the Name box and click Add.

Delete the Authentication.svc.cs file. ASP.NET provides a built-in implementation for this service, so no code is required for this service.

Replace the contents of Authentication.svc with the following code.

<%@ ServiceHost Language="C#"
    Service="System.Web.ApplicationServices.AuthenticationService" %>

This directive accesses the AuthenticationService class, which contains the built-in Authentication service implementation provided by ASP.NET.

Ensure that the Authentication service is turned on by setting the enabled attribute of the <authenticationService> element in the configuration to true.

<system.web.extensions>
  <scripting>
    <webServices>
      <authenticationService enabled="true"
                             requireSSL="true"/>
    </webServices>
  </scripting>
</system.web.extensions>

Note that for debugging purposes, the requireSSL attribute can be set to false, but you must switch it back to true before going to production.

In the Web.config file, set both the name attribute of the <service> element and the contract attribute of the service <endpoint> element to System.Web.ApplicationServices.AuthenticationService.

<service name="System.Web.ApplicationServices.AuthenticationService">
  <endpoint address=""
            binding="customBinding"
            bindingConfiguration="WebApplication2.Authentication.customBinding0"
            contract="System.Web.ApplicationServices.AuthenticationService" />
  <endpoint address="mex"
            binding="mexHttpBinding"
            contract="IMetadataExchange" />
</service>

Change the <httpTransport /> element to the <httpsTransport /> element in the <customBinding> section.

<customBinding>
  <binding name="WebApplication2.Authentication.customBinding0">
    <binaryMessageEncoding />
    <httpsTransport />
  </binding>
</customBinding>

Now you are ready to host the service. Because the service is hosted over HTTPS, you will not be able to host it in Visual Studio. You will need to deploy the Web application to IIS. Do this on the Web tab of the Web application properties.

Note:

IIS must also be configured to support an HTTPS-based binding.

To log in to the service with the Silverlight application

Use Add Service Reference or Slsvcutil.exe in your Silverlight application to add a reference to Authentication.svc. See How to: Access a Service from Silverlight for instructions on how to use the Add Service Reference Tool.

Add any other services you need for your application (for example, MyService.svc) as described in How to: Host a Secure Service in ASP.NET for Silverlight Applications.

In your Silverlight application, use code similar to the following code to log in.

var proxy = new AuthenticationServiceClient();
proxy.LoginCompleted += new EventHandler<LoginCompletedEventArgs>(proxy_LoginCompleted);
// Arguments: user name, password, custom credential (unused), persistent cookie.
proxy.LoginAsync(userNameTextBox.Text, passwordTextBox.Text, null, false);

// Event handler:
void proxy_LoginCompleted(object sender, LoginCompletedEventArgs e)
{
    // e.Error reports a communication failure; e.Result is false
    // when the credentials were rejected.
    if (e.Error == null && e.Result)
    {
        // Log in successful, you now have an authentication cookie
        // and can call other services.
    }
}

After the login is successful, you can call the other secure services you have added (for example, MyService.svc). No additional authentication code is required to access these services.

You may be using the ClientHttp networking stack to propagate SOAP Faults to the client or for other reasons. For more information about reasons for using the networking stack based on the client operating system instead of the default browser networking stack, see How to: Make Requests to HTTP-Based Services. For more information about how to opt into the client networking stack, see How to: Specify Browser or Client HTTP Handling.

If you are using the client networking stack, cookies will not automatically be carried over between the Authentication service proxy and your service proxy. Some extra steps are needed to ensure that the authentication cookie returned by the Authentication service is used by your service proxy. Normally, if using the default BrowserHttp networking stack, the Web browser performs this automatically.

To enable WCF to give you access to the underlying cookie store that each proxy uses, add the <httpCookieContainer> binding element to the <binding> of <customBinding> (Silverlight) section above the <httpsTransport> element.

Read more: MSDN

Google Engineer Releases Open Source Bitcoin Client

A Google engineer has released an open source Java client for the Bitcoin peer-to-peer currency system, simply called BitcoinJ. Bitcoin is an Internet currency that uses a P2P architecture for processing transactions, avoiding the need for a central bank or payment system. Cio.com.au also has an interview with Gavin Andresen, the technical lead of the Bitcoin virtual currency system.

Read more: Slashdot

Augmenting your 3DS reality just got a little simpler thanks to an Android app

Posted by jasper22 at 22:14

Before we proceed any further, you owe it to yourself to check out our 3DS review or the video after the break in order to fully comprehend what Nintendo's augmented reality cards mean for 3DS gaming. We'll wait right here, take your time. Now that everyone's fully up to speed, an enterprising dev has put together an app that includes all of Ninty's add-in cards for its soon-to-be-launched handheld, allowing you to stash them on your Android smartphone and freeing up more pocket space for game cartridges and bubble gum. The descriptively titled 3DS AR Cards app costs nothing to own, though we're sure its maker will appreciate a note of thanks should you end up using it.

Read more: Engadget

Read more: Android Market

YouTube Video Editor gets impressive stabilization and 3D Video Creator

Posted by jasper22 at 22:03

Google has just updated its cloud-based YouTube video editor to include the fruits of its recent purchase, plus a bit of 3D thrown in for good measure. The in-browser editor can now dramatically reduce camera shake using technology acquired from Green Parrot Pictures. It works by charting the best camera path for you, as if you were using a dolly or tripod, using a 'unified optimization technique.' In essence, it gives you a one-click solution for clearing up your shaky camera work, and the best bit is that you can preview it in real-time before you publish. Considering how much processing power video optimization like this demands, Google's pushing some impressive cloud computing here, distributing the load across a range of servers.

If video stabilization wasn't enough to sate your demands for a cloud-based video editor, how about a bit of 3D? Google's integrated 3D video production using two separate streams -- the kind of thing you get when you bolt two cameras together filming at a set distance apart at the same time. It's been quite difficult to create a 3D video from two cameras using just free tools, but now Google has integrated it into the YouTube 3D Video Creator and made it compatible with YouTube's 3D features.

Read more: DownloadSquad

Microsoft's working on WGX, the future of PC gaming

Posted by jasper22 at 21:51

The video showcases the Windows Gaming Experience, or WGX for short, and touches upon four main areas, including Social, Identity, Search and Transaction, explaining how they work in a very Kinect kind of way. For those of you who are unfamiliar, WGX is an internal Microsoft team comprised of all gaming-related endeavors between all of Microsoft’s platforms. Though the video is from 2010, this is the closest look we have had yet at exactly what WGX’s vision is.

Read more: Gameguru

Read more: Youtube

Catel - WPF, Silverlight and WP7 MVVM library

Posted by jasper22 at 14:46

Project Description

Catel is more than just an MVVM framework. It also includes user controls and lots of enterprise library classes. The MVVM framework distinguishes itself from other frameworks by its simplicity, and it solves the "nested user control" problem with dynamic view models for user controls.

We are currently investigating support for Windows Phone 7. So far, it looks very promising. If you have any feedback on the Windows Phone 7 libraries, please let us know!

Our goal is to make Catel the best MVVM framework in the world. If you have any ideas, comments or feature requests, don't hesitate to create a new issue or start a discussion.

Please take the time to review the product, we'd love to hear your feedback to improve the product!

Not sure which MVVM framework to choose?

Take a look at the MVVM framework comparison sheet.

Read more: Codeplex

ideone

Posted by jasper22 at 14:39

Briefly about ideone

ideone.com is a... pastebin. But a pastebin like no other on the Internet. A more accurate description would be an online mini IDE and debugging tool.

Ideone is an Italian word for great ideas - because ideone.com is a place where your greatest ideas can spring to life.

ideone.com is designed mostly for programmers (but, of course, common plain text can also be uploaded). You can use it to:

share your code (that's obvious - it is a pastebin, isn't it? :)) in a neat way,

run your code on server side in more than 40 programming languages (number still growing)

and do it all with your own input data!

ideone.com also provides the free Ideone API, which is available as a web service. Its functionality allows you to build your own ideone-like service!

For logged-in users, Ideone offers the possibility to manage their code, publish multiple submissions in one go, and more.

All codes can be accessed through convenient hash links. Source code pages provide information about the code and its execution: memory usage, execution time, language and compiler version, code itself, input uploaded by the user, output generated by the program and error messages from compilers and interpreters.

It is in great measure your contribution that this service exists - it is you who share your ideas with us and report bugs and suggestions. We hope it is still going to be like this - as long as this site works! Thank you!

Read more: IDEone

Why is there the message '!Do not use this registry key' in the registry?

Posted by jasper22 at 14:38

Under Software\Microsoft\Windows\CurrentVersion\Explorer\Shell Folders, there is a message to registry snoopers: The first value is called "!Do not use this registry key" and the associated data is the message "Use the SHGetFolderPath or SHGetKnownFolderPath function instead."

I added that message.

The long and sad story of the Shell Folders key explains that the registry key exists only to retain backward compatibility with four programs written in 1994. There's also a TechNet version of the article which is just as sad but not as long.

One customer saw this message and complained, "That registry key and that TechNet article explain how to obtain the current locations of those special folders, but they don't explain how to change them. This type of woefully inadequate documentation only makes the problem worse."

Hey, wow, a little message in a registry key and a magazine article are now "documentation"! The TechNet article is historical background. And the registry key is just a gentle nudge. Neither is documentation. It's not like I'm going to put a complete copy of the documentation into a registry key. Documentation lives in places like MSDN.

But it seems that some people need more than a nudge; they need a shove. Let's see, we're told that the functions for obtaining the locations of known folders are SHGetFolderPath and its more modern counterpart SHGetKnownFolderPath. I wonder what the names of the functions for modifying those locations might be?

Read more: The old new thing

The day made of glass - Future of communication

Posted by jasper22 at 14:38

Can you imagine organizing your daily schedule with a few touches on your bathroom mirror? Chatting with far-away relatives through interactive video on your kitchen counter? Reading a classic novel on a whisper-thin piece of flexible glass?

Corning is not only imagining those scenarios – the company is engaged in research that could bring them alive in the not-too-distant future. You can get a glimpse of Corning’s vision in the new video, “A Day Made of Glass” above.

Corning Chairman and CEO Wendell Weeks says Corning’s vision for the future includes a world in which myriad ordinary surfaces transform “from one-dimensional utility into sophisticated electronic devices.”

The video depicts a world in which interactive glass surfaces help you stay connected through seamless delivery of real-time information – whether you’re working, shopping, eating, or relaxing.

“While we’re not saying that it will develop exactly as we’ve envisioned,” Wendell says, “we do know that this world is being created as we speak.”

Glass is the essential enabling material of this new world. “This is a visual world – so transparency is a must,” explains Wendell. But that’s just the beginning. Ubiquitous displays require materials that are flexible, durable, stable under the toughest of environmental conditions, and have a cool, touch-friendly aesthetic. And not just any glass will do. This world requires materials that are strong, yet thin and lightweight; that can enable complex electronic circuits and nano functionality; that can scale for very large applications, and that are also environmentally friendly. This world calls for the kind of specialty glass made by Corning.

Such real-time information also depends on communications networks with massive bandwidth capacity – meaning new opportunities for Corning to apply its optical communications expertise to customers’ tough challenges.

Read more: ImpactLabs

C++0x: The Dawning of a New Standard

Posted by jasper22 at 14:11

It's been 10 years since the first ISO C++ standard, and 2009 will bring us the second. The new standard will support multithreading, with a new thread library. Find out how this will improve porting code, and reduce the number of APIs and syntaxes you use.

In this special report, Internet.com delves into the new features being discussed by the standards team. Learn how these new features will revolutionize the way you code.

Special report topics include:

Overview: C++ Gets an Overhaul

Easier C++: An Introduction to Concepts

Simpler Multithreading in C++0x

The State of the Language: An Interview with Bjarne Stroustrup

Timeline: C++ in Retrospect

Read more: eBook library

A simple way to run C# code from bat file

Posted by jasper22 at 14:10

I read an interesting article demonstrating how to run a bat file with embedded C# code. I'm reposting the example here because the original post is written in Russian. Although there are other, more powerful solutions and frameworks for the same goal (e.g. PowerShell), I think the approach presented by the author is useful in many scenarios.

/*
@echo off && cls
REM Pick a C# compiler; later matches override earlier ones, so the newest installed framework wins.
set WinDirNet=%WinDir%\Microsoft.NET\Framework
IF EXIST "%WinDirNet%\v2.0.50727\csc.exe" set csc="%WinDirNet%\v2.0.50727\csc.exe"
IF EXIST "%WinDirNet%\v3.5\csc.exe" set csc="%WinDirNet%\v3.5\csc.exe"
IF EXIST "%WinDirNet%\v4.0.30319\csc.exe" set csc="%WinDirNet%\v4.0.30319\csc.exe"
REM Compile this very file as C#, run the resulting exe, then delete it.
%csc% /nologo /out:"%~0.exe" %0
"%~0.exe"
del "%~0.exe"
exit
*/

class HelloWorld
{
    static void Main()
    {

Read more: DevIntelligence

IIS7 Native API (C++) Starter Kit

Posted by jasper22 at 14:09

Overview

To extend the server, IIS7 provides a new (C++) native core server API, which replaces the ISAPI filter and extension API from previous IIS releases. The new API features object-oriented development with an intuitive object model, provides more control over request processing, and uses simpler design patterns to help you write robust code.

NOTE: The IIS7 native (C++) server API is declared in the Platform SDK httpserv.h header file. You must obtain this SDK and register it with Visual Studio in order to compile this module.

Features

This starter kit is offered to get you started with writing your new C++ HTTP module for IIS7. In this package, you have the following:

Readme to assist in setting up your Dev Environment

Walkthrough building a simple sample module

Benefits

For many, using the ISAPI framework was difficult and error-prone after deployment. In IIS7, this framework is still supported but is replaced by the new native HTTP API documented in the Windows Vista SDK (see httpserv.h) and provides robust extensibility capability for developers.

The new C++ API in IIS7 gives developers the ability to tie directly into the native core server using a module built with this starter kit.

Read more: IIS

"Cool" button creation in Expression Blend Tutorial

Posted by jasper22 at 14:06

In this article we will look at some of the ways to create “cool” or gel buttons in Expression Blend. If you look at the default look and feel of a WPF button in Expression Blend or Visual Studio, you will notice that it doesn’t look “cool”. It’s still that dinosaur chrome looking button control, whereas Expression Blend and Windows Presentation Foundation (WPF) are not just about functionality but also about enhancing User eXperience (UX). So to make your control “look and feel cool” you have to customize it, in other words, skin the control and style it the way you want!

Read more: Greg's Cool [Insert Clever Name] of the Day

Named Pipes in WCF are named but not by you (and how to find the actual windows object name)

Posted by jasper22 at 13:03

If the Windows Communication Foundation (WCF) implementation has its own idiosyncrasies, the named pipes provider is the champion. First, let's start with the name of the provider. Named pipes in Windows can be used to communicate between processes on the same machine or between different machines across a network. WCF implements only the on-machine part. A named pipe's name has the format \\ServerName\pipe\PipeName, and for on-machine pipes you can use \\.\pipe\PipeName. WCF, if you remember, uses the URI format net.pipe://host/path, but contrary to appearances the actual pipe name (the Windows object) will not be anything like \\host\pipe\path; it is a randomly generated GUID that will be different every time you start your host. When troubleshooting a TCP host/client in WCF, you can simply use netstat -ano to verify whether the port is there and which process is listening on it. If it is not listening, you know that the host is either not running or running on a different port. For named pipes, you will not be able to tell whether the host is listening, because you don't have the pipe name.

I will explain how a WCF client can figure out the pipe name. Instead of writing a very large paragraph no one will read, let's put it all in perspective with a scenario: I have created a host with a named pipe binding. The endpoint is net.pipe://localhost/TradeService/Service1. I deploy my solution on my server as a Windows Service, and one day I cannot connect to my host. I check the ABC (Address-Binding-Contract) of my client and it is as expected. The same is true on the host. My objective is to identify whether the named pipe is available.

First I download the Sysinternals tools (http://technet.microsoft.com/en-us/sysinternals/default.aspx) and install everything in c:\sysinternals. Sysinternals includes a tool to list all pipes available on the machine. I start a new command prompt with administrator privileges, move to my Sysinternals folder, and run PipeList.exe.

Read more: Rodney Viana's (MSFT) Blog

Loading Assemblies off Network Drives

Posted by jasper22 at 13:01

.NET 4.0 introduces some nice changes that make it easier to run .NET EXE applications directly off network drives. Specifically, the default behavior of previous versions, which refused to load assemblies from remote network shares unless CAS policy was adjusted and which has caused an immense amount of pain, has been dropped, and .NET EXEs now behave more like standard Win32 applications that can load resources easily from local network drives and more easily from other trusted remote locations.

Unfortunately if you’re using COM Interop or you manually load your own instances of the .NET Runtime these new Security Policy rules don’t apply and you’re still stuck with the old CAS policy that refuses to load assemblies off of network shares. Worse, CASPOL configuration doesn’t seem to work the way it used to either so you have a worst case scenario where you have assemblies that won’t load off net shares and no easy way to adjust the policy to get them to load.

However, there’s a new quick workaround, via a simple .config switch:

<configuration>
  <startup>
    <supportedRuntime version="v4.0.30319"/>
    <!-- supportedRuntime version="v2.0.50727"/-->
  </startup>
  <runtime>
    <loadFromRemoteSources enabled="true"/>
  </runtime>
</configuration>

This simple .config file key setting allows overriding the possible security sandboxing of assemblies loaded from remote locations. This key is new for .NET 4.0, but oddly I found that it also works with .NET 2.0 when 4.0 is installed (which seems a bit strange). When I force the runtime used to 2.0 (using the supporteRuntime version key plus explicit loading .NET 2.0 and actively checking the version of the loaded runtime) I still was able to load an assembly off a network drive. Removing the key or setting it to false again failed those same loads in both 2.x and 4.x runtimes.

So this is a nice addition for those of us stuck doing Interop scenarios. It is especially useful for one of my tools, a Web Service proxy generator for FoxPro that uses auto-generated .NET Web Service proxies to call Web Services easily. I would say a good 50% of the support requests for it had to do with people trying to load assemblies off a network share. The gyrations of going through CASPOL configuration put many of them off altogether, and they chose a different solution. This much simpler fix is a big improvement.

Read more: Rick Strahl's Web Log

Debugging WPF and Silverlight Binding Errors In Visual Studio

Posted by jasper22 at 12:55

Recently I was working with a Microsoft Silverlight project, debugging a XAML binding error. After a few hours of searching, I found a blog describing how to alter the WPF Trace Settings to enable a more verbose output while debugging.

After changing the trace level, you will see more detailed output in the debugger's Output window. Below is a screenshot showing how to enable the proper settings.

Read more: Falconer developer

Detecting Certificate Authority compromises and web browser collusion

Posted by jasper22 at 12:54

The Tor Project has long understood that the certification authority (CA) model of trust on the internet is susceptible to various methods of compromise. Without strong anonymity, the ability to perform targeted attacks with the blessing of a CA key is serious. In the past, I’ve worked on attacks relating to SSL/TLS trust models and for quite some time, I’ve hunted for evidence of non-academic CA compromise in the wild.

I’ve also looked for special kinds of cooperation between CAs and browsers. Proof of collusion will give us facts. It will also give us a real understanding of the faith placed in the strength of the underlying systems.

Does certificate revocation really work? No, it does not. How much faith does a vendor actually put into revocation, when verifiable evidence of malice is detected or known? Not much, and that’s the subject of this writing.

Last week, a smoking gun came into sight: A Certification Authority appeared to be compromised in some capacity, and the attacker issued themselves valid HTTPS certificates for high-value web sites. With these certificates, the attacker could impersonate the identities of the victim web sites or other related systems, probably undetectably for the majority of users on the internet.

I watch the Chromium and Mozilla Firefox projects carefully, because they are so important to the internet infrastructure. On the evening of 16 March, I noticed a very interesting code change to Chromium: revision 78478, Thu Mar 17 00:48:21 2011 UTC.

In this revision, the developers added X509Certificate::IsBlacklisted, which returns true if an HTTPS certificate has one of these particular serial numbers:

047ecbe9fca55f7bd09eae36e10cae1e

d8f35f4eb7872b2dab0692e315382fb0

b0b7133ed096f9b56fae91c874bd3ac0

9239d5348f40d1695a745470e1f23f43

d7558fdaf5f1105bb213282b707729a3

f5c86af36162f13a64f54f6dc9587c06

A comment marks the first as "Not a real certificate. For testing only." but we don’t know if this means the other certificates are or are not also for testing.

With just these serial numbers, we are not able to learn much about the certificates that Chromium now blocks. To get more information, I started the crlwatch project. Nearly every certificate contains a reference to a Certificate Revocation List (CRL), a list of certificates that the CA has revoked for whatever reason. In theory, this means that an attacker is unable to tamper with the certificate to prevent revocation, as the browser will check the CRL it finds in the certificate. In practice, the attacker simply needs to tamper with the network, which they are already able to do if they are performing an SSL/TLS Machine-In-The-Middle attack. Even if an attacker has a certificate, they generally are unable to modify it without breaking the digital signature issued by the CA. That CA signature is what gives the certificate value to an attacker, and tampering takes the attacker back to square zero.

So, while investigating these serials, we clearly lack the CRL distribution point, since the Chrome source does not include it. However, the project that I announced on March 17th, crlwatch, was written specifically to help find who issued, and potentially revoked, the serial numbers in question. By matching the serial numbers found in the Chrome source against the serial numbers of revoked certificates, we’re able to link specific serials to specific CA issuers. The more serial numbers we match in revocation lists, the higher the probability that we have found the CA that issued the certificates.
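The matching step can be pictured as ranking issuers by how many blacklisted serials appear on each issuer's CRL. This is a minimal sketch, not crlwatch's actual code: it assumes the CRLs have already been downloaded and parsed into hex serial strings, and the issuer names used with it are made up. Serials are compared as integers so that formatting differences such as a leading `00` don't hide a match.

```python
from typing import Dict, Iterable, List, Tuple


def rank_issuers(blacklisted: Iterable[str],
                 revoked_by_issuer: Dict[str, Iterable[str]]) -> List[Tuple[str, int]]:
    """Return (issuer, matches) pairs, most matches first, omitting issuers
    whose CRL contains none of the blacklisted serials."""
    # Compare as integers, not strings, so "00d8f3..." equals "d8f3...".
    wanted = {int(s, 16) for s in blacklisted}
    ranking = []
    for issuer, revoked in revoked_by_issuer.items():
        matches = len(wanted & {int(s, 16) for s in revoked})
        if matches:
            ranking.append((issuer, matches))
    # More matched serials means a more likely issuer.
    ranking.sort(key=lambda pair: pair[1], reverse=True)
    return ranking
```

With, say, `{"Example CA 1": [...], "Example CA 2": [...]}` built from parsed CRLs, the first entry of the result is the best candidate issuer.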

About twelve hours after the above patch was pushed into source control, on Thursday, March 17, 2011 at 13:00, Google announced an important Chrome update that involved HTTPS certificate issues.

This alone is mostly uninteresting until we notice that it is not isolated to Google. Mozilla pushed out two patches of interest:

rev-f6215eef2276

rev-55f344578932

The complete changeset is semi-informative. Mozilla references a private bug in that fix that Mozilla will hopefully disclose. Similar to Chromium, the Mozilla patches create a list of certificate serial numbers that will be treated as invalid. However, the serial numbers from the Mozilla patches are different:

009239d5348f40d1695a745470e1f23f43

00d8f35f4eb7872b2dab0692e315382fb0

72032105c50c08573d8ea5304efee8b0

00b0b7133ed096f9b56fae91c874bd3ac0

00e9028b9578e415dc1a710a2b88154447

00d7558fdaf5f1105bb213282b707729a3

047ecbe9fca55f7bd09eae36e10cae1e

00f5c86af36162f13a64f54f6dc9587c06

392a434f0e07df1f8aa305de34e0c229

3e75ced46b693021218830ae86a82a71
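One quick way to see how different the two lists really are is to compare them as integers rather than as strings. The sketch below copies the serials from both lists above. The `der_integer_hex` helper reflects my assumption about the leading `00`: DER encodes a positive INTEGER with an extra zero byte when the top bit of the first byte is set, which would explain why only some of the Mozilla entries carry it.

```python
CHROMIUM_SERIALS = [
    "047ecbe9fca55f7bd09eae36e10cae1e",
    "d8f35f4eb7872b2dab0692e315382fb0",
    "b0b7133ed096f9b56fae91c874bd3ac0",
    "9239d5348f40d1695a745470e1f23f43",
    "d7558fdaf5f1105bb213282b707729a3",
    "f5c86af36162f13a64f54f6dc9587c06",
]

MOZILLA_SERIALS = [
    "009239d5348f40d1695a745470e1f23f43",
    "00d8f35f4eb7872b2dab0692e315382fb0",
    "72032105c50c08573d8ea5304efee8b0",
    "00b0b7133ed096f9b56fae91c874bd3ac0",
    "00e9028b9578e415dc1a710a2b88154447",
    "00d7558fdaf5f1105bb213282b707729a3",
    "047ecbe9fca55f7bd09eae36e10cae1e",
    "00f5c86af36162f13a64f54f6dc9587c06",
    "392a434f0e07df1f8aa305de34e0c229",
    "3e75ced46b693021218830ae86a82a71",
]


def der_integer_hex(serial: int) -> str:
    """Hex of a positive INTEGER's DER content bytes: minimal big-endian
    bytes, plus a leading 00 when the top bit is set (keeps it positive)."""
    length = max(1, (serial.bit_length() + 7) // 8)
    raw = serial.to_bytes(length, "big")
    if raw[0] & 0x80:
        raw = b"\x00" + raw
    return raw.hex()


def compare(a, b):
    """Return (in both, only in a, only in b) as sets of integer serials."""
    set_a = {int(s, 16) for s in a}
    set_b = {int(s, 16) for s in b}
    return set_a & set_b, set_a - set_b, set_b - set_a


if __name__ == "__main__":
    common, only_chromium, only_mozilla = compare(CHROMIUM_SERIALS, MOZILLA_SERIALS)
    print(len(common), len(only_chromium), len(only_mozilla))  # prints: 6 0 4
    for serial in sorted(only_mozilla):
        print(der_integer_hex(serial))
```

Read this way, every Chromium serial is also on the Mozilla list, and Mozilla blocks four additional serials.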

Read more: Tor

Revocation doesn't work (18 Mar 2011)

Posted by jasper22 at 12:53

When an HTTPS certificate is issued, it's typically valid for a year or two. But what if something bad happens? What if the site loses control of its key?

In that case you would really need a way to invalidate a certificate before it expires and that's what revocation does. Certificates contain instructions for how to find out whether they are revoked and clients should check this before accepting a certificate.

There are basically two methods for checking if a certificate is revoked: certificate revocation lists (CRLs) and OCSP. CRLs are long lists of serial numbers that have been revoked, while OCSP only deals with a single certificate. But the details are unimportant: both are methods of getting signed and timestamped statements about the status of a certificate.

But both methods rely on the CA being available to answer CRL or OCSP queries. If a CA went down then it could take out huge sections of the web. Because of this, clients (and I'm thinking mainly of browsers) have historically been forgiving of an unavailable CA.
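That forgiving behavior amounts to a "soft-fail" policy. The hypothetical function below (not any browser's actual code) makes the trade-off explicit: under soft-fail, a revocation check that cannot be completed is treated the same as a good one.

```python
from enum import Enum


class RevocationStatus(Enum):
    GOOD = "good"
    REVOKED = "revoked"
    UNAVAILABLE = "unavailable"  # CA unreachable, timeout, HTTP 500, ...


def accept_certificate(status: RevocationStatus, hard_fail: bool = False) -> bool:
    """Decide whether to trust a certificate given its revocation check result."""
    if status is RevocationStatus.REVOKED:
        return False
    if status is RevocationStatus.UNAVAILABLE:
        # Soft-fail keeps the web working when a CA is down, but an attacker
        # who can block the check gets exactly the same answer.
        return not hard_fail
    return True
```

An attacker who can block revocation traffic turns every check into `UNAVAILABLE`, so only a hard-fail client would notice anything.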

But an event this week gave me cause to wonder how well revocation actually works. So I wrote the world's dumbest HTTP proxy. It's just a convenient way to intercept network traffic from a browser. HTTPS requests use the CONNECT method, which is implemented. All other requests (including all revocation checks) simply return a 500 error. This isn't even as advanced as Moxie's trick of returning an OCSP response with status 3 (tryLater).
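A proxy along those lines fits in a few dozen lines of Python. This is my own minimal sketch, not the author's proxy: it tunnels CONNECT requests and answers every other request, including plain-HTTP OCSP and CRL fetches, with a 500. It assumes the browser waits for the `200` reply before sending TLS data, the default port 8080 is arbitrary, and it is meant only for local testing.

```python
import socket
import threading

ERROR_500 = (b"HTTP/1.1 500 Internal Server Error\r\n"
             b"Content-Length: 0\r\nConnection: close\r\n\r\n")


def _pipe(src: socket.socket, dst: socket.socket) -> None:
    """Copy bytes one way until src closes, then half-close dst."""
    try:
        while True:
            data = src.recv(65536)
            if not data:
                break
            dst.sendall(data)
    except OSError:
        pass
    finally:
        try:
            dst.shutdown(socket.SHUT_WR)
        except OSError:
            pass


def _read_head(conn: socket.socket) -> bytes:
    """Read until the blank line that ends the request headers."""
    buf = b""
    while b"\r\n\r\n" not in buf:
        chunk = conn.recv(4096)
        if not chunk:
            break
        buf += chunk
    return buf


def handle_client(conn: socket.socket) -> None:
    with conn:
        request_line = _read_head(conn).split(b"\r\n", 1)[0].decode("latin-1")
        parts = request_line.split()
        if len(parts) < 2 or parts[0].upper() != "CONNECT":
            # Everything that is not CONNECT, including revocation checks, fails.
            conn.sendall(ERROR_500)
            return
        host, _, port = parts[1].rpartition(":")
        try:
            upstream = socket.create_connection((host, int(port)), timeout=10)
            upstream.settimeout(None)  # timeout only for connecting, not for the tunnel
        except (OSError, ValueError):
            conn.sendall(ERROR_500)
            return
        with upstream:
            conn.sendall(b"HTTP/1.1 200 Connection established\r\n\r\n")
            back = threading.Thread(target=_pipe, args=(upstream, conn), daemon=True)
            back.start()
            _pipe(conn, upstream)
            back.join()


def serve(host: str = "127.0.0.1", port: int = 8080) -> socket.socket:
    """Accept connections in a daemon thread and return the listening socket."""
    server = socket.create_server((host, port))

    def accept_loop() -> None:
        while True:
            try:
                conn, _ = server.accept()
            except OSError:
                return
            threading.Thread(target=handle_client, args=(conn,), daemon=True).start()

    threading.Thread(target=accept_loop, daemon=True).start()
    return server
```

To try it, call `serve()`, keep the main thread alive (for example with `threading.Event().wait()`), and point the browser's proxy settings at 127.0.0.1:8080.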

To be clear, the proxy is just for testing. An actual attack would intercept TCP connections. The results:

Firstly, IE 8 on Windows 7:

No indication of a problem at all even though the revocation checks returned 500s. It's even EV.

(Aside: I used Wireshark to confirm that revocation checks weren't bypassing the proxy. It's also the case that SChannel can cache revocation information. I don't know how to clear this cache, so I simply used a site that I hadn't visited before. Further confirming that a cache wasn't in effect, Chrome uses SChannel to verify certificates and Chrome knew that there was an issue.)

Firefox 3.6 on Windows 7, no indication:

Read more: ImperialViolet

Hacker Spies Hit Security Firm RSA

Posted by jasper22 at 12:27

Top security firm RSA Security revealed on Thursday that it’s been the victim of an “extremely sophisticated” hack.

The company said in a note posted on its website that the intruders succeeded in stealing information related to the company’s SecurID two-factor authentication products. SecurID adds an extra layer of protection to a login process by requiring users to enter a secret code number displayed on a keyfob, or in software, in addition to their password. The number is cryptographically generated and changes every 30 seconds.
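The idea of a code that changes every 30 seconds can be illustrated with the open TOTP standard (RFC 6238), which derives a short code from a shared secret and the current time step. SecurID uses RSA's own proprietary algorithm, so this is not how SecurID computes its numbers; it is only a sketch of the general time-based technique, using HMAC-SHA1 as in the RFC's reference test vectors.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, for_time: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """RFC 6238 TOTP with HMAC-SHA1 (the open standard, not SecurID's algorithm)."""
    if for_time is None:
        for_time = time.time()
    counter = int(for_time // step)  # the same value for a whole `step` window
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # RFC 4226 dynamic truncation
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)
```

Token and server share the secret and a clock, so both compute the same code for the current window; the "extra layer" is that a stolen password alone is not enough.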

“While at this time we are confident that the information extracted does not enable a successful direct attack on any of our RSA SecurID customers,” RSA wrote on its blog, “this information could potentially be used to reduce the effectiveness of a current two-factor authentication implementation as part of a broader attack. We are very actively communicating this situation to RSA customers and providing immediate steps for them to take to strengthen their SecurID implementations.”

As of 2009, RSA counted 40 million customers carrying SecurID hardware tokens, and another 250 million using software. Its customers include government agencies.

RSA CEO Art Coviello wrote in the blog post that the company was “confident that no other … products were impacted by this attack. It is important to note that we do not believe that either customer or employee personally identifiable information was compromised as a result of this incident.”

The company also provided the information in a document filed with the Securities and Exchange Commission on Thursday, which includes a list of recommendations for customers who might be affected. See below for a list of the recommendations.

Read more: Wired

The architecture of VLC media framework

Posted by jasper22 at 12:21

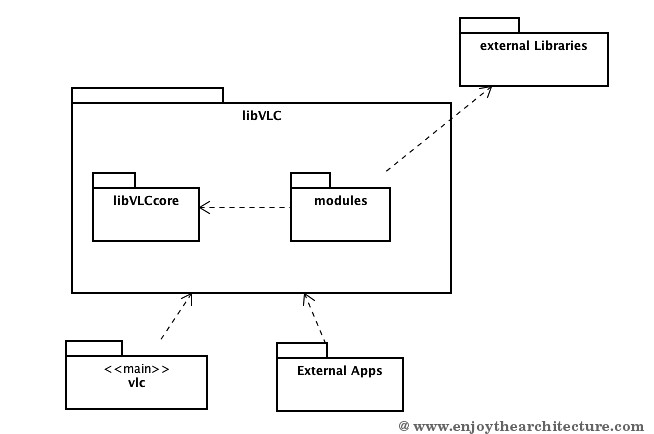

VLC is a free and open source cross-platform multimedia player and framework that plays most multimedia files as well as DVDs, Audio CDs, VCDs, and various streaming protocols. Technically, it is a software package that handles media on a computer and through a network. It offers an intuitive API and a modular architecture that makes it easy to add support for new codecs, container formats and transmission protocols. The best-known package from VLC is the player, which is commonly used on Windows, Linux and OS X.
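The modular idea can be illustrated with a small registry in which each module advertises a capability (such as demux or decoder) plus a score, and the core tries the highest-scoring module that accepts the input. This is a hypothetical Python sketch loosely modeled on how VLC's C modules declare a capability and score; the module names, scores and probe functions are made up.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Module:
    name: str
    capability: str               # e.g. "demux", "decoder", "access"
    score: int                    # higher scores are tried first
    probe: Callable[[str], bool]  # True if the module can handle the input


class ModuleBank:
    def __init__(self) -> None:
        self._modules: List[Module] = []

    def register(self, module: Module) -> None:
        self._modules.append(module)

    def need(self, capability: str, target: str) -> Optional[Module]:
        """Try modules with the capability, best score first, until one accepts."""
        candidates = sorted((m for m in self._modules if m.capability == capability),
                            key=lambda m: m.score, reverse=True)
        for module in candidates:
            if module.probe(target):
                return module
        return None
```

Supporting a new format then means registering one more module; nothing in the core changes.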

According to the VideoLAN website, this project is quite complex to understand:

VLC media player is a large and complex piece of software. It also uses a large number of dependencies.

Being open source allows VLC development to benefit from a large community of developers worldwide.

However, entering a project such as VLC media player can be long and complex for new developers.

The code is written by expert C hackers and is sometimes very hard to understand; a book is probably needed to explain how VLC works. I’ll try to summarize in a few words what I found in my digging. Let’s start with the high-level architecture:

Read more: enjoyTheArchitecture

Synchronization Contexts in WCF

Posted by jasper22 at 11:50

One of the more useful features of the Windows® Communication Foundation (WCF) is its reliance on the Microsoft® .NET Framework synchronization context to marshal calls to the service instance (or to a callback object). This mechanism provides for both productivity-oriented development and for powerful extensibility. In this column, I describe briefly what synchronization contexts are and how WCF uses them, and then proceed to demonstrate various options for extending WCF to use custom synchronization contexts, both programmatically and declaratively. In addition to seeing the merits of custom synchronization contexts, you will also see some advanced .NET programming as well as WCF extensibility techniques.

What Are .NET Synchronization Contexts?

The .NET Framework 2.0 introduced a little-known feature called the synchronization context, defined by the class SynchronizationContext in the System.Threading namespace:

```csharp
public delegate void SendOrPostCallback(object state);

public class SynchronizationContext
{
   public virtual void Post(SendOrPostCallback callback,object state);
   public virtual void Send(SendOrPostCallback callback,object state);
   public static void SetSynchronizationContext(SynchronizationContext context);
   public static SynchronizationContext Current
   {get;}
   //More members
}
```

The synchronization context is stored in the thread local storage (TLS). Every thread created with the .NET Framework 2.0 may have a synchronization context (similar to an ambient transaction) obtained via the static Current property of SynchronizationContext. Current may return null if the current thread has no synchronization context. The synchronization context is used to bounce a method call between a calling thread and a target thread or threads, in case the method cannot execute on the original calling thread. The calling thread wraps the method it wants to marshal to the other thread (or threads) with a delegate of the type SendOrPostCallback, and provides it to the Send or Post methods, for synchronous or asynchronous execution respectively. You can associate a synchronization context with your current thread by calling the static method SetSynchronizationContext.
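The Post/Send mechanics can be sketched outside .NET to make them concrete. The class below is a hypothetical Python analogue, not the .NET implementation: a thread-local slot plays the role of `Current`/`SetSynchronizationContext`, the owner thread runs a queue-draining loop (a stand-in for a message loop), `post` returns immediately and `send` blocks until the owner thread has run the callback.

```python
import queue
import threading
from typing import Any, Callable, Optional

SendOrPostCallback = Callable[[Any], None]

# Thread-local slot, mirroring how .NET keeps the current context per thread.
_tls = threading.local()


class SingleThreadSyncContext:
    """Marshals callbacks onto the single thread that calls run()."""

    def __init__(self) -> None:
        self._queue: "queue.Queue" = queue.Queue()

    @staticmethod
    def current() -> Optional["SingleThreadSyncContext"]:
        # Like SynchronizationContext.Current: None if nothing was installed.
        return getattr(_tls, "context", None)

    @staticmethod
    def set_current(context: Optional["SingleThreadSyncContext"]) -> None:
        # Like SetSynchronizationContext: affects only the calling thread.
        _tls.context = context

    def post(self, callback: SendOrPostCallback, state: Any) -> None:
        # Asynchronous: queue the call and return immediately.
        self._queue.put((callback, state, None))

    def send(self, callback: SendOrPostCallback, state: Any) -> None:
        # Synchronous: queue the call and block until the owner thread ran it.
        # (Calling send from the owner thread itself would deadlock here.)
        done = threading.Event()
        self._queue.put((callback, state, done))
        done.wait()

    def run(self) -> None:
        # The owner thread's "message loop"; returns after stop() is called.
        while True:
            item = self._queue.get()
            if item is None:
                return
            callback, state, done = item
            try:
                callback(state)
            finally:
                if done is not None:
                    done.set()

    def stop(self) -> None:
        self._queue.put(None)
```

A worker thread that holds a reference to the context can call `post` to hand work back to the owner thread, which is the same shape as a worker thread updating a UI through the UI thread's context.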

By far, the most common use of a synchronization context is with UI updates. With all multithreaded Windows technologies, from MFC to Windows Forms to WPF, only the thread that created a window is allowed to update it by processing its messages. This constraint has to do with the underlying use of the Windows message loop and the thread messaging architecture. The messaging architecture creates a problem when developing a multithreaded UI application—you would like to avoid blocking the UI when executing lengthy operations or receiving callbacks. This, of course, necessitates the use of worker threads, yet those threads cannot update the UI directly because they are not the UI thread.

In order to address this problem in the .NET Framework 2.0, the constructor of any Windows Forms-based control or form checks to see if the thread it is running on has a synchronization context, and if it does not have one, the constructor attaches a new synchronization context (called WindowsFormsSynchronizationContext). This dedicated synchronization context converts all calls to its Post or Send methods into Windows messages and posts them to the UI thread message queue to be processed on the correct thread. The Windows Forms synchronization context is the underlying technology behind the commonly used BackgroundWorker helper control.

WCF and Synchronization Contexts

By default, all WCF service calls (and callbacks) execute on threads from the I/O Completion thread pool. That pool has 1,000 threads by default, none of them under the control of your application. Now imagine a service that needs to update some user interface. The service should not access the form, control or window directly because it is on the wrong thread. To deal with that, the ServiceBehaviorAttribute offers the UseSynchronizationContext property, defined as:

```csharp
[AttributeUsage(AttributeTargets.Class)]
public sealed class ServiceBehaviorAttribute : ...
{
   public bool UseSynchronizationContext
   {get;set;}
   //More members
}
```

The default value of UseSynchronizationContext is set to true. To determine which synchronization context the service should use, WCF looks at the thread that opened the host. If that thread has a synchronization context and UseSynchronizationContext is set to true, then WCF automatically marshals all calls to the service to that synchronization context. There is no explicit interaction with the synchronization context required of the developer. WCF does that by providing the dispatcher of each endpoint with a reference to the synchronization context, and the dispatcher uses that synchronization context to dispatch all calls.

For example, suppose the service MyService (defined in Figure 1) needs to update some UI on the form MyForm. Because the host is opened after the form is constructed, the opening thread already has a synchronization context, and so all calls to the service will automatically be marshaled to the correct UI thread.

Figure 1 Using the UI Synchronization Context

```csharp
[ServiceContract]
interface IMyContract
{...}

class MyService : IMyContract
{
   /* some code to update MyForm */
}

class MyForm : Form
{...}

static class Program
{
   static void Main()
   {
      Form form = new MyForm();//Sync context established here
      ServiceHost host = new ServiceHost(typeof(MyService));
      host.Open();
      Application.Run(form);
      host.Close();
   }
}
```

If you were to simply open the host in Main and use the code generated by the Windows Forms designer, you would not benefit from the synchronization context, since the host is opened without it present:

```csharp
//No automatic use of synchronization context
static void Main()
{
   ServiceHost host = new ServiceHost(typeof(MyService));
   host.Open();
   Application.Run(new MyForm());//Synchronization context established here
   host.Close();
}
```

Service Custom Synchronization Context

Utilizing a custom synchronization context by your service or resources has two aspects to it: implementing the synchronization context and then installing it.

Read more: MSDN magazine

WCF Service InstanceContextMode and ConcurrencyMode combinations

Posted by jasper22 at 11:49

What do the InstanceContextMode and ConcurrencyMode settings of a WCF service do?

A short MSDN description of this describes it as follows:

“A session is a correlation of all messages sent between two endpoints. Instancing refers to controlling the lifetime of user-defined service objects and their related InstanceContext objects. Concurrency is the term given to the control of the number of threads executing in an InstanceContext at the same time.” from http://msdn.microsoft.com/en-us/library/ms731193.aspx

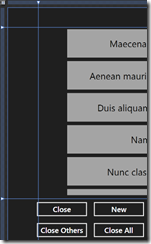

I found a nice article with test screenshots of different combinations of InstanceContextMode and ConcurrencyMode used together.

InstanceContextMode takes one of the InstanceContextMode enum values (PerCall, PerSession or Single). It specifies when an instance of the service is created and whether that instance is a singleton.

ConcurrencyMode specifies how each separate instance can be accessed concurrently. For example, if ConcurrencyMode is set to Multiple, the same instance of the service can service multiple requests simultaneously. When it is set to Single, each instance handles only one request at a time, although different instances can still handle requests simultaneously.

Couple of examples:

- InstanceContextMode = Single, ConcurrencyMode = Single: Only a single instance of the service is created, and only one request can be serviced at a time by this instance.

- InstanceContextMode = Single, ConcurrencyMode = Multiple: Only a single instance of the service is created, but multiple requests can be serviced at the same time by this one instance.

- InstanceContextMode = PerSession, ConcurrencyMode = Single: An instance of the service is created for each session. These instances can service requests simultaneously, but only one request at a time per instance.

- InstanceContextMode = PerSession, ConcurrencyMode = Multiple: An instance is created for each session, and each of these instances can perform multiple tasks simultaneously.

From this, you can see that it is not necessary to write thread-safe code when InstanceContextMode = PerSession and ConcurrencyMode = Single. It is, however, necessary to make your code thread-safe if you make concurrent calls from within the service itself or work with static objects in your application.
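The combinations above can be modeled with a toy dispatcher. This is a hypothetical Python sketch of the semantics, not WCF's implementation: the instance mode decides which instance a call gets, and a per-instance lock stands in for ConcurrencyMode.Single. Reentrant, the third ConcurrencyMode value, is not modeled.

```python
import threading
from enum import Enum
from typing import Any, Callable, Dict, Tuple


class InstanceContextMode(Enum):
    PER_CALL = "PerCall"
    PER_SESSION = "PerSession"
    SINGLE = "Single"


class ConcurrencyMode(Enum):
    SINGLE = "Single"
    MULTIPLE = "Multiple"


class Dispatcher:
    """Toy model of how the two settings combine; not WCF's code."""

    def __init__(self, factory: Callable[[], Any],
                 instance_mode: InstanceContextMode,
                 concurrency: ConcurrencyMode) -> None:
        self._factory = factory
        self._instance_mode = instance_mode
        self._concurrency = concurrency
        self._instances: Dict[str, Tuple[Any, threading.Lock]] = {}
        self._table_lock = threading.Lock()
        self.created = 0  # how many service instances were constructed

    def _instance_for(self, session_id: str) -> Tuple[Any, threading.Lock]:
        with self._table_lock:
            if self._instance_mode is InstanceContextMode.PER_CALL:
                self.created += 1  # a fresh instance for every call
                return self._factory(), threading.Lock()
            # Single: one shared key; PerSession: one key per session.
            key = "*" if self._instance_mode is InstanceContextMode.SINGLE else session_id
            if key not in self._instances:
                self.created += 1
                self._instances[key] = (self._factory(), threading.Lock())
            return self._instances[key]

    def dispatch(self, session_id: str, operation: Callable[[Any], Any]) -> Any:
        instance, lock = self._instance_for(session_id)
        if self._concurrency is ConcurrencyMode.SINGLE:
            with lock:  # at most one request at a time per instance
                return operation(instance)
        return operation(instance)  # Multiple: the instance must be thread-safe
```

With PerSession and Single, each instance is only ever entered by one request at a time, which is why the instance's own state needs no locking in that combination.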

Read more: JD Stuart's Blog

IKVM.Reflection Update

Posted by jasper22 at 09:53

Missing Members

To support mcs I previously added some limited support for missing members. This has now been extended substantially to allow full fidelity reading/writing of assemblies. Normally IKVM.Reflection behaves like System.Reflection in how it tries to resolve member and assembly references, but now it is possible to customize this to enable reading a module without resolving any dependencies.

Roundtripping

To test this version of IKVM.Reflection, I've roundtripped 1758 (non-C++/CLI) modules from the CLR GAC plus the Mono 2.10 directories on my system. Roundtripping here means reading in the module and writing it back out. The original module and resulting module are both disassembled with ildasm and the resulting IL files compared (after going through two dozen regex replacements to filter out some trivial differences).
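The comparison step can be sketched as "normalize both listings, then compare". This is a hypothetical Python sketch, not the author's roundtripping code: the real version used its own two dozen regexes, and the patterns below (the `// MVID:` and `// Image base:` comment lines and trailing whitespace) are only assumed examples of the kind of trivial differences involved.

```python
import re

# Hypothetical filters illustrating the kind of noise removed before comparing.
FILTERS = [
    # The module version id is a fresh GUID every time a module is written.
    (re.compile(r"^// MVID: \{[0-9A-Fa-f-]+\}$", re.MULTILINE), "// MVID: <removed>"),
    # The image base comment can differ between the original and the copy.
    (re.compile(r"^// Image base: 0x[0-9A-Fa-f]+$", re.MULTILINE), "// Image base: <removed>"),
    # Trailing whitespace is never significant.
    (re.compile(r"[ \t]+$", re.MULTILINE), ""),
]


def normalize_il(text: str) -> str:
    text = text.replace("\r\n", "\n")  # compare CRLF and LF output alike
    for pattern, replacement in FILTERS:
        text = pattern.sub(replacement, text)
    return text


def same_il(original_il: str, roundtripped_il: str) -> bool:
    """True if the two ildasm listings match once trivial noise is removed."""
    return normalize_il(original_il) == normalize_il(roundtripped_il)
```

Any difference that survives normalization points at a real fidelity problem in the reader or writer.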

The code I used for the roundtripping is an (d)evolution of the linker prototype code. It is available here. Note that it is very far from production quality, but does make for a good case study in how to use the advanced IKVM.Reflection functionality.

Unmanaged Exports

A cool CLR feature is the ability to export static managed methods as unmanaged DLL exports. The only languages that support this, AFAIK, are C++/CLI and ILASM, but now you can easily do it with IKVM.Reflection as well. Below is a Hello World example. Note that while this example exports a global method, you can export any static method. Note also that the string passed in must be ASCII and may be null, and that the ordinal must be a 16-bit value that is unique within the module.

```csharp
using IKVM.Reflection;
using IKVM.Reflection.Emit;

class Demo
{
    static void Main()
    {
        var universe = new Universe();
        var name = new AssemblyName("ExportDemo");
        var ab = universe.DefineDynamicAssembly(name, AssemblyBuilderAccess.Save);
        var modb = ab.DefineDynamicModule("ExportDemo", "ExportDemo.dll");
        var mb = modb.DefineGlobalMethod("HelloWorld", MethodAttributes.Static, null, null);
        var ilgen = mb.GetILGenerator();
        ilgen.EmitWriteLine("Hello World");
        ilgen.Emit(OpCodes.Ret);
        mb.__AddUnmanagedExport("HelloWorld", 1);
        modb.CreateGlobalFunctions();
        ab.Save("ExportDemo.dll", PortableExecutableKinds.Required32Bit, ImageFileMachine.I386);
    }
}
```

The resulting ExportDemo.dll can now be called from native code in the usual ways, for example:

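```cpp
#include <windows.h>

// Named HELLOPROC because <windows.h> already defines PROC with a different type.
typedef void (__stdcall * HELLOPROC)();

HMODULE hmod = LoadLibraryW(L"ExportDemo.dll");
HELLOPROC proc = hmod ? (HELLOPROC)GetProcAddress(hmod, "HelloWorld") : NULL;
if (proc != NULL) proc();
```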

Creating unmanaged exports is supported for I386 and AMD64. Itanium (IA64) support has not been implemented.

What's Missing

It should now be possible to write an ILDASM clone using IKVM.Reflection; however, a couple of things are still missing before you could write an ILASM clone:

- Function pointers (used by C++/CLI).

- API to create a missing type.

- Preserving interleaved modopt/modreq ordering.

- Various C++/CLI quirks (e.g. custom modifiers on local variable signatures).

- Ability to set file alignment.

Changes:

- Added support for using missing members in emitted assembly.

- If you build IKVM.Reflection with the STABLE_SORT symbol defined, all metadata sorting will be done in a stable way, thus retaining the order in which the items are defined.

Read more: IKVM.NET Weblog

Stored Procedure Definitions and Permissions

I wrote a post a while back that showed how you can grant execute permission ‘carte blanche’ to a database role in SQL Server. You can read that post here. This post builds on that concept of using database roles for groups of users and allocating permissions to the role. I recently had a situation where a tester wanted permission, for themselves and the rest of the testing team, to look at the definitions of all the stored procedures in a specific database, strangely enough for testing purposes. I thought for a while about how best to grant this permission. I did not want to grant the VIEW DEFINITION permission at the server level or even the database level; I just wanted to grant it for all the stored procedures that existed in the test database at that time. This is the solution I came up with:

First, I created a database role called db_viewspdef in the specific database:

```sql
CREATE ROLE [db_viewspdef]
GO
```

I then added the testers' Windows group to that role:

```sql
USE [AdventureWorks]
GO
EXEC sp_addrolemember N'db_viewspdef', N'DOM\TesterGroup'
GO
```

My next task was to get a list of all the stored procedures in the database. For this I used the following query against sys.objects:

```sql
SELECT *
FROM sys.objects
WHERE type = 'P'
ORDER BY name
```

I then thought about concatenating some code around the result set to allow SQL to generate the code for me, so I used:

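```sql
-- Build one GRANT VIEW DEFINITION statement per stored procedure
SELECT 'GRANT VIEW DEFINITION ON ' + QUOTENAME(s.name) + '.' + QUOTENAME(d.name)
       + ' TO [db_viewspdef];'
FROM sys.objects d
INNER JOIN sys.schemas s ON d.schema_id = s.schema_id
WHERE type = 'P'
ORDER BY d.name
```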

As you can see, I joined sys.objects to sys.schemas to get the schema-qualified name of every stored procedure in the AdventureWorks database. I switched the query output to text and copied the results from the results pane into a new query window. When I ran that query, permission to view the definition of each stored procedure currently in the database was granted.
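The generated script is plain string concatenation over (schema, procedure) pairs. The hypothetical Python sketch below shows the same idea outside SQL Server, for example in a deployment script; the procedure names are made up, and `quote_name` mirrors the default bracket quoting of T-SQL's QUOTENAME so that unusual names still produce valid statements.

```python
from typing import Iterable, List, Tuple


def quote_name(identifier: str) -> str:
    """Bracket-quote an identifier like T-SQL QUOTENAME(): wrap it in [] and
    double any closing bracket inside the name."""
    return "[" + identifier.replace("]", "]]") + "]"


def grant_view_definition(procedures: Iterable[Tuple[str, str]],
                          role: str = "db_viewspdef") -> List[str]:
    """One GRANT VIEW DEFINITION statement per (schema, procedure) pair."""
    return [
        f"GRANT VIEW DEFINITION ON {quote_name(schema)}.{quote_name(name)} "
        f"TO {quote_name(role)};"
        for schema, name in procedures
    ]
```

Because the list is a snapshot, procedures created later are not covered, which matches the goal of granting only for the procedures that existed at that time.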

Read more: The SQL DBA in the UK

Daily Chrome OS Builds for VMWare and VirtualBox Now Available

Posted by jasper22 at 15:55

Hexxeh has now made daily Chrome OS builds available to everyone for use in virtual machines. He has created both VMWare and VirtualBox versions of his Chrome OS Vanilla, which are snapshot builds of the Chromium OS code that the Chromium team works on every day.

If you’ve been frustrated because you did not get a Cr-48 or you could not get Chrome OS Vanilla to work on your own PC for hardware reasons, there is now an alternative with these new images that have been released today.

The instructions are pretty simple, according to Hexxeh.

To use this, just create a new virtual machine in VirtualBox, and when it asks whether you want to create a new hard drive or use an existing one, point it to the VDI file you downloaded and extracted. Using the VMWare downloads is even easier! Simply download, install VMWare Player and then double click the VMX file in the archive you downloaded.

Read more: thechromesource

Understanding the Role of Commanding in Silverlight 4 Applications

Posted by jasper22 at 15:36

I’ve had the opportunity to give a lot of presentations on the Model-View-ViewModel (MVVM) pattern lately, both publicly and internally for companies, and wanted to put together a post that covers some of the frequent questions I get about commanding. MVVM relies on four main pillars of technology: Silverlight data binding, ViewModel classes, messaging and commanding. Although Silverlight 4 provides some built-in support for commanding, I’ve found that a lot of people new to MVVM want more details on how it works and how to use it in MVVM applications.

If you're building Silverlight 4 applications then you've more than likely heard the term "commanding" used at a talk, in a forum or in talking with a WPF or Silverlight developer. What is commanding and why should you care about it? In a nutshell, it's a mechanism used in the Model-View-ViewModel (MVVM) pattern for communicating between a View (a Silverlight screen) and a ViewModel (an object containing data consumed by a View). It normally comes into play when a button is clicked and the click event needs to be routed to a ViewModel method for processing as shown next:

When I first learned about the MVVM pattern (see my blog post on the subject here if you're getting started with the pattern) there wasn't built-in support for commanding so I ended up handling button click events in the XAML code-behind file (View.xaml.cs in the previous image) and then calling the appropriate method on the ViewModel as shown next. There were certainly other ways to do it but this technique provided a simple way to get started.

```csharp
private void Button_Click(object sender, RoutedEventArgs e)
{
    //Grab ViewModel from LayoutRoot's DataContext
    HomeViewModel vm = (HomeViewModel)LayoutRoot.DataContext;
    //Route click event to UpdatePerson() method in ViewModel
    vm.UpdatePerson();
}
```

While this approach works, adding code into the XAML code-behind file wasn't what I wanted given that the end goal was to call the ViewModel directly without having a middle-man. I’m not against adding code into the code-behind file at all and think it’s appropriate when performing animations, transformations, etc. but in this case it’s kind of a waste. What's a developer to do? To answer this question let's examine the ICommand interface.
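The shape of a command object can be sketched before looking at the real interface. This is a hypothetical Python analogue, not Silverlight code: `RelayCommand` and `HomeViewModel` are illustrative names, with methods mirroring ICommand's Execute, CanExecute and CanExecuteChanged. In Silverlight 4, a Button's Command property would be bound to such an object on the ViewModel, so no code-behind handler is needed.

```python
from typing import Any, Callable, List, Optional


class RelayCommand:
    """Python analogue of an ICommand implementation: execute, can_execute
    and a can-execute-changed notification."""

    def __init__(self, execute: Callable[[Any], None],
                 can_execute: Optional[Callable[[Any], bool]] = None) -> None:
        self._execute = execute
        self._can_execute = can_execute
        self._handlers: List[Callable[["RelayCommand"], None]] = []

    def can_execute(self, parameter: Any = None) -> bool:
        return self._can_execute is None or self._can_execute(parameter)

    def execute(self, parameter: Any = None) -> None:
        if self.can_execute(parameter):
            self._execute(parameter)

    def add_can_execute_changed(self, handler: Callable[["RelayCommand"], None]) -> None:
        self._handlers.append(handler)

    def raise_can_execute_changed(self) -> None:
        # A bound button would re-query can_execute() and enable/disable itself.
        for handler in list(self._handlers):
            handler(self)


class HomeViewModel:
    """Hypothetical ViewModel exposing UpdatePerson() as a command."""

    def __init__(self) -> None:
        self.updates = 0
        self.is_dirty = False
        self.update_person_command = RelayCommand(
            lambda _: self.update_person(), lambda _: self.is_dirty)

    def update_person(self) -> None:
        self.updates += 1
```

The View only needs a reference to `update_person_command`; the click is routed straight to the ViewModel with no middle-man.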

Read more: Dan Wahlin's WebLog

A Silverlight Resizable TextBlock (and other resizable things)

Posted by jasper22 at 15:34

In this blog post I present a simple attached behaviour that uses a Thumb control within a Popup to adorn any UI element so that the user can re-size it.

A simple feature that has become quite popular on the web is to attach a small handle to text areas so that the user can resize them. This is useful if a user wants to add a large piece of text to a small comment form, for example. Interestingly, the Google Chrome browser makes all text areas resizable by default, which leads web developers to wonder how to turn this feature off for their website. I thought that this was a pretty useful feature, so I decided to implement a little attached behaviour that would do the same thing for Silverlight:

You can make any element resizable by setting the following attached property:

```xml
<TextBox Text="This is a re-sizeable textbox"
         local:ResizeHandle.Attach="True">
</TextBox>
```

The implementation of this behaviour is pretty straightforward. When the Attach property changes (that is, when it is set on an element), a Thumb and a Popup are created:

```csharp
private static Dictionary<FrameworkElement, Popup> _popups =
    new Dictionary<FrameworkElement, Popup>();

private static void OnAttachedPropertyChanged(DependencyObject d,
    DependencyPropertyChangedEventArgs e)
{
    FrameworkElement element = d as FrameworkElement;

    // Create a popup.
    var popup = new Popup();

    // and associate it with the element that can be re-sized
    _popups[element] = popup;

    // Add a thumb to the pop-up
    Thumb thumb = new Thumb()
    {
        Style = Application.Current.Resources["MyThumbStyle"] as Style
    };
    popup.Child = thumb;

    // add a relationship from the thumb to the target
    thumb.Tag = element;

    popup.IsOpen = true;
    thumb.DragDelta += new DragDeltaEventHandler(Thumb_DragDelta);
    element.SizeChanged += new SizeChangedEventHandler(Element_SizeChanged);
}
```

A static dictionary is used to relate the elements that have this property set to their respective Popup. The Thumb is styled via a Style that is looked up from the application resources. The following style re-templates the Thumb, replacing its visuals with an image:

```xml
<Application xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"
             x:Class="ResizableTextBox.App">
  <Application.Resources>
```

Read more: ScottLogic

New XML Standard for Super-Fast, Lightweight Applications Announced by W3C

Posted by jasper22 at 14:21

From embedded sensors to high-frequency stock trading to everyday mobile Web applications, the race is on for technologists to build the most efficient systems for quickly streaming large sets of data from one device to another. Sometimes the language that data is communicated in can come with high costs in terms of efficiency. Today the Web's most venerable standards body, the World Wide Web Consortium (W3C), announced official support for a new standardized data format for super-efficient transmission of data.

Efficient XML Interchange, or EXI, is described as a very compact representation of information in XML (extensible markup language). EXI is so efficient that the W3C says it has been found to improve up to 100-fold the performance, network efficiency and power consumption of applications that use XML, including but not limited to consumer mobile apps. It is particularly useful on devices with low memory or low bandwidth.

A Historic Agreement

EXI has been used in commercial contexts for more than seven years, but today's adoption of the format as a formal standard is the culmination of years of collaboration between the W3C and 23 different corporate and academic institutions from around the world, including Oracle, IBM, Adobe, Chevron, Stanford University, Boeing, Canon, France Telecom, Intel, the Web3D Consortium and others.

It's an amazing world in which transmitting large data sets is costly enough relative to creating, storing and processing them (the prices of which have already fallen dramatically) that industries have a strong incentive to work together on standards that substantially reduce data transmission costs.

Read more: ReadWrite web

Read more: W3C

Using Query Classes With NHibernate

Posted by jasper22 at 14:20

Even when using an ORM such as NHibernate, the developer still has to decide how to perform queries. The simplest strategy is to get access to an ISession and perform a query directly whenever you need data. The problem is that doing so spreads query logic throughout the entire application, a clear violation of the Single Responsibility Principle. A more advanced strategy is to use Eric Evans’s Repository pattern, isolating all query logic within the repository classes.

I prefer to use Query Classes. Every query needed by the application is represented by a query class, aka a specification. To perform a query I:

Instantiate a new instance of the required query class, providing any data that it needs

Pass the instantiated query class to an extension method on NHibernate’s ISession type.

Querying my database for all people over the age of sixteen looks like this:

[Test]
public void QueryBySpecification()
{
    var canDriveSpecification = new PeopleOverAgeSpecification(16);
    var allPeopleOfDrivingAge = session.QueryBySpecification(canDriveSpecification);
}

To be able to query for people over a certain age I had to create a suitable query class:

public class PeopleOverAgeSpecification : Specification<Person>
{
    private readonly int age;

    public PeopleOverAgeSpecification(int age)
    {
        this.age = age;
    }

    public override IQueryable<Person> Reduce(IQueryable<Person> collection)
    {
        return collection.Where(person => person.Age > age);
    }

    public override IQueryable<Person> Sort(IQueryable<Person> collection)
    {
        return collection.OrderBy(person => person.Name);
    }
}

Finally, the extension method to add QueryBySpecification to ISession:

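using System.Collections.Generic;
using NHibernate;
using NHibernate.Linq; // provides the Query<T>() extension method on ISession

public static class SessionExtensions
{
    public static IEnumerable<T> QueryBySpecification<T>(this ISession session, Specification<T> specification)
    {
        // Filter, then order, then execute the query
        return specification.Fetch(
            specification.Sort(
                specification.Reduce(session.Query<T>())));
    }
}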
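The post relies on a Specification<T> base class with Reduce, Sort and Fetch methods but does not show it. The following is a minimal sketch under that assumption: the base class, its default behaviors, the ApplySpecification helper and the EvenNumbersSpecification example are all hypothetical, not the author's code. Reduce and Sort are virtual so that overrides like the ones in PeopleOverAgeSpecification compile, and Fetch is the step where the query actually executes.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical base class: the post uses Specification<T> but does not show it,
// so these default behaviors are assumptions.
public abstract class Specification<T>
{
    // Filtering step. Default: no filter.
    public virtual IQueryable<T> Reduce(IQueryable<T> collection)
    {
        return collection;
    }

    // Ordering step. Default: keep the source order.
    public virtual IQueryable<T> Sort(IQueryable<T> collection)
    {
        return collection;
    }

    // Materialization step. Default: execute the query and buffer the results.
    public virtual IEnumerable<T> Fetch(IQueryable<T> collection)
    {
        return collection.ToList();
    }
}

// Hypothetical helper: the same Reduce -> Sort -> Fetch pipeline as
// SessionExtensions.QueryBySpecification, but over any IQueryable<T>,
// so a specification can be unit-tested in memory without an ISession.
public static class QueryableSpecificationExtensions
{
    public static IEnumerable<T> ApplySpecification<T>(this IQueryable<T> source, Specification<T> specification)
    {
        return specification.Fetch(specification.Sort(specification.Reduce(source)));
    }
}

// Hypothetical example specification: even numbers in ascending order.
public class EvenNumbersSpecification : Specification<int>
{
    public override IQueryable<int> Reduce(IQueryable<int> collection)
    {
        return collection.Where(n => n % 2 == 0);
    }

    public override IQueryable<int> Sort(IQueryable<int> collection)
    {
        return collection.OrderBy(n => n);
    }
}
```

For example, `new[] { 5, 2, 8, 3, 4 }.AsQueryable().ApplySpecification(new EvenNumbersSpecification())` yields 2, 4, 8. Given a Person type with Name and Age, the same helper could exercise PeopleOverAgeSpecification against an in-memory list before running it through NHibernate.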

Read more: Liam McLennan

Silverlight TV 65: 3D Graphics

Posted by jasper22 at 14:20

Do 3D and graphics get you going? The folks at Archetype created an amazing 3D application that we first showed in the keynote at the Silverlight Firestarter in December, 2010. In this episode, Danny Riddell, CEO of Archetype, joins John to discuss their recent work with Silverlight 5, 3D, and graphics. Watch as Danny dives into how they built the 3D medical application and takes John through a detailed tour of the application.

Read more: Channel9

6 Tips for Turbocharging Your Unit Testing

Posted by jasper22 at 14:20

Who doesn't love making software run even better? Turbocharging, if you will. Here are six tips from Typemock's developers for turbocharging Isolator.

- Need to simulate a fault? Just use Isolate.WhenCalled(...).WillThrow(new Exception()) with any method!

- Isolate.WhenCalled(...) uses Behavior Sequencing - use multiple expectations in sequence

- Isolate.Swap.AllInstances<T>() affects both existing and future instances of T!

- Use Isolator to fake any Singleton without changing a line of code: Isolate.WhenCalled(() => MyFactory.Instance).WillReturn(...)

- Use Isolate.WhenCalled(() => ...).CallOriginal() to have the fake object call an original implementation!

- Use different flavors of argument matching when setting expectations: exact or custom

What are your favorite tips with Isolator or Isolator++? Leave them in the comments and we’ll post the best ones in the next Typemock newsletter.

Read more: Typemock

Consuming a Web Service in an Android Application with C# and MonoDroid

Posted by jasper22 at 14:09

Description:

In this article I will describe how to consume a web service in a MonoDroid application using Visual Studio 2010 (MonoDroid does not work with the Visual Studio 2010 Express editions). To install MonoDroid, go to http://mono-android.net/Installation, follow the instructions and download all the necessary files.

In Figure 1, I have created a new MonoDroid application named monoAndroidapplication.

Next, as shown in Figure 2, go to Project -> Add Web Reference, enter the URL of your web service and click Add Reference. I will consume the web service at http://soatest.parasoft.com/calculator.wsdl, which adds two values and returns the result. This web service can also perform division and multiplication.

Now write the following code in the Activity.cs file:

using System;
using Android.App;
using Android.Content;
using Android.Runtime;
using Android.Views;
using Android.Widget;
using Android.OS;

namespace MonoAndroidApplicationListView
{
    [Activity(Label = "MonoAndroidApplicationListView", MainLauncher = true)]
    public class Activity1 : Activity
    {
        int count = 1;

        protected override void OnCreate(Bundle bundle)
        {
            base.OnCreate(bundle);

            // Set our view from the "main" layout resource
            SetContentView(Resource.Layout.Main);

            // The template's layout button is not used here:
            //Button button = FindViewById<Button>(Resource.Id.MyButton);

            // Instead, create a new Button, attach a click handler and set it as our view
            Button button = new Button(this);
            button.Text = "Consume!";
            button.Click += button_Click;
            SetContentView(button);

            //button.Click += delegate { button.Text = string.Format("{0} clicks!", count++); };
        }

        private void button_Click(object sender, System.EventArgs e)
        {
            // Create the web service proxy (generated by Add Web Reference)
            // and use it to add 2 numbers; this call is synchronous
            var ws = new Calculator();
            var result = ws.add(25, 10);

            // Show the result in a toast
            Toast.MakeText(this, result.ToString(), ToastLength.Long).Show();
        }
    }
}

The figure below shows the web service reference marked in red.

Read more: C# Corner

Wrapping a non-thread-safe object

Posted by jasper22 at 13:15

Introduction

A colleague came to me with a question whose answer seemed very straightforward, yet implementing it took us more than a few minutes. The question was: I have a legacy object that is not thread safe, and all of its methods are, by their nature, synchronous. It was originally written to run on the GUI thread of a client application. Later on, the object had to be moved to a server, where it faces new challenges. Requests to use its methods now come from the network, which means we face a thread-safety problem: we have to make sure only one method runs on the object at a time. On the other hand, the methods are synchronous, and it would be bad practice to keep our network requests waiting. This article shows how to create a simple wrapper around the object that synchronizes calls to the original object and exposes standard async methods.

An unsafe object to wrap

First, let's create the unsafe class that we will later wrap. This is a test class that detects proper or improper use; it can be replaced with a real unsafe object.

using System;
using System.Threading;

public class UnsafeClass
{
    long testValue = 0;
    int myInt = 5;

    public void Double()
    {
        // Flip testValue from 0 to 1; if it was already 1, another call is in progress
        if (Interlocked.CompareExchange(ref testValue, 1, 0) != 0)
            throw new ApplicationException("More than one method of UnsafeClass called simultaneously");

        myInt = myInt * 2;
        Thread.Sleep(100); // simulate real work

        Interlocked.Decrement(ref testValue);
    }
}

Note that we use Interlocked on a private field to make sure the method never runs concurrently with itself, and Thread.Sleep to simulate real work.

A wrapper

So what do we really need? An object to lock on, an instance of our UnsafeClass, and a queue of AutoResetEvent objects (Queue<AutoResetEvent>).

The class looks like this:

public class SafeClassWrapper
{
    UnsafeClass unsafeClass;
    Queue<AutoResetEvent> syncQueue;
    object syncRoot = new object();

    public SafeClassWrapper()
    {
        unsafeClass = new UnsafeClass();
        syncQueue = new Queue<AutoResetEvent>();
    }
}

Now it's time to wrap our methods with the asynchronous (Begin/End) pattern. For example, a method:

public void Double()

Will be wrapped with:

public IAsyncResult BeginDouble(AsyncCallback callback, object state)

public void EndDouble(IAsyncResult result)
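The excerpt stops at the Begin/End signatures. The following is a minimal sketch of one way the lock object and the Queue<AutoResetEvent> fields could fit together; it is my reconstruction under that assumption, not the author's code from the full article. Each call enqueues its own AutoResetEvent, runs immediately if it is first in line, and otherwise waits until the previous call signals it, so calls run one at a time in arrival order. A Task serves as the IAsyncResult, and the Value properties are hypothetical additions for the demo.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;
using System.Threading.Tasks;

// The article's test class, plus a hypothetical Value getter for the demo.
public class UnsafeClass
{
    long testValue = 0;
    int myInt = 5;

    public int Value => myInt;

    public void Double()
    {
        if (Interlocked.CompareExchange(ref testValue, 1, 0) != 0)
            throw new ApplicationException("More than one method of UnsafeClass called simultaneously");
        myInt = myInt * 2;
        Thread.Sleep(100);
        Interlocked.Decrement(ref testValue);
    }
}

public class SafeClassWrapper
{
    readonly UnsafeClass unsafeClass = new UnsafeClass();
    readonly Queue<AutoResetEvent> syncQueue = new Queue<AutoResetEvent>();
    readonly object syncRoot = new object();

    // Hypothetical, demo only: read it after all pending calls have completed.
    public int Value => unsafeClass.Value;

    public IAsyncResult BeginDouble(AsyncCallback callback, object state)
    {
        // Task implements IAsyncResult, and its AsyncState is the state passed here
        Task task = Task.Factory.StartNew(_ => RunExclusive(unsafeClass.Double), state);
        if (callback != null)
            task.ContinueWith(t => callback(t));
        return task;
    }

    public void EndDouble(IAsyncResult result)
    {
        // Blocks until the call finishes and rethrows any exception it raised
        ((Task)result).GetAwaiter().GetResult();
    }

    // Runs one call at a time, in the order the callers arrived
    void RunExclusive(Action action)
    {
        var myTurn = new AutoResetEvent(false);
        bool mustWait;
        lock (syncRoot)
        {
            syncQueue.Enqueue(myTurn);
            mustWait = syncQueue.Count > 1; // someone is running or waiting ahead of us
        }
        if (mustWait)
            myTurn.WaitOne(); // stays signaled if Set() happened before we got here

        try
        {
            action();
        }
        finally
        {
            lock (syncRoot)
            {
                syncQueue.Dequeue().Dispose(); // our own event is at the head
                if (syncQueue.Count > 0)
                    syncQueue.Peek().Set(); // wake the next caller in line
            }
        }
    }
}
```

Three overlapping BeginDouble calls followed by their EndDouble calls complete without the ApplicationException and leave the value at 5 × 2 × 2 × 2 = 40. Waiting callers block a thread-pool thread while they queue, which is acceptable for a sketch; if strict FIFO order is not required, SemaphoreSlim.WaitAsync would avoid that blocking.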

Read more: Codeproject

Handling WP7 orientation changes via Visual States

Posted by jasper22 at 13:13

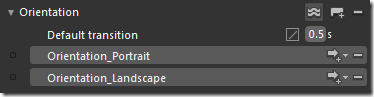

It is pretty well known that an app can be notified of the phone’s orientation changes via the PhoneApplicationFrame.OrientationChanged event. However, if you are as serious about sharing the work between the designer and the developer as I am, you will not be happy with this – the changing of the layout should be the responsibility of the designer, not the developer.

Of course, the best way to achieve the different layouts is via Visual States. You can define two states like this in Blend:

Then move your controls around in each state. For example, in SurfCube V2.2, we wanted to have the tabs and the tab buttons below each other in Portrait mode, but in a totally different arrangement in Landscape.

Read more: VBandi's blog

Crytek to release UDK alternative

Posted by jasper22 at 13:12

Co-founder Yerli ponders releasing engine for free; talks up mobile future

CryEngine 3 vendor Crytek is planning the release of an indie-friendly SDK, the firm has told Develop.

Crytek co-founder Avni Yerli said his firm already “has a business model in mind” for the CryEngine software development kit.

“It will be extremely user-friendly,” he said.

“The barriers for entry will be very low, and perhaps [it will be distributed] for free”, he added.

Yerli said he was aware the strategy will naturally be compared to Epic Games’ own UDK – a high-end game engine that is freely available for developers and hobbyists to download.

“Of course this [the CryEngine SDK] will be compared to UDK and Unity and so on, but we think this sort of competition is very good for the community.”

Yerli held back on discussing details of the dev kit. Its revenue model and supported platforms remain a matter of speculation.

Epic Games’ own UDK takes 25 per cent of wholesale revenues from commercial games built on the engine. The tech can be used to make games across a variety of platforms, from PC to smartphone.

Asked about Crytek’s plan for mobile, Yerli said “the use of CryEngine outside of core games makes a lot of sense to us and we are very interested”.

“We actually have some developers going towards mobile and online for web and browser games,” he added.

Read more: Develop
