What's new in Linux 2.6.38

Posted by jasper22 at 16:11

A fairly minor change in the process scheduler makes systems running 2.6.38 feel much faster, and more far-reaching changes to the VFS (virtual file system) speed up some tasks considerably. Driver changes that deserve mention include new Wireless LAN (WLAN) drivers and expanded support for current graphics chips from AMD and Nvidia.

Ten weeks – that's all the time that Linus Torvalds and his colleagues needed to complete version 2.6.38 of the Linux kernel, which was released last night. The kernel was almost ready a few days ago, which just goes to show that the kernel developers now only need around 65 to 80 days to produce a new version; a year or two ago, they generally needed around two weeks longer.

Nevertheless, there is no shortage of changes, such as the automatic grouping of processes within a session, which has caused a lot of commotion in the Linux online world because it is expected to make the desktop environment considerably more responsive under certain conditions. The second major change concerns the Virtual File System (VFS), which mediates between the file system code and user space. Torvalds has been waiting for the integration of these patches for some time and expressed his excitement in two emails, in which he talked about how much faster "find" ran in a simple file system search.

Some of the other changes will probably be just as important for some users, such as the graphics drivers for new graphics chips from AMD and Nvidia or the new and improved Wireless LAN (WLAN) drivers for chips from Atheros, Broadcom, Intel, Ralink and Realtek. In contrast, server admins will probably be more interested in such things as the new SCSI target LIO and Transmit Packet Steering (XPS).

Read more: The H-Open

Running Hadoop On Ubuntu Linux (Multi-Node Cluster)

Posted by jasper22 at 16:09

Table of Contents:

- What we want to do

- Tutorial approach and structure

- Prerequisites

- Configuring single-node clusters first

- Done? Let’s continue then!

- Networking

- SSH access

- Hadoop

- Cluster Overview (aka the goal)

- Masters vs. Slaves

- Configuration

- conf/masters (master only)

- conf/slaves (master only)

- conf/*-site.xml (all machines)

- Starting the multi-node cluster

- HDFS daemons

- MapReduce daemons

- Stopping the multi-node cluster

- MapReduce daemons

- HDFS daemons

- Running a MapReduce job

- Caveats

- java.io.IOException: Incompatible namespaceIDs

- Workaround 1: Start from scratch

- Workaround 2: Updating namespaceID of problematic datanodes

- What’s next?

- Related Links

- Changelog


What we want to do

In this tutorial, I will describe the required steps for setting up a multi-node Hadoop cluster using the Hadoop Distributed File System (HDFS) on Ubuntu Linux.

Are you looking for the single-node cluster tutorial? Just head over there.

Hadoop is a framework written in Java for running applications on large clusters of commodity hardware and incorporates features similar to those of the Google File System and of MapReduce. HDFS is a highly fault-tolerant distributed file system and, like Hadoop, is designed to be deployed on low-cost hardware. It provides high-throughput access to application data and is suitable for applications that have large data sets.

Read more: Michael G. Noll

android patterns

Posted by jasper22 at 16:08

This is androidpatterns.com, a set of interaction patterns that can help you design Android apps. An interaction pattern is a shorthand summary of a design solution that has proven to work more than once. Please be inspired: use them as a guide, not as a law.

Read more: android patterns

iLBC.NET

Posted by jasper22 at 16:07

Project Description

A port of the iLBC (internet Low Bitrate Codec) speech codec for the .NET platform.

Details

This is a port of the iLBC (http://www.ilbcfreeware.org/) speech codec to C#, converted from the Java port by Jitsi (SIP Communicator) http://jitsi.org/.

The project targets .NET 4.0 and can be ported to Silverlight too.

License

The source code is available under the LGPL license http://www.gnu.org/licenses/lgpl.html

Read more: Codeplex

.Net Perf - timing profiler for .Net

Posted by jasper22 at 16:06

Introducing .Net Perf Timing. A FREE timing profiler for .Net

What is a timing profiler?

A timing profiler provides you with the ability to determine exactly how much time is being spent inside each method, relative to the total execution time of the application.

How does it work?

The application works by pre-instrumenting your assemblies with calls to our application. This allows us to keep track of when a method is entered and exited.
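As a rough illustration of what "pre-instrumenting" could mean, the sketch below shows a method as it might look after entry and exit calls have been added around its body. This is not .Net Perf's actual output or API: TimingRecorder, Enter, Exit and the method name string are all hypothetical names invented for this example.

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;

// Hypothetical stand-in for the profiler runtime that instrumented code calls into.
static class TimingRecorder
{
    private static readonly Dictionary<string, long> TotalTicks = new Dictionary<string, long>();
    private static readonly Dictionary<string, int> Calls = new Dictionary<string, int>();
    private static readonly object Gate = new object();

    // Called on method entry: returns a high-resolution timestamp.
    public static long Enter()
    {
        return Stopwatch.GetTimestamp();
    }

    // Called on method exit: adds the elapsed ticks to the method's running total.
    public static void Exit(string method, long startTicks)
    {
        long elapsed = Stopwatch.GetTimestamp() - startTicks;
        lock (Gate)
        {
            long total;
            int count;
            TotalTicks.TryGetValue(method, out total);
            Calls.TryGetValue(method, out count);
            TotalTicks[method] = total + elapsed;
            Calls[method] = count + 1;
        }
    }

    public static int CallCount(string method)
    {
        lock (Gate)
        {
            int count;
            Calls.TryGetValue(method, out count);
            return count;
        }
    }

    public static double TotalMilliseconds(string method)
    {
        lock (Gate)
        {
            long total;
            TotalTicks.TryGetValue(method, out total);
            return total * 1000.0 / Stopwatch.Frequency;
        }
    }
}

static class ProfilerDemo
{
    // What a method might look like once entry/exit calls have been inserted.
    public static int SumTo(int n)
    {
        long start = TimingRecorder.Enter();
        try
        {
            int sum = 0;
            for (int i = 1; i <= n; i++) sum += i;
            return sum;
        }
        finally
        {
            // finally ensures the exit is recorded even if the body throws.
            TimingRecorder.Exit("ProfilerDemo.SumTo", start);
        }
    }

    static void Main()
    {
        Console.WriteLine(SumTo(10));
        Console.WriteLine(TimingRecorder.CallCount("ProfilerDemo.SumTo"));
        Console.WriteLine(TimingRecorder.TotalMilliseconds("ProfilerDemo.SumTo") + " ms");
    }
}
```

A real tool would presumably inject the equivalent calls into the compiled assembly rather than the source, but the bookkeeping idea is the same.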

Why would I want to pre-instrument instead of instrumenting while the application is running?

It is very difficult to get multiple applications to play well together when they are being instrumented in real time. Because we pre-instrument, we will not interfere with programs like TypeMock or NCover, to name a few.

What's the deal with your website?

We made a quick attempt at getting Orchard up and running on Azure. We are not web gurus; we are testing and performance gurus. We wanted to get the program out as quickly as possible. We will be working on the site over the next few weeks, and over the next week we will be implementing a forum and a redesign.

How can I get in touch with you?

Follow us on Twitter at @dotnetperf and we will follow you back.

Read more: .Net Perf

Web Platform Installer bundles for Visual Studio 2010 SP1 - and how you can build your own WebPI bundles

Posted by jasper22 at 16:05

Visual Studio SP1 is now available via the Web Platform Installer, which means you've got three options:

- Download the 1.5 GB ISO image

- Run the 750KB Web Installer (which figures out what you need to download)

- Install via Web PI

Note: I covered some tips for installing VS2010 SP1 last week - including some that apply to all of these, such as removing options you don't use prior to installing the service pack to decrease the installation time and download size.

Two Visual Studio 2010 SP1 Web PI packages

There are actually two WebPI packages for VS2010 SP1. There's the standard Visual Studio 2010 SP1 package [Web PI link], which includes (quoting ScottGu's post):

- VS2010 SP1

- ASP.NET MVC 3 (runtime + tools support)

- IIS 7.5 Express

- SQL Server Compact Edition 4.0 (runtime + tools support)

- Web Deployment 2.0

The notes on that package sum it up pretty well:

Looking for the latest everything? Look no further. This will get you Visual Studio 2010 Service Pack 1 and the RTM releases of ASP.NET MVC 3, IIS 7.5 Express, SQL Server Compact 4.0 with tooling, and Web Deploy 2.0. It's the value meal of Microsoft products. Tell your friends! Note: This bundle includes the Visual Studio 2010 SP1 web installer, which will dynamically determine the appropriate service pack components to download and install. This is typically in the range of 200-500 MB and will take 30-60 minutes to install, depending on your machine configuration.

There is also a Visual Studio 2010 SP1 Core package [Web PI link], which includes only the SP without any of the other goodies (MVC3, IIS Express, etc.). If you're doing any web development, I'd highly recommend the main pack since the other installs are small, simple installs, but if you're working in another space, you might want the core package.

Read more: Jon Galloway

Android 3.0 Hardware Acceleration

Posted by jasper22 at 16:00

One of the biggest changes we made to Android in this release is the addition of a new rendering pipeline so that applications can benefit from hardware-accelerated 2D graphics. Hardware-accelerated graphics is nothing new to the Android platform; it has always been used for window composition or OpenGL games, for instance. With this new rendering pipeline, however, applications can benefit from an extra boost in performance. On a Motorola Xoom device, all the standard applications like Browser and Calendar use hardware-accelerated 2D graphics.

In this article, I will show you how to enable the hardware accelerated 2D graphics pipeline in your application and give you a few tips on how to use it properly.

Go faster

To enable the hardware accelerated 2D graphics, open your AndroidManifest.xml file and add the following attribute to the <application /> tag:

android:hardwareAccelerated="true"

If your application uses only standard widgets and drawables, this should be all you need to do. Once hardware acceleration is enabled, all drawing operations performed on a View's Canvas are performed using the GPU.

If you have custom drawing code you might need to do a bit more, which is in part why hardware acceleration is not enabled by default. And it's why you might want to read the rest of this article, to understand some of the important details of acceleration.

Controlling hardware acceleration

Because of the characteristics of the new rendering pipeline, you might run into issues with your application. Problems usually manifest themselves as invisible elements, exceptions or different-looking pixels. To help you, Android gives you 4 different ways to control hardware acceleration. You can enable or disable it on the following elements:

- Application

- Activity

- Window

- View

Read more: Android Developers

Thread Local Storage in C#

Posted by jasper22 at 15:59

By calling the GetData and SetData methods that the Thread class provides, we can keep data isolated separately for each thread. The following video clip shows and explains that.
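Since the video is not reproduced here, the following is a minimal sketch of how Thread.GetData and Thread.SetData might be used. The class name TlsDemo and the stored strings are made up for illustration; only the Thread and LocalDataStoreSlot calls come from the framework.

```csharp
using System;
using System.Threading;

static class TlsDemo
{
    // Returns { what the worker thread saw, what the calling thread still sees }.
    public static string[] Run()
    {
        // The slot is allocated once; every thread then keeps its own value in it.
        LocalDataStoreSlot slot = Thread.AllocateDataSlot();
        Thread.SetData(slot, "main");

        string workerView = null;
        var worker = new Thread(() =>
        {
            // Nothing has been stored on this thread yet, so GetData returns null.
            object before = Thread.GetData(slot);
            Thread.SetData(slot, "worker");
            workerView = (before ?? "<null>") + " -> " + Thread.GetData(slot);
        });
        worker.Start();
        worker.Join();

        // The worker's SetData call did not touch the value stored by this thread.
        return new[] { workerView, (string)Thread.GetData(slot) };
    }

    static void Main()
    {
        string[] result = Run();
        Console.WriteLine("worker saw:      " + result[0]); // <null> -> worker
        Console.WriteLine("main still sees: " + result[1]); // main
    }
}
```

In .NET 4 the [ThreadStatic] attribute and the ThreadLocal<T> class are alternative ways to get per-thread data, and may be more convenient when the data is strongly typed.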

Read more: Life Michael

Task Parallel Library: 4 of n

Posted by jasper22 at 15:58

Introduction

This is the 4th part of my proposed series of articles on TPL. Last time I introduced Parallel For and Foreach, and covered this ground:

Parallel For/Foreach

Creating A Simple Parallel For/Foreach

Breaking And Stopping A Parallel Loop

Handling Exceptions

Cancelling A Parallel Loop

Partitioning For Better Performance

Using Thread Local Storage

This time we are going to be looking at how to use Parallel LINQ, or PLINQ as it is better known. We shall also be looking at how to do the usual TPL-like things such as cancelling and dealing with exceptions, and we shall look at how to use custom partitioning and custom aggregates.

Article Series Roadmap

This is article 4 of a possible 6, which I hope people will like. Shown below is the rough outline of what I would like to cover.

Starting Tasks / Trigger Operations / ExceptionHandling / Cancelling / UI Synchronization

Continuations / Cancelling Chained Tasks

Parallel For / Custom Partitioner / Aggregate Operations

Parallel LINQ (this article)

Pipelines

Advanced Scenarios / v.Next For Tasks

Now I am aware that some folk will simply read this article and state that it is similar to what is currently available on MSDN, and I in part agree with that. However, there are several reasons I have chosen to still take on the task of writing up these articles, which are as follows:

It will only really be the first couple of articles which show similar ideas to MSDN. After that I feel the material I will get into will not be on MSDN, and will be the result of some TPL research on my behalf, which I will be outlining in the article(s), so you will benefit from my research which you can just read... Aye, nice

There will be screen shots of live output here, which is something MSDN does not have that much of, and which may help some readers to reinforce the article(s) text

There may be some readers out here that have never even heard of the Task Parallel Library so would not come across it in MSDN; you know the old story, you have to know what you are looking for in the first place.

I enjoy threading articles, so like doing them, so I did them, will do them, have done them, and continue to do them

All that said, if people, having read this article, truly think this is too similar to MSDN (which I still hope it won't be), let me know that as well, and I will try and adjust the upcoming articles to make amends.

Table Of Contents

Anyway what I am going to cover in this article is as follows:

Introduction To PLinq

Useful Extension Methods

Simple PLinq Example

Ordering

Using Ranges

Handling Exceptions

Cancelling A PLinq Query

Partitioning For Possibly Better Performance

Using Custom Aggregation

Introduction To PLinq

As most .NET developers are now aware, there is inbuilt support for querying data inside of .NET, which is known as Linq (Language Integrated Query) and which comes in several main flavours: Linq to Objects, Linq to SQL/EF and Linq to XML.

We have all probably grown to love writing things like this in our everyday existence:

(from x in someData where x.SomeCriteria == matchingVariable select x).Count();

Or

(from x in peopleData where x.Age > 50 select x).ToList();

Which is a valuable addition to the .NET language; I certainly could not get by without my Linq. Thing is, the designers of TPL have thought about this and have probably seen a lot of Linq code that simply loops through looking for a certain item, or counts the items where some Predicate<T> is met, or performs some aggregate such as the normal Linq extension methods Sum(), Average(), Aggregate() etc.
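The excerpt stops before showing any PLINQ, so here is a small sketch of how the Age > 50 query above might be run in parallel by adding AsParallel(). The Person class and the generated sample data are assumptions made up for this example; AsParallel, Count and Average are the standard System.Linq methods.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

class Person
{
    public string Name { get; set; }
    public int Age { get; set; }
}

static class PlinqDemo
{
    // Hypothetical sample data: 1000 people with ages cycling through 0..99.
    public static List<Person> MakePeople()
    {
        return Enumerable.Range(0, 1000)
            .Select(i => new Person { Name = "Person" + i, Age = i % 100 })
            .ToList();
    }

    // The ordinary Linq to Objects query from the text.
    public static int CountOver50Sequential(List<Person> people)
    {
        return (from x in people where x.Age > 50 select x).Count();
    }

    // The same query, with AsParallel() asking PLINQ to split the work across cores.
    public static int CountOver50Parallel(List<Person> people)
    {
        return (from x in people.AsParallel() where x.Age > 50 select x).Count();
    }

    static void Main()
    {
        List<Person> peopleData = MakePeople();
        Console.WriteLine(CountOver50Sequential(peopleData)); // 490
        Console.WriteLine(CountOver50Parallel(peopleData));   // 490
        Console.WriteLine(peopleData.AsParallel().Average(p => p.Age)); // 49.5
    }
}
```

For a collection this small the parallel version is unlikely to be faster; the point is only that the query shape stays the same.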

Read more: Codeproject

How to find out where the IsolatedStorage is in a Windows Phone 7 app?

Posted by jasper22 at 15:56

Experienced Windows Phone 7 developers already know that there is an actual file system on the device that is structurally similar to that of the desktop Windows OS. A lot of the content managed by the device is stored in local spots scattered across the system that are not publicly disclosed (although since the OS image was already disassembled, most of them are known).

One of these locations is the folder that is designated as the isolated storage for a specific application. It is fairly easy to access it via the default System.IO.IsolatedStorage classes, but it is also possible to get the actual location of the folder that is assigned to the app.

To do this, simply add this line to your program:

IsolatedStorageFile file = IsolatedStorageFile.GetUserStoreForApplication();

Set a breakpoint on a line after the one above, so that file has been assigned an actual instance. Once the breakpoint is hit, look at the data related to file, specifically at the m_AppFilesPath field:

Read more: Windows Phone 7

How to deal with CPU usage in a WPF application?

Posted by jasper22 at 15:54

If you are building a WPF application with lots of animation, some of which may run forever, you must have run into the problem of the program eating up your entire CPU, or a mammoth portion of it, while it is running.

The problem is that, by default, the frame rate for a WPF application is set to 60 frames per second. So whenever your application has even a slight change, the WPF environment draws 60 frames every second and eventually takes up a lot of CPU in doing so.

To deal with such a scenario, you should set Timeline.DesiredFrameRate = 20, or any value that suits you.

<EventTrigger RoutedEvent="Mouse.MouseEnter">

<EventTrigger.Actions>

<BeginStoryboard >

<Storyboard Timeline.DesiredFrameRate="20">

<DoubleAnimation Duration="0:0:2"

Storyboard.TargetProperty="RenderTransform.ScaleX" To="3" />

<DoubleAnimation Duration="0:0:2"

Storyboard.TargetProperty="RenderTransform.ScaleY" To="3" />

</Storyboard>

</BeginStoryboard>

</EventTrigger.Actions>

</EventTrigger>

In this Trigger, you can see that Timeline.DesiredFrameRate is set to 20 on the Storyboard. You can set its value between 1 and 99, but anything below 10 will give performance hiccups for your application.

If you have already built your application and want to change the default value of Timeline.DesiredFrameRate, you can use:

Timeline.DesiredFrameRateProperty.OverrideMetadata(

typeof(Timeline),

new FrameworkPropertyMetadata { DefaultValue = 10 }

);

Read more: Daily .Net Tips

Implementing the virtual method pattern in C#, Part One

Posted by jasper22 at 15:53

I was asked recently how virtual methods work "behind the scenes": how does the CLR know at runtime which derived class method to call when a virtual method is invoked on a variable typed as the base class? Clearly it must have something upon which to make a decision, but how does it do so efficiently? I thought I might explore that question by considering how you might implement the "virtual and instance method pattern" in a language which did not have virtual or instance methods. So, for the rest of this series I am banishing virtual and instance methods from C#. I'm leaving delegates in, but delegates can only be to static methods. Our goal is to take a program written in regular C# and see how it can be transformed into C#-without-instance-methods. Along the way we'll get some insights into how virtual methods really work behind the scenes.

Let's start with some classes with a variety of behaviours:

abstract class Animal

{

public abstract string Complain();

public virtual string MakeNoise()

{

return "";

}

}

class Giraffe : Animal

{

public bool SoreThroat { get; set; }

public override string Complain()

{

return SoreThroat ? "What a pain in the neck!" : "No complaints today.";

}

}

class Cat : Animal

{

public bool Hungry { get; set; }

public override string Complain()

{

return Hungry ? "GIVE ME THAT TUNA!" : "I HATE YOU ALL!";

}

public override string MakeNoise()

{

return "MEOW MEOW MEOW MEOW MEOW MEOW";

}

}

class Dog : Animal

{

public bool Small { get; set; }

public override string Complain()

{

return "Our regressive state tax code is... SQUIRREL!";

}

public string MakeNoise() // We forgot to say "override"!

{

return Small ? "yip" : "WOOF";

}

}

Anyone who has spent five minutes in the same room as my cat and a can of tuna will recognize her influence on the program above.

OK, so we've got some abstract methods, some virtual base class methods, classes which do and do not override various methods, and one (accidental) instance method. We might have this program fragment:

string s;

Animal animal = new Giraffe();

s = animal.Complain(); // no complaints

s = animal.MakeNoise(); // no noise

animal = new Cat();

s = animal.Complain(); // I hate you

s = animal.MakeNoise(); // meow!

Dog dog = new Dog();

animal = dog;

s = animal.Complain(); // squirrel!

s = animal.MakeNoise(); // no noise

s = dog.MakeNoise(); // yip!

What has to happen here? Two interesting things. First, when Complain or MakeNoise is called on a value of type Animal, the call must be dispatched to the appropriate method based on the runtime type of the receiver. Second, when MakeNoise is called on a dog, somehow we have to do one thing if the value was of compile-time type Dog, and a different thing if the value was of compile-time type Animal but runtime type Dog.

How would we do this in a language without virtual or instance methods? Remember, every method has to be a static method.

Let's look at the non-virtual instance method first. That's straightforward. The callee can be written as:

public static string MakeNoise(Dog _this)

{

return _this.Small ? "yip" : "WOOF";

}

and the caller can be written as:

s = Dog.MakeNoise(dog); // yip!
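The excerpt ends before the virtual case is covered. One conceivable way to get runtime dispatch with only static methods is to give each object a delegate field per virtual "slot" and fill the slots in when the object is created. The sketch below is an assumption for illustration, not necessarily the approach the series takes; the slot field names and the Create helpers are invented, and Giraffe is omitted for brevity.

```csharp
using System;

class Animal
{
    // One delegate field per virtual method; each points at a static method.
    public Func<Animal, string> ComplainSlot;
    public Func<Animal, string> MakeNoiseSlot;

    // Base implementation of the virtual MakeNoise.
    public static string MakeNoise(Animal _this) { return ""; }
}

class Cat : Animal
{
    public bool Hungry;

    public static string Complain(Animal _this)
    {
        return ((Cat)_this).Hungry ? "GIVE ME THAT TUNA!" : "I HATE YOU ALL!";
    }

    // "Overrides" MakeNoise by being placed in the slot at creation time.
    public static new string MakeNoise(Animal _this)
    {
        return "MEOW MEOW MEOW MEOW MEOW MEOW";
    }

    public static Cat Create()
    {
        return new Cat { ComplainSlot = Complain, MakeNoiseSlot = MakeNoise };
    }
}

class Dog : Animal
{
    public bool Small;

    public static string Complain(Animal _this)
    {
        return "Our regressive state tax code is... SQUIRREL!";
    }

    // The accidental non-virtual method: never stored in a slot.
    public static string MakeNoise(Dog _this)
    {
        return _this.Small ? "yip" : "WOOF";
    }

    public static Dog Create()
    {
        // No override, so the slot keeps the base implementation.
        return new Dog { ComplainSlot = Complain, MakeNoiseSlot = Animal.MakeNoise };
    }
}

static class DispatchDemo
{
    static void Main()
    {
        Animal animal = Cat.Create();
        Console.WriteLine(animal.ComplainSlot(animal));   // I HATE YOU ALL!
        Console.WriteLine(animal.MakeNoiseSlot(animal));  // MEOW MEOW MEOW MEOW MEOW MEOW

        Dog dog = Dog.Create();
        animal = dog;
        Console.WriteLine(animal.MakeNoiseSlot(animal));  // empty string: base implementation
        Console.WriteLine(Dog.MakeNoise(dog));            // WOOF (Small defaults to false)
    }
}
```

Calling through the slot picks the implementation chosen at creation time (runtime type), while Dog.MakeNoise(dog) is bound by the compiler from the static type, which mirrors the two behaviours the text describes.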

Read more: Fabulous Adventures In Coding

Code Contracts

Posted by jasper22 at 00:36

Code contracts provide a way to specify preconditions, postconditions, and object invariants in your code. Preconditions are requirements that must be met when entering a method or property. Postconditions describe expectations at the time the method or property code exits. Object invariants describe the expected state for a class that is in a good state.

Code contracts include classes for marking your code, a static analyzer for compile-time analysis, and a runtime analyzer. The classes for code contracts can be found in the System.Diagnostics.Contracts namespace.

The benefits of code contracts include the following:

Improved testing: Code contracts provide static contract verification, runtime checking, and documentation generation.

Automatic testing tools: You can use code contracts to generate more meaningful unit tests by filtering out meaningless test arguments that do not satisfy preconditions.

Static verification: The static checker can decide whether there are any contract violations without running the program. It checks for implicit contracts, such as null dereferences and array bounds, and explicit contracts.

Reference documentation: The documentation generator augments existing XML documentation files with contract information. There are also style sheets that can be used with Sandcastle so that the generated documentation pages have contract sections.

All .NET Framework languages can immediately take advantage of contracts; you do not have to write a special parser or compiler. A Visual Studio add-in lets you specify the level of code contract analysis to be performed. The analyzers can confirm that the contracts are well-formed (type checking and name resolution) and can produce a compiled form of the contracts in Microsoft intermediate language (MSIL) format. Authoring contracts in Visual Studio lets you take advantage of the standard IntelliSense provided by the tool.

Most methods in the contract class are conditionally compiled; that is, the compiler emits calls to these methods only when you define a special symbol, CONTRACTS_FULL, by using the #define directive. CONTRACTS_FULL lets you write contracts in your code without using #ifdef directives; you can produce different builds, some with contracts, and some without.

For tools and detailed instructions for using code contracts, see Code Contracts on the MSDN DevLabs Web site.

Preconditions

You can express preconditions by using the Contract.Requires method. Preconditions specify state when a method is invoked. They are generally used to specify valid parameter values. All members that are mentioned in preconditions must be at least as accessible as the method itself; otherwise, the precondition might not be understood by all callers of a method. The condition must have no side-effects. The run-time behavior of failed preconditions is determined by the runtime analyzer.

For example, the following precondition expresses that parameter x must be non-null.

Contract.Requires( x != null );

If your code must throw a particular exception on failure of a precondition, you can use the generic overload of Requires as follows.

Contract.Requires<ArgumentNullException>( x != null, "x" );

Legacy Requires Statements

Most code contains some parameter validation in the form of if-then-throw code. The contract tools recognize these statements as preconditions in the following cases:

The statements appear before any other statements in a method.

The entire set of such statements is followed by an explicit Contract method call, such as a call to the Requires, Ensures, EnsuresOnThrow, or EndContractBlock method.

When if-then-throw statements appear in this form, the tools recognize them as legacy requires statements. If no other contracts follow the if-then-throw sequence, end the code with the Contract.EndContractBlock method.


if ( x == null ) throw new ...

Contract.EndContractBlock(); // All previous "if" checks are preconditions

Note that the condition in the preceding test is a negated precondition. (The actual precondition would be x != null.) A negated precondition is highly restricted: It must be written as shown in the previous example; that is, it should contain no else clauses, and the body of the then clause must be a single throw statement. The if test is subject to both purity and visibility rules (see Usage Guidelines), but the throw expression is subject only to purity rules. However, the type of the exception thrown must be as visible as the method in which the contract occurs.
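A complete method in the legacy-requires form might look like the sketch below. TextUtil and CountWords are hypothetical names chosen for the example. Because Contract.EndContractBlock is conditionally compiled on CONTRACTS_FULL, this code also builds and runs without the contract tools installed; the if-then-throw check itself always executes.

```csharp
using System;
using System.Diagnostics.Contracts;

static class TextUtil
{
    public static int CountWords(string text)
    {
        // Negated precondition: no else clause, and the body is a single throw.
        if (text == null) throw new ArgumentNullException("text");

        // Marks everything above as preconditions for the contract tools.
        Contract.EndContractBlock();

        return text.Split(new[] { ' ' }, StringSplitOptions.RemoveEmptyEntries).Length;
    }

    static void Main()
    {
        Console.WriteLine(CountWords("code contracts in action")); // 4

        try
        {
            CountWords(null);
        }
        catch (ArgumentNullException ex)
        {
            Console.WriteLine("rejected: " + ex.ParamName); // rejected: text
        }
    }
}
```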

Postconditions

Postconditions are contracts for the state of a method when it terminates. The postcondition is checked just before exiting a method. The run-time behavior of failed postconditions is determined by the runtime analyzer.

Unlike preconditions, postconditions may reference members with less visibility. A client may not be able to understand or make use of some of the information expressed by a postcondition using private state, but this does not affect the client's ability to use the method correctly.

Standard Postconditions

You can express standard postconditions by using the Ensures method. Postconditions express a condition that must be true upon normal termination of the method.

Contract.Ensures( this.F > 0 );
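To show where such a call sits in a method, here is a minimal sketch that pairs a precondition with a postcondition on the return value. MathUtil and Abs are hypothetical names for this example; Contract.Requires, Contract.Ensures and Contract.Result<T> are the System.Diagnostics.Contracts members. Note the assumption about the build: without the CONTRACTS_FULL symbol the Requires and Ensures calls are compiled away, so this sketch runs as ordinary code, and actual runtime checking depends on the Code Contracts tools.

```csharp
using System;
using System.Diagnostics.Contracts;

static class MathUtil
{
    public static int Abs(int x)
    {
        // Precondition: int.MinValue has no positive counterpart.
        Contract.Requires(x != int.MinValue);

        // Postcondition: written at the top of the method, but it describes
        // the state on normal exit. Contract.Result<int>() stands for the return value.
        Contract.Ensures(Contract.Result<int>() >= 0);

        return x < 0 ? -x : x;
    }

    static void Main()
    {
        Console.WriteLine(Abs(-7)); // 7
        Console.WriteLine(Abs(3));  // 3
    }
}
```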

Read more: MSDN

ClickOnce Deployment using IIS / Apache Server for VSTO

Posted by jasper22 at 00:19

We can use ClickOnce deployment on almost any HTTP web server. ClickOnce deployment makes it very easy for end users to install the required application. I found ClickOnce deployment particularly useful when you want to distribute add-ins and document-level customization projects for Excel / Word to end users in an internet/intranet scenario.

Below are the steps to configure Apache Server for ClickOnce deployment of add-in / document customization projects:

1. Open the project you would like to publish.

2. Open the project properties. Update the properties as shown below.

a. Publishing Folder is the location that is embedded into the VSTO manifest so that the add-in can locate the server for updates.

b. Installation location is the location where the setup files are dropped.

....

4. Add MIME types to the Apache server to enable ClickOnce deployment. Apache server version 2.2 keeps httpd.conf at [Install Location] -> Apache Software Foundation->Apache2.2->conf

AddType application/x-ms-application application

AddType application/x-ms-manifest manifest

AddType application/octet-stream deploy

AddType application/vnd.ms-xpsdocument xps

AddType application/xaml+xml xaml

AddType application/x-ms-xbap xbap

AddType application/x-silverlight-app xap

AddType application/microsoftpatch msp

AddType application/microsoftupdate msu

Read more: JK's Blog

Read more: Stackoverflow

Kaxaml

Welcome to Kaxaml!

Kaxaml is a lightweight XAML editor that gives you a "split view" so you can see both your XAML and your rendered content (kind of like XamlPad but without the gigabyte of SDK). Kaxaml is a hobby and was created to be shared, so it's free! Feel free to download and try it out. If you don't like it, it cleans up nicely.

Kaxaml is designed to be "notepad for XAML." It's supposed to be simple and lightweight and make it easy to just try something out. It also has some basic support for intellisense and some fun plugins (including one for snippets, one for cleaning up your XAML and one for rendering your XAML to an image).

If you're having problems with the installer or if you're just a do-it-yourself kind of individual, you can download the files you need as a zip.

Silverlight

This version of Kaxaml has basic support for Silverlight. In order for the Silverlight support to work, you need to have Silverlight 4 installed. Get it here. To open a Silverlight tab, choose "New Silverlight Tab" from the file menu or hit Ctrl+L on your keyboard. Getting Silverlight properly integrated is still a work in progress so it may not always behave properly.

Read more: Kaxaml

Exception handling in T-SQL/TRY…CATCH – Underappreciated features of Microsoft SQL Server

Posted by jasper22 at 15:28

As we continue our journey through the “Underappreciated features of SQL Server”, this week we are looking at a few of the T-SQL enhancements that the community felt did not get the attention they deserved. This was in response to Andy Warren’s editorial of the same name on SQLServerCentral.com.

Today, we will look at exception handling in T-SQL using the TRY…CATCH statements introduced in SQL Server 2005. Because this is now almost 6 years old, I was a bit surprised to see this in the list of underappreciated features. Hence, while I will touch upon the essential points, I will provide pointers that would help you to get started on the use of TRY…CATCH and then explore a new related feature introduced in SQL 11 (“Denali”). I am posting this on a Thursday because I will be covering the exception handling options from SQL 7/2000 onwards, and so that the reader gets some time to experiment with the various options presented here over the weekend.

Exception handling in T-SQL – before SQL Server 2005

Before SQL Server 2005, exception handling was very primitive, with a handful of limitations. Let’s see how exception handling was done in the days of SQL Server 7/2000:

BEGIN TRANSACTION ExceptionHandling

DECLARE @ErrorNum INT

DECLARE @ErrorMsg VARCHAR(8000)

--Divide by 0 to generate the error

SELECT 1/0

-- Error handling in SQL 7/2000

-- Drawback #1: Checking for @@Error must be done immediately after execution of a statement

SET @ErrorNum = @@ERROR

IF @ErrorNum <> 0

BEGIN

-- Error handling in SQL 7/2000

-- Drawback #2: When returning an error message to the calling program, the Message number,

-- and location/line# are no longer same as the original error.

-- Explicit care must be taken to ensure that the error number & message

-- is returned to the user.

-- Drawback #3: Error message is not controlled by the application, but by the T-SQL code!

-- (Please note that there is only one 'E' in RAISERROR)

SELECT @ErrorMsg = description FROM sysmessages WHERE error = @ErrorNum

RAISERROR ('An error occurred within a user transaction. Error number is: %d, and message is: %s',16, 1, @ErrorNum, @ErrorMsg) WITH LOG

ROLLBACK TRANSACTION ExceptionHandling

END

IF (@ErrorNum = 0)

COMMIT TRANSACTION ExceptionHandling

Read more: Beyond Relational

Debug Android UI

Posted by jasper22 at 15:24

When debugging an Android application it’s important to look at the structure of the UI. There are tools that help you visualize the UI. In this article I will explain two of them:

Hierarchy Viewer

Layoutopt

Hierarchy Viewer

The Hierarchy Viewer application provides a visual representation of the layout’s View hierarchy (the Layout View) and a magnified inspector of the display (the Pixel Perfect View). So let’s run the tool.

Connect your device or launch an emulator

From a terminal, launch hierarchyviewer from your SDK /tools directory.

In the window that opens, you’ll see a list of Devices. When a device is selected, a list of currently active Windows is displayed on the right. The focused window is the window currently in the foreground, and also the default window loaded if you do not select another.

Select the window that you’d like to inspect and click Load View Hierarchy. The Layout View will be loaded. You can then load the Pixel Perfect View by clicking the second icon at the bottom-left of the window.

Read more: Blog

Microsoft Visual Studio 2010 Service Pack 1 (Installer)

Posted by jasper22 at 14:56

Brief Description

This web installer downloads and installs Visual Studio 2010 Service Pack 1. An Internet connection is required during installation. See the ‘Additional Information’ section below for alternative (ISO) download options. Please Note: This installer is for all editions of Visual Studio 2010 (Express, Professional, Premium, Ultimate, Test Professional).

Read more: MS Download

WCF Extensibility – Behaviors

Posted by jasper22 at 12:53

The first part of this series will focus on the behaviors. There are four kinds of behaviors, depending on the scope to which they apply: service, endpoint, contract and operation behaviors. The behavior interfaces are the main entry points for almost all the other extensibility points in WCF – it’s via the Apply[Client/Dispatch]Behavior method in the behavior interfaces that a user can get a reference to most of them.

Description vs. Runtime

The WCF behaviors are part of a service (or endpoint / contract / operation) description – as opposed to the service runtime. The description of a service is the set of objects that, well, describe what the service will be when it starts running – which happens when the host for the service is opened (e.g., in a self-hosted service, when the program calls ServiceHost.Open). When the service host instance is created, and endpoints are being added, no listeners (TCP sockets, HTTP listeners) have been started, and the program can modify the service description to define how it will behave once it’s running. That’s because, during the service setup, there are cases where the service is in an invalid state (for example, right after the ServiceHost instance is created, no endpoints are defined), so it doesn’t make sense for the host to be started at that point.

When Open is called on the host (or, in the case of an IIS/WAS-hosted service, when the first message arrives to activate the service), it initializes all the appropriate listeners, dispatchers, filters, channels, hooks, etc. that will cause an incoming message to be directed to the appropriate operation. Most of the extensibility points in WCF are actually part of the service runtime, and since the runtime is not initialized until the host is opened, the user needs a callback to be notified that the runtime is ready and the hooks can be used.
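To make the description / runtime split concrete, here is a minimal self-hosted sketch. The IPing contract, the PingService class and the net.pipe address are made up for illustration; the only point is to print the host state, the number of endpoints in the description and the number of channel dispatchers before and after Open.

```csharp
using System;
using System.ServiceModel;

// Hypothetical contract and service, used only for this illustration.
[ServiceContract]
public interface IPing
{
    [OperationContract]
    string Ping();
}

public class PingService : IPing
{
    public string Ping() { return "pong"; }
}

public static class DescriptionVersusRuntime
{
    public static void Main()
    {
        // Named pipes are used so the sketch does not need an HTTP URL reservation.
        var host = new ServiceHost(typeof(PingService), new Uri("net.pipe://localhost/description-demo"));

        // At this point only the description exists: endpoints can still be added
        // and nothing is listening yet.
        host.AddServiceEndpoint(typeof(IPing), new NetNamedPipeBinding(), "ping");
        Report("Before Open", host);

        // Open turns the description into the runtime (listeners, dispatchers, ...).
        host.Open();
        Report("After Open", host);

        host.Close();
    }

    private static void Report(string label, ServiceHost host)
    {
        Console.WriteLine(
            "{0}: state={1}, endpoints in description={2}, channel dispatchers={3}",
            label, host.State, host.Description.Endpoints.Count, host.ChannelDispatchers.Count);
    }
}
```

Running it against the .NET Framework (with a reference to System.ServiceModel) should show the runtime objects appearing only once the host has been opened.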

Behaviors

The WCF behaviors are defined by four interfaces (in the System.ServiceModel.Description namespace): IServiceBehavior, IEndpointBehavior, IContractBehavior and IOperationBehavior. They all share the same pattern:

public interface I[Service/Endpoint/Contract/Operation]Behavior {

void Validate(DescriptionObject);

void AddBindingParameters(DescriptionObject, BindingParameterCollection);

void ApplyDispatchBehavior(DescriptionObject, RuntimeObject);

void ApplyClientBehavior(DescriptionObject, RuntimeObject); // not on IServiceBehavior

}

The order in which the methods of the interfaces are called is the following:

- Validate: This gives the behavior an opportunity to prevent the host (or the client) from opening (by throwing an exception) if the validation logic finds something in the service / endpoint / contract / operation description which it deems invalid.

- AddBindingParameters: This gives the behavior an opportunity to add parameters to the BindingParameterCollection, which is used by the binding elements when they’re creating the listeners / factories at runtime. Useful to add correlation objects between behaviors and bindings. For service behaviors, this is actually called once per endpoint, since the BindingParameterCollection is used when creating the listeners for each endpoint.

- Apply[Client/Dispatch]Behavior: This is where we can get a reference to the runtime objects and modify them. This is the most used of the behavior methods (in most cases the other two methods are left blank). In the posts about each specific behavior I’ll have examples of them being used in real scenarios.

Within each method, the service behavior is called first, then the contract, then the endpoint, and finally the operation behaviors within that contract.
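The call order described above can be observed with a do-nothing endpoint behavior. This is only a sketch: TracingEndpointBehavior, IEcho, EchoService and the net.pipe address are names invented for the example, and the behavior merely writes a line whenever one of its methods is invoked. It is attached in code through the endpoint's Behaviors collection.

```csharp
using System;
using System.ServiceModel;
using System.ServiceModel.Channels;
using System.ServiceModel.Description;
using System.ServiceModel.Dispatcher;

// Hypothetical contract and service, used only for this illustration.
[ServiceContract]
public interface IEcho
{
    [OperationContract]
    string Echo(string text);
}

public class EchoService : IEcho
{
    public string Echo(string text) { return text; }
}

// Hypothetical behavior: it changes nothing and only reports which method was called.
public class TracingEndpointBehavior : IEndpointBehavior
{
    public void Validate(ServiceEndpoint endpoint)
    {
        // Throwing here would prevent the host (or client) from opening.
        Console.WriteLine("Validate: " + endpoint.Name);
    }

    public void AddBindingParameters(ServiceEndpoint endpoint, BindingParameterCollection bindingParameters)
    {
        Console.WriteLine("AddBindingParameters: " + endpoint.Name);
    }

    public void ApplyDispatchBehavior(ServiceEndpoint endpoint, EndpointDispatcher endpointDispatcher)
    {
        // Server-side runtime objects (endpointDispatcher.DispatchRuntime, ...) are reachable here.
        Console.WriteLine("ApplyDispatchBehavior: " + endpoint.Name);
    }

    public void ApplyClientBehavior(ServiceEndpoint endpoint, ClientRuntime clientRuntime)
    {
        // Only called when the endpoint is used by a client.
        Console.WriteLine("ApplyClientBehavior: " + endpoint.Name);
    }
}

public static class BehaviorOrderDemo
{
    public static void Main()
    {
        var host = new ServiceHost(typeof(EchoService), new Uri("net.pipe://localhost/behavior-demo"));
        ServiceEndpoint endpoint = host.AddServiceEndpoint(typeof(IEcho), new NetNamedPipeBinding(), "echo");

        // Adding the behavior in code, through the description object's collection.
        endpoint.Behaviors.Add(new TracingEndpointBehavior());

        host.Open();   // the behavior methods are invoked while the runtime is built
        host.Close();
    }
}
```

Because this is a service host, ApplyClientBehavior should stay silent; attaching the same behavior to a client's ChannelFactory endpoint would exercise it instead.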

Adding behaviors to WCF

The behaviors can be added in three different ways:

- Code: The description of the service / endpoint / contract / operation objects have a property with a collection of behaviors associated with that object; by using this reference you can simply add one of the behaviors.

- Configuration: Available for service and endpoint behaviors only, which can be added via the system.serviceModel/behaviors section.

Read more: Carlos' blog

7 Ways to Protect your .NET Code from Reverse-Engineering

Posted by

jasper22

at

12:48

|

Introduction

If you are making your software available internationally, and your software is written in .NET (which is particularly easy to decompile), then you ought to consider protecting your code. You can protect your code in various ways, including obfuscation, pruning, resource encryption, and string encoding. In this article, we will show you 7 different ways to protect your Intellectual Property against reverse-engineering, theft, and modification, by using just one tool: SmartAssembly.

Obfuscation

Obfuscation is a classic code protection technique used to make your code hard to read. Obfuscation changes the name of your classes and methods to unreadable or meaningless characters, making it more difficult for others to understand your code.

SmartAssembly offers a choice between type/method name mangling and field name mangling.

Obfuscating type and method names

Read more: Codeproject

59 Open Source Tools That Can Replace Popular Security Software

Posted by

jasper22

at

12:47

|

It's been about a year since we last updated our list of open source tools that can replace popular security software. This year's list includes many old favorites, but we also found some that we had previously overlooked.

In addition, we added a new category -- data loss prevention apps. With all the attention generated by the WikiLeaks scandal, more companies are investing in this type of software, and we found a couple of good open source options.

Thanks to Datamation readers for their past suggestions of great open source security apps. Feel free to suggest more in the comments section below.

Anti-Spam

1. ASSP Replaces: Barracuda Spam and Virus Firewall, SpamHero, Abaca Email Protection Gateway

ASSP (short for "Anti-Spam SMTP Proxy") humbly calls itself "the absolute best SPAM fighting weapon that the world has ever known!" It works with most SMTP servers to stop spam and scan for viruses (using ClamAV). Operating System: OS Independent.

2. MailScanner Replaces: Barracuda Spam and Virus Firewall, SpamHero, Abaca Email Protection Gateway

Used by more than 100,000 sites, MailScanner leverages Apache's SpamAssassin project and ClamAV to provide anti-spam and anti-virus capabilities. It's designed to sit on corporate mail gateways or ISP servers to protect end users from threats. Operating System: OS Independent.

3. SpamAssassin Replaces: Barracuda Spam and Virus Firewall, SpamHero, Abaca Email Protection Gateway

This Apache project declares itself "the powerful #1 open-source spam filter." It uses a variety of different techniques, including header and text analysis, Bayesian filtering, DNS blocklists, and collaborative filtering databases, to filter out bulk e-mail at the mail server level. Operating System: primarily Linux and OS X, although Windows versions are available.

4. SpamBayes Replaces: Barracuda Spam and Virus Firewall, SpamHero, Abaca Email Protection Gateway

This group of tools uses Bayesian filters to identify spam based on keywords contained in the messages. It includes an Outlook plug-in for Windows users as well as a number of different versions that work for other e-mail clients and operating systems. Operating System: OS Independent.

Anti-Virus/Anti-Malware

5. ClamAV Replaces: Avast! Linux Edition, VirusScan Enterprise for Linux

Undoubtedly the most widely used open-source anti-virus solution, ClamAV quickly and effectively blocks Trojans, viruses, and other kinds of malware. The site now also offers paid Windows software called "Immunet," which is powered by the same engine. Operating System: Linux.

Read more: Datamation

Debian GNU/Linux 6.0 (squeeze)

Posted by

jasper22

at

12:46

|

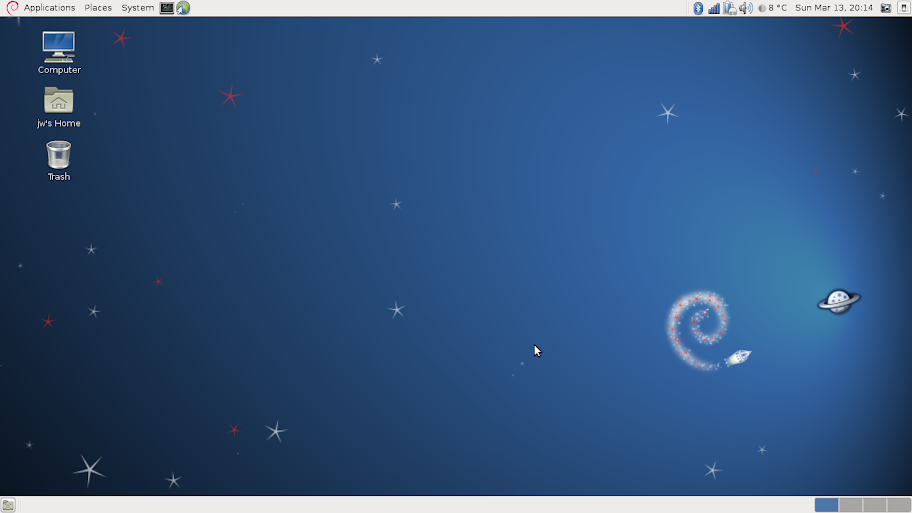

I have been a bit slack in not writing about the release of Debian GNU/Linux 6.0, which was made over a month ago now. A new Debian stable release is always a big deal, not least because it doesn't happen very often, and it doesn't happen on a predictable, regular schedule. It is also because Debian is the base used for a lot of other Linux distributions, including Ubuntu (and thus Linux Mint and the plethora of other Ubuntu derivatives), SimplyMEPIS, Knoppix and more. But there are a lot of people who use Debian itself as the basis for a personally constructed and customised Linux installation - including some of the regular readers here on ZDNet UK.

So far, I have installed Debian 6.0 on three of my laptop/netbook systems: Fujitsu Lifebook S6510, Lenovo S10-3s and Samsung NF310. The screenshot above was taken on the Samsung:

Read more: Jamie's Mostly Linux Stuff

Linux 2.6.38 Boosts Performance

Posted by

jasper22

at

12:42

|

The second major Linux kernel release of 2011 is now available, offering open source users enhanced performance over its predecessors.

The Linux 2.6.38 kernel goes a step beyond what Linux developers provided in the 2.6.37 kernel released earlier this year, by eliminating the last main global lock, which further unlocks Linux performance.

"There are many performance enhancements that went into 2.6.38, Transparent Hugepages is one of those noticeable features," Tim Burke, vice president of Linux Engineering at Red Hat told InternetNews.com. "To give you a better idea of how important Red Hat considers transparent hugepages, Red Hat led the initial upstream implementation in the timeframe to include in Red Hat Enterprise Linux 6. We continue to evolve the types of memory use cases to be fully covered by transparent hugepages."

With Transparent Huge Pages (THP), the kernel can back memory with 2 MB pages instead of the standard 4 KB pages. Burke explained that THP reduces the number of memory allocations and leverages higher performance hardware.

"The impact of the inclusion of the Transparent Huge Pages (THP) in 2.6.38 is that it will offer improved performance on workloads that requires large amount of memory, such as JVM and database servers," Burke said.

Burke noted that one of the main beneficiaries of THP is virtualization.

Read more: internetnews.com

Adding Restart/Recovery capability to your app's with "Windows Restart and Recovery Recipe" code recipe from MSDN Code Gallery

Posted by

jasper22

at

12:41

|

Windows 7 includes two closely related features: Application Restart and Application Recovery. These features are designed to eliminate much of the pain caused by the unexpected termination of applications, whether as a result of an unhandled exception, a “hard hang,” or an external request that is part of updating a DLL or other resource in use by the application.

Application Recovery applies to applications that have a “document” or any other in-memory information that might be lost when the application closes unexpectedly, such as when an unhandled exception is thrown. Application Restart applies to all applications, whether they might have unsaved data or not, and to more unexpected closes, including a DLL update or a system reboot.

Any Windows application can register with the operating system for restart, and optionally for recovery.

To register for recovery, the application provides a callback function. The operating system will call this function on a separate thread (remember, your main thread just threw an exception) if the application crashes or hangs. The callback function can save any unsaved data in a known location. It cannot access the UI thread, which is no longer running. Note that the callback function can’t run forever: the OS will not permit it to run for more than a few seconds.

To register for restart, the application provides a command line argument that will inform the application it is restarting. A restarted application that uses recovery can look in the known location for recovery data when it is restarted, and reload the unsaved data.
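For readers who want to see what sits underneath, the registration steps above can be sketched with direct P/Invoke calls to the Win32 functions in kernel32.dll. This is not the recipe's managed wrapper, just an illustration; the class name, the "/restarted" switch and the recovery file path are assumptions made up for the example, and it only works on Windows Vista or later.

```csharp
using System;
using System.IO;
using System.Runtime.InteropServices;

public static class RestartRecoverySketch
{
    // Managed shape of the native recovery callback: DWORD WINAPI Callback(PVOID parameter).
    private delegate int RecoveryCallback(IntPtr parameter);

    [DllImport("kernel32.dll", CharSet = CharSet.Unicode)]
    private static extern int RegisterApplicationRestart(string commandLine, int flags);

    [DllImport("kernel32.dll")]
    private static extern int RegisterApplicationRecoveryCallback(
        RecoveryCallback callback, IntPtr parameter, int pingIntervalMs, int flags);

    [DllImport("kernel32.dll")]
    private static extern int ApplicationRecoveryInProgress(out bool cancelled);

    [DllImport("kernel32.dll")]
    private static extern void ApplicationRecoveryFinished(bool success);

    // Hypothetical names chosen for this sketch.
    public const string RestartSwitch = "/restarted";
    public static readonly string RecoveryFile =
        Path.Combine(Path.GetTempPath(), "restart-recovery-sketch.txt");

    // Stands in for the application's unsaved "document".
    public static string UnsavedText = string.Empty;

    // Kept in a static field so the delegate is not garbage collected while the OS may call it.
    private static readonly RecoveryCallback Callback = SaveUnsavedData;

    public static void Register()
    {
        // Restart: the OS relaunches the executable with this command-line argument.
        Marshal.ThrowExceptionForHR(RegisterApplicationRestart(RestartSwitch, 0));
        // Recovery: 5000 ms is the ping interval within which the callback must report progress.
        Marshal.ThrowExceptionForHR(
            RegisterApplicationRecoveryCallback(Callback, IntPtr.Zero, 5000, 0));
    }

    // Called by the OS on a separate thread after a crash or hang.
    private static int SaveUnsavedData(IntPtr parameter)
    {
        bool cancelled;
        ApplicationRecoveryInProgress(out cancelled); // tells the OS the callback is still working
        if (!cancelled)
        {
            File.WriteAllText(RecoveryFile, UnsavedText);
        }
        ApplicationRecoveryFinished(!cancelled);
        return 0;
    }

    // Returns the recovered text when started with the restart switch, otherwise null.
    public static string LoadRecoveredText(string[] args)
    {
        bool restarted = Array.IndexOf(args, RestartSwitch) >= 0;
        return restarted && File.Exists(RecoveryFile) ? File.ReadAllText(RecoveryFile) : null;
    }

    public static void Main(string[] args)
    {
        Register();
        UnsavedText = LoadRecoveredText(args) ?? "new document";
        Console.WriteLine("Working on: " + UnsavedText);
    }
}
```

One thing to keep in mind when trying this out: Windows only restarts an application that had been running for at least 60 seconds before it failed, so a process that crashes immediately after launch will not come back.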

This recipe provides guidance and an easy way to start using these great features in your application, removing any complication of how and where to store your application data.

What’s in the box?

This Restart and Recovery recipe includes:

Complete source code of the recipe and its samples

Managed .NET assembly

C++ header and class files to be included in your C++ application.

C# and C++ test applications

Documentation

Read more: Greg's Cool [Insert Clever Name] of the Day

Read more: MSDN

ASP.NET WF4 / WCF and Async Calls

Posted by

jasper22

at

12:38

|

How should you use WF4 and WCF with ASP.NET?

For this post I’ve created a really simple workflow and WCF service that delay for a specific amount of time and then return a value. Then I created an ASP.NET page that I can use to invoke the workflow and WCF service to test their behavior.

The Workflow Definition

First off – let’s get one thing straight. When you create a workflow, you are creating a workflow definition. The workflow definition still has to be validated, expressions compiled etc. and this work only needs to be done once. When you hand this workflow definition to WorkflowInvoker or WorkflowApplication it will create a new instance of the workflow (which you will never see).

To make this clear, in this sample I have a workflow file SayHello.xaml but I named the variable SayHelloDefinition.

private static readonly Activity SayHelloDefinition = new SayHello();

The Easy Way

If you want to do something really simple, you can just invoke workflows and services synchronously using WorkflowInvoker:

private void InvokeWorkflow(int delay)

{

var input = new Dictionary<string, object> { { "Name", this.TextBoxName.Text }, { "Delay", delay } };

this.Trace.Write(string.Format("Starting workflow on thread {0}", Thread.CurrentThread.ManagedThreadId));

var output = WorkflowInvoker.Invoke(SayHelloDefinition, input);

this.Trace.Write(string.Format("Completed workflow on thread {0}", Thread.CurrentThread.ManagedThreadId));

this.LabelGreeting.Text = output["Greeting"].ToString();

}

Read more: Ron Jacobs

Anatomy of a .NET Assembly - PE Headers

Posted by

jasper22

at

12:36

|

Today, I'll be starting a look at what exactly is inside a .NET assembly - how the metadata and IL are stored, how Windows knows how to load it, and what all those bytes are actually doing. First of all, we need to understand the PE file format.

PE files

.NET assemblies are built on top of the PE (Portable Executable) file format that is used for all Windows executables and dlls, which itself is built on top of the MSDOS executable file format. The reason for this is that when .NET 1 was released, it wasn't a built-in part of the operating system like it is nowadays. Prior to Windows XP, .NET executables had to load like any other executable, and had to execute native code to start the CLR to read & execute the rest of the file.

However, starting with Windows XP, the operating system loader knows natively how to deal with .NET assemblies, rendering most of this legacy code & structure unnecessary. It still is part of the spec, and so is part of every .NET assembly.

The result of this is that there are a lot of structure values in the assembly that simply aren't meaningful in a .NET assembly, as they refer to features that aren't needed. These are either set to zero or to certain pre-defined values, specified in the CLR spec. There are also several fields that specify the size of other data structures in the file, which I will generally be glossing over in this initial post.

Structure of a PE file

Most of a PE file is split up into separate sections; each section stores different types of data. For instance, the .text section stores all the executable code; .rsrc stores unmanaged resources, .debug contains debugging information, and so on. Each section has a section header associated with it; this specifies whether the section is executable, read-only or read/write, whether it can be cached...

When an exe or dll is loaded, each section can be mapped into a different location in memory as the OS loader sees fit. In order to reliably address a particular location within a file, most file offsets are specified using a Relative Virtual Address (RVA). This specifies the offset from the address at which the file is loaded into memory, rather than the offset within the executable file on disk, so the various sections can be moved around in memory without breaking anything. The mapping from RVA to file offset is done using the section headers, which specify the range of RVAs which are valid within that section.

For example, if the .rsrc section header specifies that the base RVA is 0x4000, and the section starts at file offset 0xa00, then an RVA of 0x401d (offset 0x1d within the .rsrc section) corresponds to a file offset of 0xa1d. Because each section has its own base RVA, each valid RVA has a one-to-one mapping with a particular file offset.
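The lookup in that example can be written down in a few lines. The sketch below uses a simplified, made-up SectionHeader type holding only the fields needed here (the names follow the PE section header fields), and the .text values and both section sizes are invented; only the .rsrc base RVA and file offset come from the example above.

```csharp
using System;

// Simplified section header: only the fields needed to map an RVA to a file offset.
public struct SectionHeader
{
    public string Name;
    public uint VirtualAddress;    // base RVA of the section
    public uint VirtualSize;       // size of the section once loaded
    public uint PointerToRawData;  // file offset where the section starts
}

public static class RvaMapper
{
    public static uint ToFileOffset(uint rva, SectionHeader[] sections)
    {
        foreach (SectionHeader section in sections)
        {
            bool inside = rva >= section.VirtualAddress
                       && rva < section.VirtualAddress + section.VirtualSize;
            if (inside)
            {
                // Offset within the section, rebased onto the section's position in the file.
                return rva - section.VirtualAddress + section.PointerToRawData;
            }
        }
        throw new ArgumentOutOfRangeException("rva", "RVA does not fall inside any section.");
    }

    public static void Main()
    {
        var sections = new[]
        {
            // Invented values for illustration.
            new SectionHeader { Name = ".text", VirtualAddress = 0x2000, VirtualSize = 0x800, PointerToRawData = 0x200 },
            // Base RVA and file offset taken from the example in the text; the size is invented.
            new SectionHeader { Name = ".rsrc", VirtualAddress = 0x4000, VirtualSize = 0x400, PointerToRawData = 0xa00 },
        };

        Console.WriteLine("0x{0:x}", ToFileOffset(0x401d, sections)); // prints 0xa1d
    }
}
```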

PE headers

As I said above, most of the header information isn't relevant to .NET assemblies. To help show what's going on, I've created a diagram identifying all the various parts of the first 512 bytes of a .NET executable assembly. I've highlighted the relevant bytes that I will refer to in this post:

Read more: Simple talk

7000 Concurrent Connections With Asynchronous WCF

Posted by

jasper22

at

12:32

|

It’s rare that a web service has some intensive processor bound computation to execute. Far more common for business applications is a web service that executes one or more IO intensive operations. Typically our web service would access a database over the network, read and write files, or maybe call another web service. If we execute these operations synchronously, the thread that processes the web service request will spend most of its time waiting on IO. By executing IO operations asynchronously we can free the thread processing the request to process other requests while waiting for the IO operation to complete.

In my experiments with a simple self-hosted WCF service, I’ve been able to demonstrate up to 7000 concurrent connections handled by just 12 threads.

Before I show you how to write an asynchronous WCF service, I want to clear up the commonly held misconception (yes, by me too until a year or so ago), that asynchronous IO operations spawn threads. Many of the APIs in the .NET BCL (Base Class Library) provide asynchronous versions of their methods. So, for example, HttpWebRequest has a BeginGetResponse / EndGetResponse method pair alongside the synchronous method GetResponse. This pattern is called the Asynchronous Programming Model (APM). When the APM supports IO operations, they are implemented using an operating system service called IO Completion Ports (IOCP). IOCP provides a queue where IO operations can be parked while the OS waits for them to complete, and provides a thread pool to handle the completed operations. This means that in-progress IO operations do not consume threads.
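As a small illustration of the Begin / End shape of the APM, here is a sketch that reads a file with FileStream.BeginRead and FileStream.EndRead. A local file is used instead of HttpWebRequest purely so the example runs without a network; the ApmDemo class and its method names are invented for this sketch. The stream is opened with useAsync set to true, which on Windows requests overlapped IO.

```csharp
using System;
using System.IO;
using System.Text;
using System.Threading;

public static class ApmDemo
{
    public static string ReadWithApm(string path)
    {
        var buffer = new byte[4096];
        string text = null;

        using (var done = new ManualResetEvent(false))
        using (var stream = new FileStream(path, FileMode.Open, FileAccess.Read,
                                           FileShare.Read, buffer.Length, true))
        {
            // The 'begin' half starts the read and returns immediately.
            stream.BeginRead(buffer, 0, buffer.Length, asyncResult =>
            {
                // The 'end' half runs in the callback once the read has completed.
                int bytesRead = stream.EndRead(asyncResult);
                text = Encoding.UTF8.GetString(buffer, 0, bytesRead);
                done.Set();
            }, null);

            Console.WriteLine("Read started; this thread is free to do other work.");

            // A console demo has nothing else to do, so it simply waits for the callback.
            // A real service would return the thread instead of blocking here.
            done.WaitOne();
        }

        return text;
    }

    public static void Main()
    {
        string path = Path.GetTempFileName();
        File.WriteAllText(path, "hello from APM");
        Console.WriteLine(ReadWithApm(path));
        File.Delete(path);
    }
}
```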

The WCF infrastructure allows you to define your operation contracts using APM. Here’s a contract for a GetCustomerDetails operation:

[ServiceContract(SessionMode = SessionMode.NotAllowed)]

public interface ICustomerService

{

[OperationContract(AsyncPattern = true)]

IAsyncResult BeginGetCustomerDetails(int customerId, AsyncCallback callback, object state);

Customer EndGetCustomerDetails(IAsyncResult asyncResult);

}

Essentially ‘GetCustomerDetails’ takes a customerId and returns a Customer. In order to create an asynchronous version of the contract I’ve simply followed the APM pattern and created a BeginGetCustomerDetails and an EndGetCustomerDetails. You tell WCF that you are implementing APM by setting AsyncPattern to true on the operation contract.

The IAsyncResult that’s returned from the ‘begin’ method and passed as an argument to the ‘end’ method links the two together. Here’s a simple implementation of IAsyncResult that I’ve used for these experiments; you should be able to use it for any asynchronous WCF service:

public class SimpleAsyncResult<T> : IAsyncResult

{

private readonly object accessLock = new object();

private bool isCompleted = false;

private T result;

public SimpleAsyncResult(object asyncState)

{

AsyncState = asyncState;

}

public T Result

{

get

{

lock (accessLock)

{

return result;

}

}

set

{

lock (accessLock)

{

result = value;

}

}

}

public bool IsCompleted

{

get

{

lock (accessLock)

{

return isCompleted;

}

}

set

{

lock (accessLock)

{

isCompleted = value;

}

}

}

// WCF seems to use the async callback rather than checking the wait handle

// so we can safely return null here.

public WaitHandle AsyncWaitHandle { get { return null; } }

Read more: Code rant

Generating Open XML WordprocessingML Documents

This is a blog post series on parameterized Open XML WordprocessingML document generation. While it is easy enough to write a purpose-built application that generates WordprocessingML documents, too often, developers find themselves building new applications for similar but somewhat different scenarios. However, if we take the right approach, it is possible to build a simple document generation system that makes it far easier to address a wide variety of scenarios. I believe that a flexible document generation system can be written in a few hundred lines of code. This page lists the posts that are part of the series. I’ll be updating this page with new posts as I write them.

| # | Post Title | Description |
|---|---|---|
| 1 | Generating Open XML WordprocessingML Documents | Introduces this blog post series, outlines the goals of the series, and describes various approaches that I may take as I develop some document generation examples. |
| 2 | Using a WordprocessingML Document as a Template in the Document Generation Process | In this post, I examine the approaches for building a template document for the document generation process. In my approach to document generation, a template document is a DOCX document that contains content controls that will control the document generation process. |
| 3 | The Second Iteration of the Template Document | Based on feedback, this post shows an updated design for the template document. |
| 4 | More enhancements to the Template Document | This post discusses an enhancement to the document template that enables the template designer to add infrastructure code. In addition, it discusses how the document generation process will work. |
| 5 | Generating C# Code from an XML Tree using Virtual Extension Methods | Presents code that, given any arbitrary LINQ to XML tree, can generate code that will create that tree. The code to generate code is written as a recursive functional transform from XML to C#. |
| 6 | Simulating Virtual Extension Methods | Shows one approach for extending a class hierarchy by simulating virtual extension methods. |
| 7 | Refinement: Generating C# code from an XML Tree using Virtual Extension Methods | Makes the approach of generating code that will generate an arbitrary XML tree more robust. |
| 8 | Text Templates (T4) and the Code Generation Process | Explores T4 text templates, and considers how they can be used in the Open XML document generation process. |
| 9 | A Super-Simple Template System | Defines a template system that makes it easier to generate C# code. |
| 10 | Video of use of Document Generation Example | Screen-cast that shows the doc gen system in action. |
| 11 | Release of V1 of Simple DOCX Generation System | Release of the first version of this simple prototype doc gen system. |
| 12 | Changing the Schema for the Document Generation System | Contains a short screen-cast that shows how to adjust the data coming into the doc gen system, and to adjust the document template to use the new data. |

Read more: Eric White's Blog
